After Install Fedora Workstation 38 (Gnome GUI), this tutorial shows what to expect from a freshly installed Fedora 38 Workstation: the look and feel of the GUI (GNOME version 44.0).

- Xorg X11 server – 1.20.14 and Xorg X11 server XWayland – 22.1.9 are used by default

- GNOME (the GUI) – 44.0

- Linux kernel – 6.2.9
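To compare a running system with the versions listed above, the kernel release string can be split into its parts. The following is only a minimal sketch in Python. It assumes the release string follows the layout `<version>-<build>.fc<N>.<arch>`, as in `6.2.9-300.fc38.x86_64`, and the function name `parse_kernel_release` is hypothetical, chosen only for illustration.

```python
import platform
import re

# Assumed Fedora kernel release layout: <version>-<build>.fc<N>.<arch>
_RELEASE_RE = re.compile(
    r"^(?P<version>\d+\.\d+\.\d+)-(?P<build>\d+)\.fc(?P<fedora>\d+)\.(?P<arch>\w+)$"
)


def parse_kernel_release(release):
    """Split a Fedora kernel release string into its parts.

    Returns a dict with the keys version, build, fedora and arch,
    or None if the string does not match the assumed layout.
    """
    match = _RELEASE_RE.match(release)
    if match is None:
        return None
    return match.groupdict()


if __name__ == "__main__":
    # platform.release() returns the release string of the running kernel.
    print(parse_kernel_release(platform.release()))
```

For the release string shown in the first screenshot, `6.2.9-300.fc38.x86_64`, the function returns the kernel version `6.2.9`, the build number `300`, the Fedora release `38` and the architecture `x86_64`. On a kernel with a different naming scheme it returns `None`.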

The idea of this tutorial is just to show what to expect from Fedora 38 (https://docs.fedoraproject.org/en-US/releases/f38/): the look and feel of the GUI, the programs installed by default, how they look, and how to do some basic steps with them. The reader will find more than 214 screenshots and not much text. The main idea is not to distract the user with a lot of text, version information, and 3 meaningless screenshots that show nothing of the user interface, because these days the user interface is the primary goal of a desktop system. For comparison, there are a couple of reviews of older versions, too: Review of freshly installed Fedora 37 Workstation (Gnome GUI), Review of freshly installed Fedora 36 Workstation (Gnome GUI), and more.

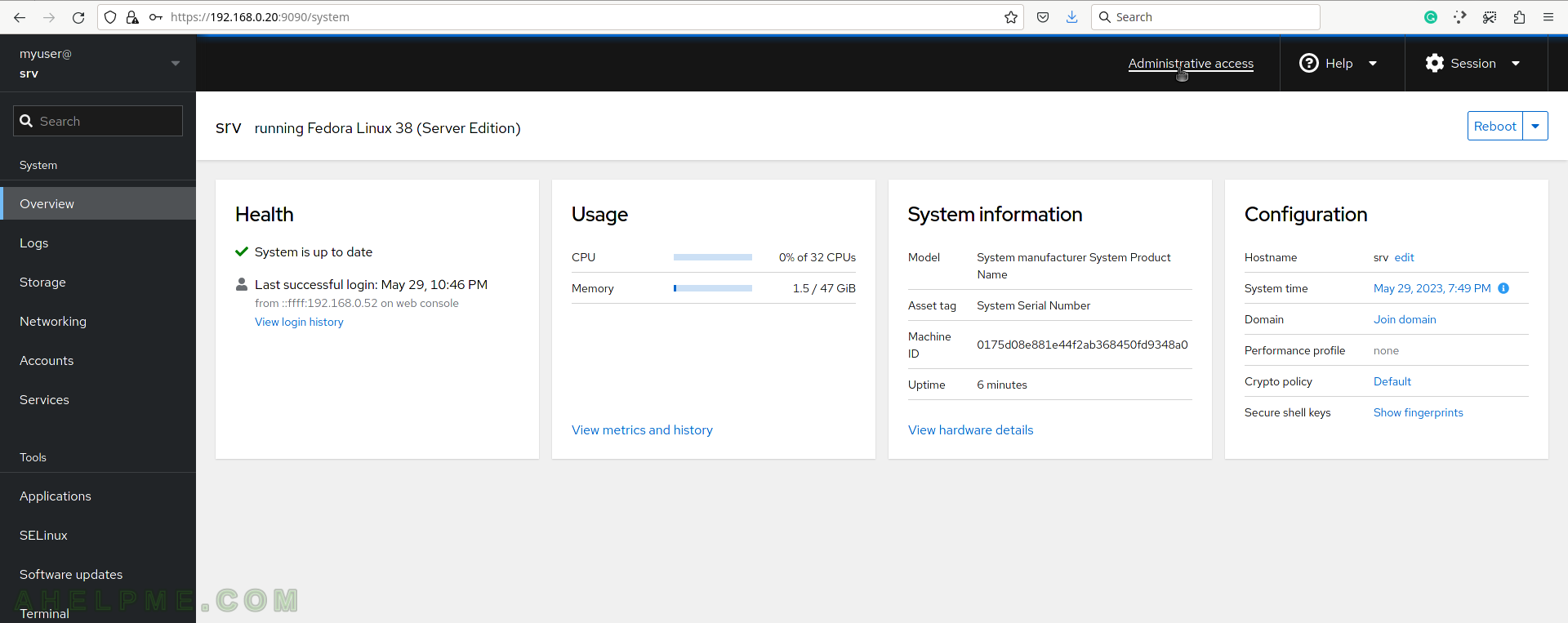

For more details about which software versions can be installed, check out the Software and technical details of Fedora Server 38 including cockpit screenshots. The same software can be installed in Fedora 38 Workstation to build a decent development desktop system.

For all installation and review articles, real workstations are used, not virtual environments!

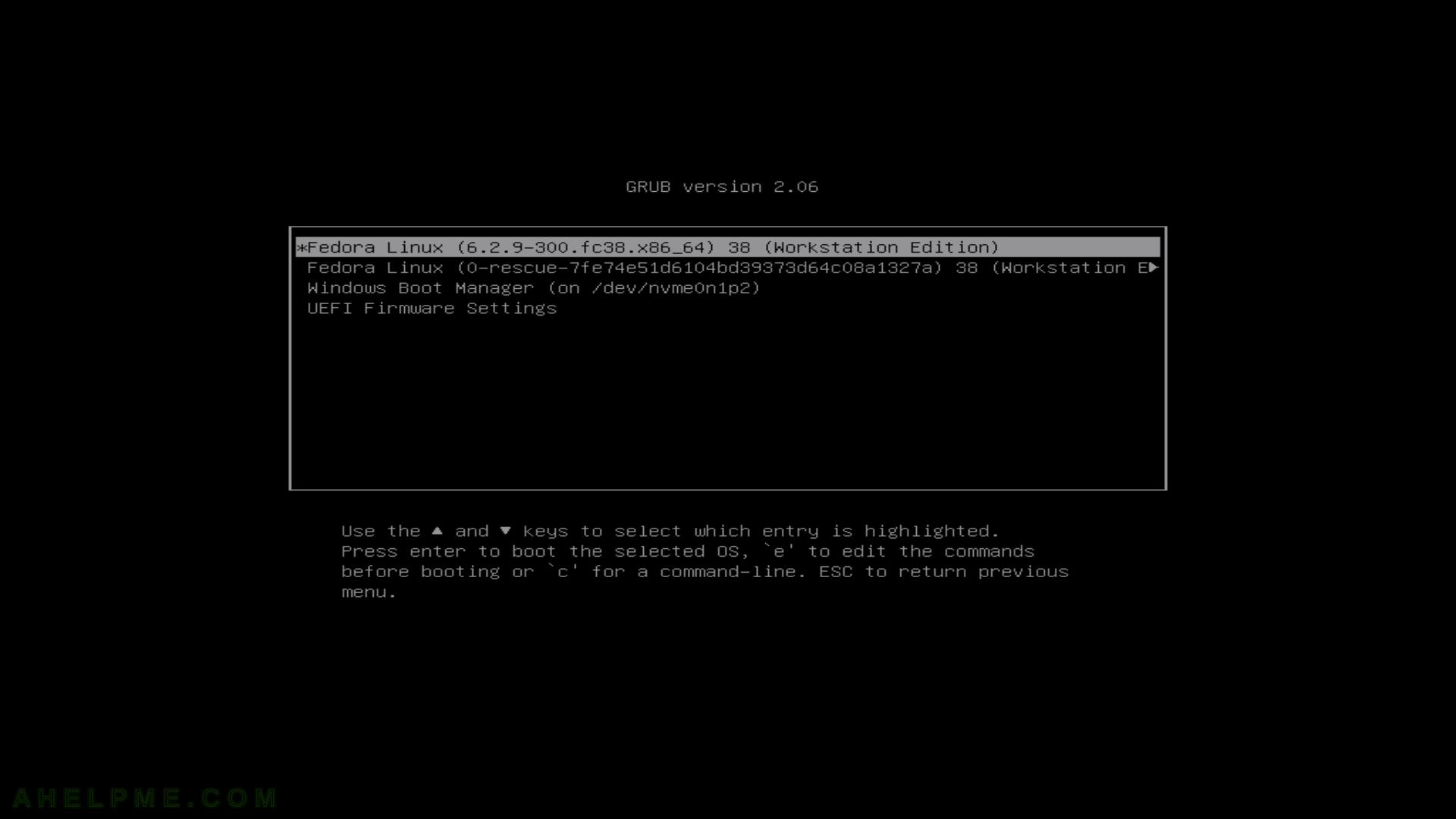

SCREENSHOT 1) Fedora Linux (6.2.9-300.fc38.x86_64) 38 (Workstation Edition)
