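Binding the GlusterFS nodes to a physical interface may lead to local availability problems, even on replicated nodes. Bringing down the physical interface brings down the node's brick as well, including the local replica used by the local mounts and applications. Resolving each node's own brick hostname to the loopback interface avoids this and has the following benefits: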

- 100% local uptime. The local replica is always available to the local system, because the loopback interface is always up. Physical network interfaces are not: a network reload, an unplugged cable, or a port going down during a switch configuration reload can make the local node briefly unavailable, even to the local system.

- Node name resolution relies on /etc/hosts records, not on the network or a remote DNS system.

- No network speed limit for local reads. Reads from the local system through the loopback interface can easily exceed 1 Gbit/s or even 10 Gbit/s, even though the server is connected to a 1G network. Nodes that replicate over a 1G network probably benefit much more than nodes with 10G connectivity.

An additional note: another variant of the proposed solution is to bind the IP of the GlusterFS brick to a virtual interface. The most common choice is a bridge interface that carries the IP.

The example here brings up a GlusterFS volume in replication mode on 3 servers, i.e. 3 replicas. Each server may mount the GlusterFS volume, named VOL1, locally, and the mount would not become unavailable if the main interface goes down:

- server’s hostname: node1, hostname for the replica brick: glnode1. IP: 192.168.0.20, but on node1 glnode1 resolves to 127.0.0.1 through /etc/hosts.

- server’s hostname: node2, hostname for the replica brick: glnode2. IP: 192.168.0.30, but on node2 glnode2 resolves to 127.0.0.1 through /etc/hosts.

- server’s hostname: node3, hostname for the replica brick: glnode3. IP: 192.168.0.31, but on node3 glnode3 resolves to 127.0.0.1 through /etc/hosts.

Of course, the server’s hostname could be used, but it is better to have separate hostnames for the GlusterFS bricks. Sometimes the server hostname must resolve to a real IP, or some software may rely on it.

And here are all the commands to bring up the GlusterFS volume on 3 servers:

STEP 1) Install GlusterFS software and initial configuration.

There are GlusterFS packages in the official CentOS 8 repositories, but a newer version is available from the Storage SIG. The GlusterFS version installed in this article is 8.4. Install the software on node1, node2, and node3.

yum install -y centos-release-gluster

yum install -y glusterfs-server

Add the following lines at the end of the /etc/hosts file on node1.

127.0.0.1 glnode1

192.168.0.30 glnode2

192.168.0.31 glnode3

Add the following lines at the end of the /etc/hosts file on node2.

192.168.0.20 glnode1

127.0.0.1 glnode2

192.168.0.31 glnode3

Add the following lines at the end of the /etc/hosts file on node3.

192.168.0.20 glnode1

192.168.0.30 glnode2

127.0.0.1 glnode3
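The three /etc/hosts fragments above follow one rule: every node lists all brick hostnames with their real IPs, except its own, which points to 127.0.0.1. A minimal sketch of that rule in shell is shown here; the function name gluster_hosts_lines is hypothetical and only the names and IPs come from this article. It only prints the lines, so they can be reviewed before being appended to /etc/hosts.

```shell
#!/bin/sh
# Print the /etc/hosts lines for one node of the example cluster.
# Usage: gluster_hosts_lines LOCAL_BRICK_NAME
# (hypothetical helper; the name/IP pairs are the ones used in this article)
gluster_hosts_lines() {
    local_name=$1
    while read -r ip name; do
        if [ "$name" = "$local_name" ]; then
            # The node's own brick hostname points at the loopback address.
            echo "127.0.0.1 $name"
        else
            # All other bricks keep their real IPs.
            echo "$ip $name"
        fi
    done <<EOF
192.168.0.20 glnode1
192.168.0.30 glnode2
192.168.0.31 glnode3
EOF
}

# Example: print the lines intended for node2.
gluster_hosts_lines glnode2
```

Running it with glnode1 or glnode3 prints the lines for the other two nodes.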

Start the GlusterFS service on the three nodes:

systemctl start glusterd

Mount the storage device, if any, and create the directory where the GlusterFS brick will reside:

mount /mnt/storage/

mkdir -p /mnt/storage/gluster/brick
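Since mounting the storage device is optional here, it can be useful to see whether the brick's base directory is a separate mount point before creating the brick directory. The following is a small sketch under that assumption; prepare_brick_dir is a hypothetical helper, and the check is skipped when the mountpoint utility is not installed.

```shell
#!/bin/sh
# Create the brick directory and note when its base is not a mount point.
# Usage: prepare_brick_dir BASE_DIR BRICK_DIR
# (hypothetical helper, not part of GlusterFS)
prepare_brick_dir() {
    base=$1
    brick=$2
    # mountpoint(1) exits non-zero when BASE_DIR is a plain directory.
    if command -v mountpoint >/dev/null 2>&1 && ! mountpoint -q "$base"; then
        echo "note: $base is not a separate mount point" >&2
    fi
    mkdir -p "$brick"
}

# Example for this article's layout (run as root on each node):
# prepare_brick_dir /mnt/storage /mnt/storage/gluster/brick
```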

STEP 2) Configure the firewall.

CentOS 8 uses firewalld. Here a new zone for GlusterFS is created, the three IPs of the nodes are added to it as sources, and the glusterfs service is allowed in the new zone:

firewall-cmd --permanent --new-zone=glusternodes

firewall-cmd --permanent --zone=glusternodes --add-source=192.168.0.20

firewall-cmd --permanent --zone=glusternodes --add-source=192.168.0.30

firewall-cmd --permanent --zone=glusternodes --add-source=192.168.0.31

firewall-cmd --permanent --zone=glusternodes --add-service=glusterfs

firewall-cmd --reload

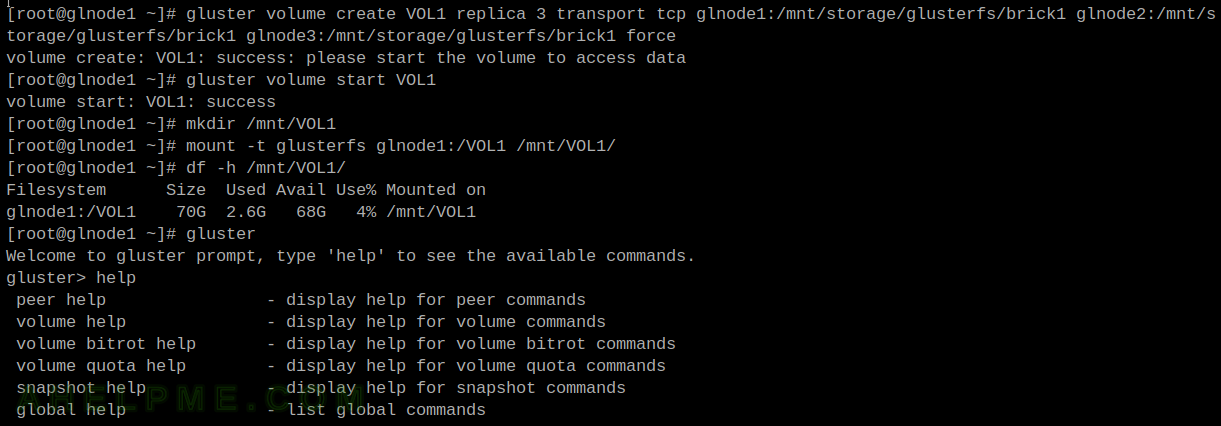
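With more nodes, the per-IP lines become repetitive. As a sketch, the same command sequence can be generated from a zone name and a list of node IPs; gluster_firewall_cmds is a hypothetical helper name, and it only prints the commands used above instead of running them.

```shell
#!/bin/sh
# Print the firewalld commands for a GlusterFS zone, one per line.
# Usage: gluster_firewall_cmds ZONE IP [IP...]
# (hypothetical helper; it prints commands and does not run them)
gluster_firewall_cmds() {
    zone=$1
    shift
    echo "firewall-cmd --permanent --new-zone=$zone"
    for ip in "$@"; do
        # One source entry per cluster node.
        echo "firewall-cmd --permanent --zone=$zone --add-source=$ip"
    done
    echo "firewall-cmd --permanent --zone=$zone --add-service=glusterfs"
    echo "firewall-cmd --reload"
}

# Example: the three nodes of this article.
gluster_firewall_cmds glusternodes 192.168.0.20 192.168.0.30 192.168.0.31
```

After the commands have been reviewed and executed as root, firewall-cmd --zone=glusternodes --list-all should list the three sources and the glusterfs service.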

STEP 3) Add peers to the GlusterFS cluster and create a 3-node replica volume.

gluster peer probe glnode2

gluster peer probe glnode3

gluster volume create VOL1 replica 3 transport tcp glnode1:/mnt/storage/gluster/brick glnode2:/mnt/storage/gluster/brick glnode3:/mnt/storage/gluster/brick

gluster volume start VOL1
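The create command repeats the same brick path for every node, and the replica count must match the number of bricks. A minimal sketch that assembles the command line from a list of brick hostnames is shown below; gluster_create_cmd is a hypothetical helper, and it assumes every node uses the same brick path, as in this article. It prints the command rather than executing it.

```shell
#!/bin/sh
# Print a "gluster volume create" command for a pure replica volume.
# Usage: gluster_create_cmd VOLUME BRICK_PATH HOST [HOST...]
# (hypothetical helper; assumes the same brick path on every host)
gluster_create_cmd() {
    volume=$1
    brick_path=$2
    shift 2
    bricks=""
    for host in "$@"; do
        bricks="$bricks $host:$brick_path"
    done
    # After the shift, $# is the number of hosts, i.e. the replica count.
    echo "gluster volume create $volume replica $# transport tcp$bricks"
}

# Example: the volume from this article.
gluster_create_cmd VOL1 /mnt/storage/gluster/brick glnode1 glnode2 glnode3
```

Once the volume is created and started, gluster peer status and gluster volume info VOL1 show the peers and the three bricks.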

The GlusterFS volume can be mounted with:

mount -t glusterfs glnode1:/VOL1 /mnt/VOL1/

And a sample /etc/fstab line:

glnode1:/VOL1 /mnt/VOL1 glusterfs defaults,noatime,direct-io-mode=disable 0 0

Always use the node's own brick hostname when mounting on that node. To mount the volume VOL1 on node1, use glnode1:/VOL1; on node2, use glnode2:/VOL1; and so on.
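Because the mount source differs per node, the /etc/fstab line differs as well. As a sketch, it can be derived from the server hostname, assuming the naming scheme of this article, where the brick hostname is the server hostname with a gl prefix (node1 becomes glnode1). The function name gluster_fstab_line is hypothetical.

```shell
#!/bin/sh
# Print the /etc/fstab line for mounting a volume through the local brick.
# Usage: gluster_fstab_line SERVER_HOSTNAME VOLUME MOUNT_DIR
# (hypothetical helper; assumes brick hostname = "gl" + server hostname)
gluster_fstab_line() {
    server=$1
    volume=$2
    mount_dir=$3
    echo "gl${server}:/${volume} ${mount_dir} glusterfs defaults,noatime,direct-io-mode=disable 0 0"
}

# Example for node2; on a real node the first argument could come from
# the output of "hostname -s".
gluster_fstab_line node2 VOL1 /mnt/VOL1
```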

Bringing down the server's physical interface that is connected to the Internet (i.e. the network interface with the real IP) will not make the GlusterFS brick unavailable to the local mounts and applications.

In a cluster with only replicas, the local application simply continues using the mounted GlusterFS volume (or native GlusterFS clients), relying only on the local Gluster brick until the main Internet connection comes back.
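This behavior depends on the local brick hostname really resolving to the loopback interface on each node. A small sketch of such a check follows; both function names are hypothetical, and it assumes getent is available and that the system's name service configuration consults /etc/hosts.

```shell
#!/bin/sh
# Succeed when the given IPv4 address lies in the loopback range 127.0.0.0/8.
is_loopback_addr() {
    case $1 in
        127.*) return 0 ;;
        *)     return 1 ;;
    esac
}

# Resolve NAME through the system name service and report the result.
# Usage: check_local_brick NAME   (hypothetical helper)
check_local_brick() {
    addr=$(getent hosts "$1" | awk '{ print $1; exit }')
    if [ -z "$addr" ]; then
        echo "$1: does not resolve"
        return 2
    fi
    if is_loopback_addr "$addr"; then
        echo "$1 -> $addr (loopback)"
    else
        echo "$1 -> $addr (NOT loopback)"
        return 1
    fi
}

# Example, to be run on node1:
# check_local_brick glnode1
```

On node1 the check is expected to report a loopback address for glnode1 and real IPs for glnode2 and glnode3.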

Create 3 node replica volume – the whole output

[root@node1 ~]# yum install -y centos-release-gluster

CentOS Stream 8 - AppStream 5.8 MB/s | 6.7 MB 00:01

CentOS Stream 8 - BaseOS 2.0 MB/s | 2.3 MB 00:01

CentOS Stream 8 - Extras 22 kB/s | 9.1 kB 00:00

Dependencies resolved.

============================================================================================================================================

Package Architecture Version Repository Size

============================================================================================================================================

Installing:

centos-release-gluster8 noarch 1.0-1.el8 extras 9.3 k

Installing dependencies:

centos-release-storage-common noarch 2-2.el8 extras 9.4 k

Transaction Summary

============================================================================================================================================

Install 2 Packages

Total download size: 19 k

Installed size: 2.4 k

Downloading Packages:

(1/2): centos-release-gluster8-1.0-1.el8.noarch.rpm 136 kB/s | 9.3 kB 00:00

(2/2): centos-release-storage-common-2-2.el8.noarch.rpm 145 kB/s | 9.4 kB 00:00

--------------------------------------------------------------------------------------------------------------------------------------------

Total 27 kB/s | 19 kB 00:00

warning: /var/cache/dnf/extras-9705a089504ff150/packages/centos-release-gluster8-1.0-1.el8.noarch.rpm: Header V3 RSA/SHA256 Signature, key ID 8483c65d: NOKEY

CentOS Stream 8 - Extras 725 kB/s | 1.6 kB 00:00

Importing GPG key 0x8483C65D:

Userid : "CentOS (CentOS Official Signing Key) <security@centos.org>"

Fingerprint: 99DB 70FA E1D7 CE22 7FB6 4882 05B5 55B3 8483 C65D

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

Key imported successfully

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : centos-release-storage-common-2-2.el8.noarch 1/2

Installing : centos-release-gluster8-1.0-1.el8.noarch 2/2

Running scriptlet: centos-release-gluster8-1.0-1.el8.noarch 2/2

Verifying : centos-release-gluster8-1.0-1.el8.noarch 1/2

Verifying : centos-release-storage-common-2-2.el8.noarch 2/2

Installed:

centos-release-gluster8-1.0-1.el8.noarch centos-release-storage-common-2-2.el8.noarch

Complete!

[root@node1 ~]# yum install -y glusterfs-server

Last metadata expiration check: 0:00:14 ago on Wed Apr 14 13:01:50 2021.

Dependencies resolved.

============================================================================================================================================

Package Architecture Version Repository Size

============================================================================================================================================

Installing:

glusterfs-server x86_64 8.4-1.el8 centos-gluster8 1.4 M

Installing dependencies:

attr x86_64 2.4.48-3.el8 baseos 68 k

device-mapper-event x86_64 8:1.02.175-5.el8 baseos 269 k

device-mapper-event-libs x86_64 8:1.02.175-5.el8 baseos 269 k

device-mapper-persistent-data x86_64 0.8.5-4.el8 baseos 468 k

glusterfs x86_64 8.4-1.el8 centos-gluster8 689 k

glusterfs-cli x86_64 8.4-1.el8 centos-gluster8 214 k

glusterfs-client-xlators x86_64 8.4-1.el8 centos-gluster8 899 k

glusterfs-fuse x86_64 8.4-1.el8 centos-gluster8 171 k

libaio x86_64 0.3.112-1.el8 baseos 33 k

libgfapi0 x86_64 8.4-1.el8 centos-gluster8 125 k

libgfchangelog0 x86_64 8.4-1.el8 centos-gluster8 67 k

libgfrpc0 x86_64 8.4-1.el8 centos-gluster8 89 k

libgfxdr0 x86_64 8.4-1.el8 centos-gluster8 61 k

libglusterd0 x86_64 8.4-1.el8 centos-gluster8 45 k

libglusterfs0 x86_64 8.4-1.el8 centos-gluster8 350 k

lvm2 x86_64 8:2.03.11-5.el8 baseos 1.6 M

lvm2-libs x86_64 8:2.03.11-5.el8 baseos 1.1 M

psmisc x86_64 23.1-5.el8 baseos 151 k

python3-pyxattr x86_64 0.5.3-18.el8 centos-gluster8 35 k

rpcbind x86_64 1.2.5-8.el8 baseos 70 k

userspace-rcu x86_64 0.10.1-4.el8 baseos 101 k

Transaction Summary

============================================================================================================================================

Install 22 Packages

Total download size: 8.2 M

Installed size: 24 M

Downloading Packages:

(1/22): glusterfs-cli-8.4-1.el8.x86_64.rpm 1.1 MB/s | 214 kB 00:00

(2/22): glusterfs-8.4-1.el8.x86_64.rpm 2.6 MB/s | 689 kB 00:00

(3/22): glusterfs-fuse-8.4-1.el8.x86_64.rpm 1.1 MB/s | 171 kB 00:00

(4/22): glusterfs-client-xlators-8.4-1.el8.x86_64.rpm 2.3 MB/s | 899 kB 00:00

(5/22): libgfapi0-8.4-1.el8.x86_64.rpm 1.8 MB/s | 125 kB 00:00

(6/22): libgfchangelog0-8.4-1.el8.x86_64.rpm 755 kB/s | 67 kB 00:00

(7/22): libgfrpc0-8.4-1.el8.x86_64.rpm 756 kB/s | 89 kB 00:00

(8/22): libgfxdr0-8.4-1.el8.x86_64.rpm 579 kB/s | 61 kB 00:00

(9/22): libglusterd0-8.4-1.el8.x86_64.rpm 641 kB/s | 45 kB 00:00

(10/22): glusterfs-server-8.4-1.el8.x86_64.rpm 3.4 MB/s | 1.4 MB 00:00

(11/22): libglusterfs0-8.4-1.el8.x86_64.rpm 1.0 MB/s | 350 kB 00:00

(12/22): python3-pyxattr-0.5.3-18.el8.x86_64.rpm 97 kB/s | 35 kB 00:00

(13/22): attr-2.4.48-3.el8.x86_64.rpm 85 kB/s | 68 kB 00:00

(14/22): device-mapper-event-1.02.175-5.el8.x86_64.rpm 342 kB/s | 269 kB 00:00

(15/22): device-mapper-event-libs-1.02.175-5.el8.x86_64.rpm 338 kB/s | 269 kB 00:00

(16/22): libaio-0.3.112-1.el8.x86_64.rpm 679 kB/s | 33 kB 00:00

(17/22): device-mapper-persistent-data-0.8.5-4.el8.x86_64.rpm 1.5 MB/s | 468 kB 00:00

(18/22): psmisc-23.1-5.el8.x86_64.rpm 1.5 MB/s | 151 kB 00:00

(19/22): rpcbind-1.2.5-8.el8.x86_64.rpm 1.2 MB/s | 70 kB 00:00

(20/22): lvm2-libs-2.03.11-5.el8.x86_64.rpm 3.1 MB/s | 1.1 MB 00:00

(21/22): userspace-rcu-0.10.1-4.el8.x86_64.rpm 474 kB/s | 101 kB 00:00

(22/22): lvm2-2.03.11-5.el8.x86_64.rpm 3.3 MB/s | 1.6 MB 00:00

--------------------------------------------------------------------------------------------------------------------------------------------

Total 2.8 MB/s | 8.2 MB 00:02

warning: /var/cache/dnf/centos-gluster8-ae72c2c38de8ee20/packages/glusterfs-8.4-1.el8.x86_64.rpm: Header V4 RSA/SHA1 Signature, key ID e451e5b5: NOKEY

CentOS-8 - Gluster 8 1.0 MB/s | 1.0 kB 00:00

Importing GPG key 0xE451E5B5:

Userid : "CentOS Storage SIG (http://wiki.centos.org/SpecialInterestGroup/Storage) <security@centos.org>"

Fingerprint: 7412 9C0B 173B 071A 3775 951A D4A2 E50B E451 E5B5

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Storage

Key imported successfully

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : libgfxdr0-8.4-1.el8.x86_64 1/22

Running scriptlet: libgfxdr0-8.4-1.el8.x86_64 1/22

Installing : libglusterfs0-8.4-1.el8.x86_64 2/22

Running scriptlet: libglusterfs0-8.4-1.el8.x86_64 2/22

Installing : libgfrpc0-8.4-1.el8.x86_64 3/22

Running scriptlet: libgfrpc0-8.4-1.el8.x86_64 3/22

Installing : libaio-0.3.112-1.el8.x86_64 4/22

Installing : glusterfs-client-xlators-8.4-1.el8.x86_64 5/22

Installing : device-mapper-event-libs-8:1.02.175-5.el8.x86_64 6/22

Running scriptlet: glusterfs-8.4-1.el8.x86_64 7/22

Installing : glusterfs-8.4-1.el8.x86_64 7/22

Running scriptlet: glusterfs-8.4-1.el8.x86_64 7/22

Installing : libglusterd0-8.4-1.el8.x86_64 8/22

Running scriptlet: libglusterd0-8.4-1.el8.x86_64 8/22

Installing : glusterfs-cli-8.4-1.el8.x86_64 9/22

Installing : device-mapper-event-8:1.02.175-5.el8.x86_64 10/22

Running scriptlet: device-mapper-event-8:1.02.175-5.el8.x86_64 10/22

Installing : lvm2-libs-8:2.03.11-5.el8.x86_64 11/22

Installing : libgfapi0-8.4-1.el8.x86_64 12/22

Running scriptlet: libgfapi0-8.4-1.el8.x86_64 12/22

Installing : device-mapper-persistent-data-0.8.5-4.el8.x86_64 13/22

Installing : lvm2-8:2.03.11-5.el8.x86_64 14/22

Running scriptlet: lvm2-8:2.03.11-5.el8.x86_64 14/22

Installing : libgfchangelog0-8.4-1.el8.x86_64 15/22

Running scriptlet: libgfchangelog0-8.4-1.el8.x86_64 15/22

Installing : userspace-rcu-0.10.1-4.el8.x86_64 16/22

Running scriptlet: userspace-rcu-0.10.1-4.el8.x86_64 16/22

Running scriptlet: rpcbind-1.2.5-8.el8.x86_64 17/22

Installing : rpcbind-1.2.5-8.el8.x86_64 17/22

Running scriptlet: rpcbind-1.2.5-8.el8.x86_64 17/22

Installing : psmisc-23.1-5.el8.x86_64 18/22

Installing : attr-2.4.48-3.el8.x86_64 19/22

Installing : glusterfs-fuse-8.4-1.el8.x86_64 20/22

Installing : python3-pyxattr-0.5.3-18.el8.x86_64 21/22

Installing : glusterfs-server-8.4-1.el8.x86_64 22/22

Running scriptlet: glusterfs-server-8.4-1.el8.x86_64 22/22

Verifying : glusterfs-8.4-1.el8.x86_64 1/22

Verifying : glusterfs-cli-8.4-1.el8.x86_64 2/22

Verifying : glusterfs-client-xlators-8.4-1.el8.x86_64 3/22

Verifying : glusterfs-fuse-8.4-1.el8.x86_64 4/22

Verifying : glusterfs-server-8.4-1.el8.x86_64 5/22

Verifying : libgfapi0-8.4-1.el8.x86_64 6/22

Verifying : libgfchangelog0-8.4-1.el8.x86_64 7/22

Verifying : libgfrpc0-8.4-1.el8.x86_64 8/22

Verifying : libgfxdr0-8.4-1.el8.x86_64 9/22

Verifying : libglusterd0-8.4-1.el8.x86_64 10/22

Verifying : libglusterfs0-8.4-1.el8.x86_64 11/22

Verifying : python3-pyxattr-0.5.3-18.el8.x86_64 12/22

Verifying : attr-2.4.48-3.el8.x86_64 13/22

Verifying : device-mapper-event-8:1.02.175-5.el8.x86_64 14/22

Verifying : device-mapper-event-libs-8:1.02.175-5.el8.x86_64 15/22

Verifying : device-mapper-persistent-data-0.8.5-4.el8.x86_64 16/22

Verifying : libaio-0.3.112-1.el8.x86_64 17/22

Verifying : lvm2-8:2.03.11-5.el8.x86_64 18/22

Verifying : lvm2-libs-8:2.03.11-5.el8.x86_64 19/22

Verifying : psmisc-23.1-5.el8.x86_64 20/22

Verifying : rpcbind-1.2.5-8.el8.x86_64 21/22

Verifying : userspace-rcu-0.10.1-4.el8.x86_64 22/22

Installed:

attr-2.4.48-3.el8.x86_64 device-mapper-event-8:1.02.175-5.el8.x86_64

device-mapper-event-libs-8:1.02.175-5.el8.x86_64 device-mapper-persistent-data-0.8.5-4.el8.x86_64

glusterfs-8.4-1.el8.x86_64 glusterfs-cli-8.4-1.el8.x86_64

glusterfs-client-xlators-8.4-1.el8.x86_64 glusterfs-fuse-8.4-1.el8.x86_64

glusterfs-server-8.4-1.el8.x86_64 libaio-0.3.112-1.el8.x86_64

libgfapi0-8.4-1.el8.x86_64 libgfchangelog0-8.4-1.el8.x86_64

libgfrpc0-8.4-1.el8.x86_64 libgfxdr0-8.4-1.el8.x86_64

libglusterd0-8.4-1.el8.x86_64 libglusterfs0-8.4-1.el8.x86_64

lvm2-8:2.03.11-5.el8.x86_64 lvm2-libs-8:2.03.11-5.el8.x86_64

psmisc-23.1-5.el8.x86_64 python3-pyxattr-0.5.3-18.el8.x86_64

rpcbind-1.2.5-8.el8.x86_64 userspace-rcu-0.10.1-4.el8.x86_64

Complete!

[root@node1 ~]# systemctl start glusterd

[root@node1 ~]# systemctl status glusterd

● glusterd.service - GlusterFS, a clustered file-system server

Loaded: loaded (/usr/lib/systemd/system/glusterd.service; enabled; vendor preset: enabled)

Active: active (running) since Wed 2021-04-14 13:06:39 UTC; 3s ago

Docs: man:glusterd(8)

Process: 10151 ExecStart=/usr/sbin/glusterd -p /var/run/glusterd.pid --log-level $LOG_LEVEL $GLUSTERD_OPTIONS (code=exited, status=0/SUCC>

Main PID: 10152 (glusterd)

Tasks: 9 (limit: 11409)

Memory: 5.6M

CGroup: /system.slice/glusterd.service

└─10152 /usr/sbin/glusterd -p /var/run/glusterd.pid --log-level INFO

Apr 14 13:06:39 node1 systemd[1]: Starting GlusterFS, a clustered file-system server...

Apr 14 13:06:39 node1 systemd[1]: Started GlusterFS, a clustered file-system server.

[root@node1 ~]# tail -n 3 /etc/hosts

127.0.0.1 glnode1

192.168.0.30 glnode2

192.168.0.31 glnode3

[root@node1 ~]# ping glnode1

PING glnode1 (127.0.0.1) 56(84) bytes of data.

64 bytes from localhost (127.0.0.1): icmp_seq=1 ttl=64 time=0.058 ms

64 bytes from localhost (127.0.0.1): icmp_seq=2 ttl=64 time=0.050 ms

^C

--- glnode1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1016ms

rtt min/avg/max/mdev = 0.050/0.054/0.058/0.004 ms

[root@node1 ~]# mkdir -p /mnt/storage/gluster/brick

[root@node1 ~]# firewall-cmd --permanent --new-zone=glusternodes

success

[root@node1 ~]# firewall-cmd --permanent --zone=glusternodes --add-source=192.168.0.20

success

[root@node1 ~]# firewall-cmd --permanent --zone=glusternodes --add-source=192.168.0.30

success

[root@node1 ~]# firewall-cmd --permanent --zone=glusternodes --add-source=192.168.0.31

success

[root@node1 ~]# firewall-cmd --permanent --zone=glusternodes --add-service=glusterfs

success

[root@node1 ~]# firewall-cmd --reload

success

[root@node1 ~]# firewall-cmd --zone=glusternodes --list-all

glusternodes (active)

target: default

icmp-block-inversion: no

interfaces:

sources: 192.168.0.20 192.168.0.30 192.168.0.31

services: glusterfs

ports:

protocols:

forward: no

masquerade: no

forward-ports:

source-ports:

icmp-blocks:

rich rules:

[root@node1 ~]# gluster peer probe glnode2

peer probe: success

[root@node1 ~]# gluster peer probe glnode3

peer probe: success

[root@node1 ~]# gluster peer status

Number of Peers: 2

Hostname: glnode2

Uuid: ab63f0a0-0a72-4fcd-9f34-b88040d1a8e3

State: Peer in Cluster (Connected)

Hostname: glnode3

Uuid: 439ccd19-a95e-427c-ab72-6b65effcbe06

State: Peer in Cluster (Connected)

[root@node1 ~]# gluster volume create VOL1 replica 3 transport tcp glnode1:/mnt/storage/gluster/brick glnode2:/mnt/storage/gluster/brick glnode3:/mnt/storage/gluster/brick

volume create: VOL1: success: please start the volume to access data

[root@node1 ~]# gluster volume start VOL1

volume start: VOL1: success

[root@node1 ~]# gluster volume info VOL1

Volume Name: VOL1

Type: Replicate

Volume ID: 7da7bb05-2c9b-464b-b3f9-8940eeb5b0bb

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 3 = 3

Transport-type: tcp

Bricks:

Brick1: glnode1:/mnt/storage/gluster/brick

Brick2: glnode2:/mnt/storage/gluster/brick

Brick3: glnode3:/mnt/storage/gluster/brick

Options Reconfigured:

storage.fips-mode-rchecksum: on

transport.address-family: inet

nfs.disable: on

performance.client-io-threads: off

[root@node1 storage]# mkdir -p /mnt/VOL1

[root@node1 storage]# tail -n 1 /etc/fstab

glnode1:/VOL1 /mnt/VOL1 glusterfs defaults,noatime,direct-io-mode=disable 0 0

[root@node1 storage]# mount /mnt/VOL1/

[root@node1 storage]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 892M 0 892M 0% /dev

tmpfs 909M 0 909M 0% /dev/shm

tmpfs 909M 8.5M 901M 1% /run

tmpfs 909M 0 909M 0% /sys/fs/cgroup

/dev/sda1 95G 1.5G 89G 2% /

/dev/sda3 976M 148M 762M 17% /boot

tmpfs 182M 0 182M 0% /run/user/0

glnode1:/VOL1 95G 2.4G 89G 3% /mnt/VOL1

[root@node1 storage]# ls -altr /mnt/VOL1/

total 8

drwxr-xr-x. 3 root root 4096 Apr 14 13:31 .

drwxr-xr-x. 4 root root 4096 Apr 14 13:34 ..