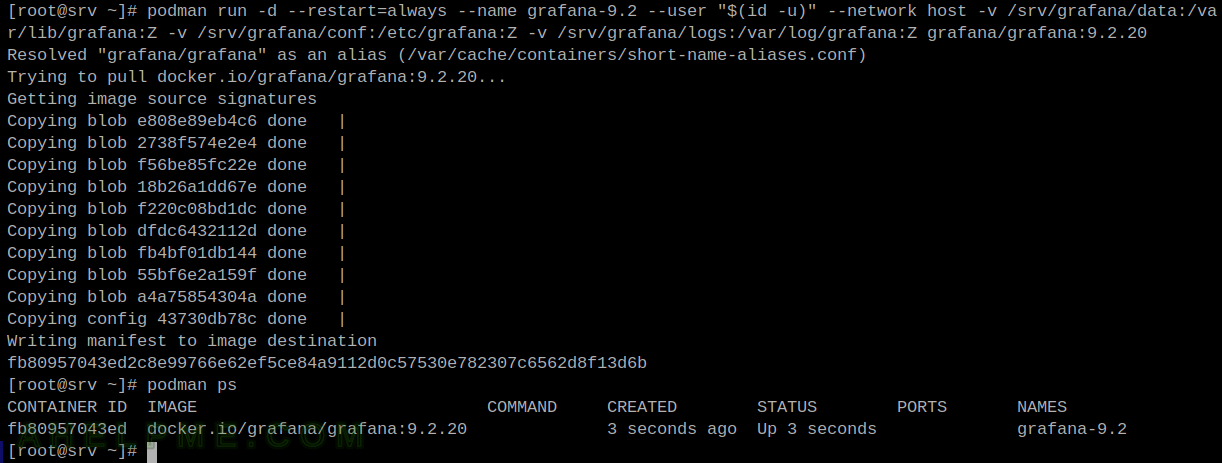

This article is a follow-up to Run podman/docker InfluxDB 1.8 container to collect statistics from collectd, where the time series database InfluxDB stores the data. Running Grafana in another container is an easy and lightweight way to visualize the collected data.

Containerizing the Grafana service is simple enough with docker/podman, but there are several tips and steps to consider before doing it. These steps significantly ease the maintainer’s life: upgrading, moving to another server, or backing up important data becomes really easy. Just stop the container and start another one with the same options except for the name and the container version.

Here are the important points to mind when running Grafana 9 in a docker/podman container:

Keep on reading!

Tag: docker

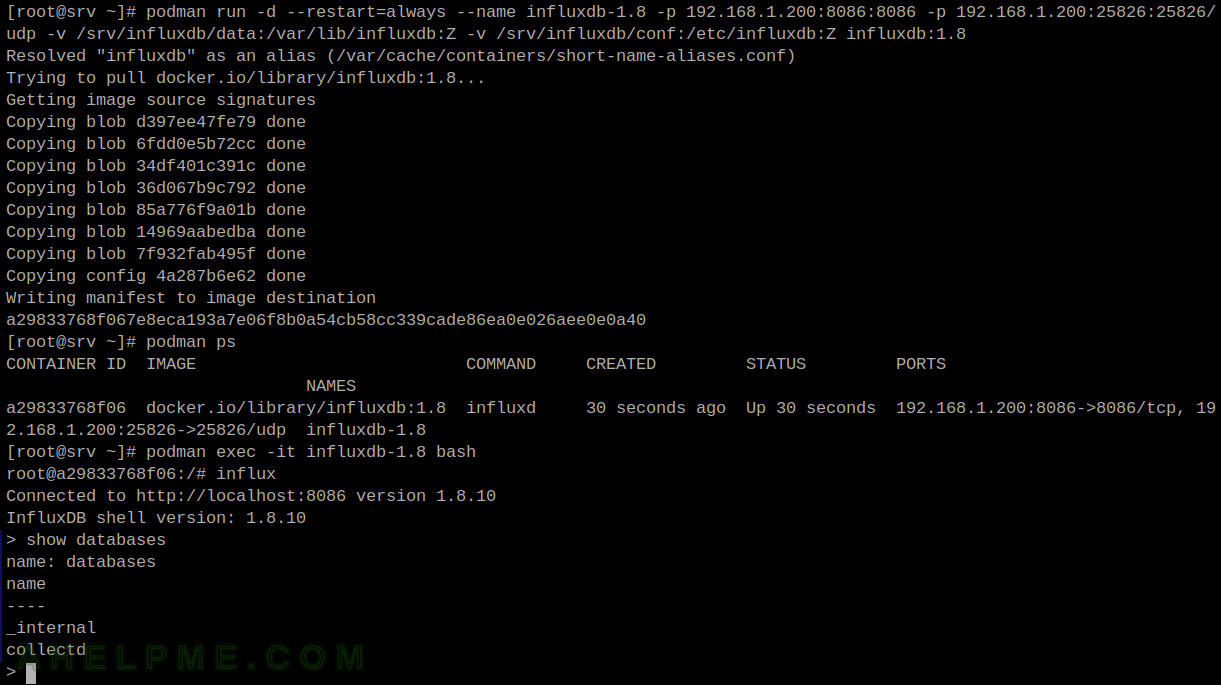

Run podman/docker InfluxDB 1.8 container to collect statistics from collectd

Yet another article on the topic of the InfluxDB 1.8 and collectd gathering statistics, in continuation of the articles Monitor and analyze with Grafana, influxdb 1.8 and collectd under Ubuntu 22.04 LTS and Monitor and analyze with Grafana, influxdb 1.8 and collectd under CentOS Stream 9. This time, the InfluxDB runs in a container created with podman or docker software.

Here are the important points to mind when running InfluxDB 1.8 in a docker/podman container:

Keep on reading!

Run a docker container with bigger storage

By default, Docker runs containers with 10G of storage (this is the default base size of the devicemapper storage driver, which is the driver in use in the example below). In most cases that is enough, but to run one specific container with bigger storage there is an option for the docker command:

docker run --storage-opt size=50G

The option size=50G sets the storage size only for the container created by this run command!

Run an Ubuntu 22.04 Docker container with 50G root storage:

    root@srv ~ # docker run --storage-opt size=50G -it ubuntu:22.04 bash
    Unable to find image 'ubuntu:22.04' locally
    22.04: Pulling from library/ubuntu
    e96e057aae67: Pull complete
    Digest: sha256:4b1d0c4a2d2aaf63b37111f34eb9fa89fa1bf53dd6e4ca954d47caebca4005c2
    Status: Downloaded newer image for ubuntu:22.04
    root@4caab8c61157:/# df -h
    Filesystem                                                                                        Size  Used Avail Use% Mounted on
    /dev/mapper/docker-253:0-39459726-2f2d655687e5bd39620a2a083960ac969d8163b806152765a1fc166f0a82d3d9   50G  170M   50G   1% /
    tmpfs                                                                                              64M     0   64M   0% /dev
    tmpfs                                                                                             7.8G     0  7.8G   0% /sys/fs/cgroup
    shm                                                                                                64M     0   64M   0% /dev/shm
    /dev/mapper/map-99f55d81-4132-42d4-9515-33d8cc11d3e2                                              3.6T  1.5T  2.2T  40% /etc/hosts
    tmpfs                                                                                             7.8G     0  7.8G   0% /proc/asound
    tmpfs                                                                                             7.8G     0  7.8G   0% /proc/acpi
    tmpfs                                                                                             7.8G     0  7.8G   0% /proc/scsi
    tmpfs

It’s worth mentioning that the option “--storage-opt size=50G” is different from “--storage-opt dm.basesize=50G”. The first one is an argument to the docker command-line utility and applies to a single container. The second one is used with the dockerd daemon to change the default Docker behavior from 10G to 50G storage. Note that neither option can change the storage size of an already started container.
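The difference between the two options can be sketched as follows. This is a minimal illustration, assuming the devicemapper storage driver; CONF_DIR is a hypothetical scratch directory standing in for /etc/docker, so nothing on the host is touched, and the per-container command is only printed, not executed.

```shell
#!/bin/sh
# Sketch only: CONF_DIR is a scratch directory (assumption), not /etc/docker.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

# Daemon-wide default: the dockerd equivalent of --storage-opt dm.basesize=50G.
cat > "$CONF_DIR/daemon.json" <<'EOF'
{
  "storage-driver": "devicemapper",
  "storage-opts": ["dm.basesize=50G"]
}
EOF

# Per-container variant from the article: applies to this one container only.
PER_CONTAINER_CMD="docker run --storage-opt size=50G -it ubuntu:22.04 bash"

echo "daemon config written to $CONF_DIR/daemon.json"
echo "per-container command: $PER_CONTAINER_CMD"
```

On a real host the file would normally be /etc/docker/daemon.json, and dockerd has to be restarted to pick it up.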

Starting up standalone ClickHouse server with basic configuration in docker

ClickHouse is a powerful column-oriented database written in C++, which generates analytical and statistical reports in real-time using SQL statements!

It supports on-the-fly compression of the data, cluster setup of replicas and shards instances over thousands of servers, and multi-master cluster modes.

ClickHouse is an ideal instrument for weblogs and for easily generating real-time reports from them! It is also a good fit for storing data about user behaviour and interactions with web sites or applications.

The easiest way to run a ClickHouse instance is within a docker/podman container. The docker hub hosts an official container image maintained by the ClickHouse developers.

And this article will show how to run a ClickHouse standalone server, how to manage the ClickHouse configuration features, and what obstacles the user may encounter.

Here are some key points:

- Main server configuration file is config.xml (in /etc/clickhouse-server/config.xml) – all server’s settings like listening port, ports, logger, remote access, cluster setup (shards and replicas), system settings (time zone, umask, and more), monitoring, query logs, dictionaries, compressions and so on. Check out the server settings: https://clickhouse.com/docs/en/operations/server-configuration-parameters/settings/

- The main user configuration file is users.xml (in /etc/clickhouse-server/users.xml), which specifies profiles, users, passwords, ACL, quotas, and so on. It also supports SQL-driven user configuration, check out the available settings and users’ options – https://clickhouse.com/docs/en/operations/settings/settings-users/

- By default, there is a user named default with administrative privileges and without a password, which can connect to the server only from the localhost.

- Do not edit the main configuration file(s). Some options may get deprecated and removed, and a modified configuration file may become incompatible with new releases.

- Every configuration setting can be overridden with configuration files in config.d/. A good practice is to have one configuration file per setting, which overrides the default one in config.xml. For example:

    root@srv ~ # ls -al /etc/clickhouse-server/config.d/
    total 48
    drwxr-xr-x 2 root root 4096 Nov 22 04:40 .
    drwxr-xr-x 4 root root 4096 Nov 22 04:13 ..
    -rw-r--r-- 1 root root  343 Sep 16  2021 00-path.xml
    -rw-r--r-- 1 root root   58 Nov 22 04:40 01-listen.xml
    -rw-r--r-- 1 root root  145 Feb  3  2020 02-log_to_console.xml

There are three configuration files, which override the default paths (00-path.xml), change the default listen setting (01-listen.xml), and log to console (02-log_to_console.xml). Here is what to expect in 00-path.xml:

    <yandex>
        <path replace="replace">/mnt/storage/ClickHouse/var/</path>
        <tmp_path replace="replace">/mnt/storage/ClickHouse/tmp/</tmp_path>
        <user_files_path replace="replace">/mnt/storage/ClickHouse/var/user_files/</user_files_path>
        <format_schema_path replace="replace">/mnt/storage/ClickHouse/format_schemas/</format_schema_path>
    </yandex>

So the default settings path, tmp_path, user_files_path and format_schema_path in config.xml will be replaced with the above values.

To open ClickHouse to the outside world, i.e. to listen on 0.0.0.0, just include a configuration file like 01-listen.xml:

    <yandex>
        <listen_host>0.0.0.0</listen_host>
    </yandex>

- When all additional (including user) configuration files are processed, the result is written to the preprocessed_configs/ directory in the var directory, for example /var/lib/clickhouse/preprocessed_configs/

- The ClickHouse server periodically checks the configuration directories for changes. On a change in the configuration files, new processed ones are generated, and in most cases the changes are loaded on-the-fly. Still, there are settings which require a manual restart of the main process. Check out the manual for more details.

- By default, the logger is at the trace log level, which may generate an enormous amount of logging data. So just change the setting to something more meaningful for production, like the warning level (in config.d/04-part_log.xml):

    <yandex>
        <logger>
            <level>warning</level>
        </logger>
    </yandex>

- ClickHouse default ports:

- 8123 is the HTTP client port (8443 is the HTTPS). The client can connect with curl or wget or other command-line HTTP(S) clients to manage and insert data in databases and tables.

- 9000 is the native TCP/IP client port (9440 is the TLS enabled port for this service) to manage and insert data in databases and tables.

- 9004 is the MySQL protocol port. ClickHouse supports the MySQL wire protocol, and it can be enabled with the mysql_port setting:

    <yandex>
        <mysql_port>9004</mysql_port>
    </yandex>

- 9009 is the port which ClickHouse uses to exchange data between ClickHouse servers when using a cluster setup and replicas/shards.

- There is a flag directory, in which files with special names may instruct ClickHouse to process commands. For example, creating a blank file with the name: /var/lib/clickhouse/flags/force_restore_data will instruct the ClickHouse to begin a restore procedure for the server.

- A good practice is to back up the whole configuration directory, even though the main configuration file(s) are unchanged and in their original state.

- The SQL commands which are supported by the ClickHouse server: https://clickhouse.com/docs/en/sql-reference/ and https://clickhouse.com/docs/en/sql-reference/statements/

- The basic and fundamental table type is MergeTree, which is designed for inserting a very large amount of data into a table – https://clickhouse.com/docs/en/engines/table-engines/mergetree-family/mergetree/

- Bear in mind, ClickHouse supports SQL syntax and some of the SQL statements, but it is not designed for frequent row-level UPDATE and DELETE statements. Changing data is possible only through heavy, asynchronous ALTER TABLE ... UPDATE/DELETE mutations. The main idea behind ClickHouse is not to change the data, but only to add it!

- Batch INSERTs are the preferred way of inserting data! In fact, the ClickHouse manual recommends no more than about one INSERT per second.
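The config.d/ override approach from the key points above can be sketched like this. It is only an illustration: CONF_D is a hypothetical scratch directory standing in for /etc/clickhouse-server/config.d/, and the file names follow the ones mentioned in the list.

```shell
#!/bin/sh
# Sketch only: CONF_D is a scratch directory (assumption) standing in for
# /etc/clickhouse-server/config.d/ on the host or inside the container.
CONF_D="${CONF_D:-$(mktemp -d)}"

# One file per overridden setting: listen on all interfaces.
cat > "$CONF_D/01-listen.xml" <<'EOF'
<yandex>
    <listen_host>0.0.0.0</listen_host>
</yandex>
EOF

# One file per overridden setting: lower the log level from trace to warning.
cat > "$CONF_D/04-part_log.xml" <<'EOF'
<yandex>
    <logger>
        <level>warning</level>
    </logger>
</yandex>
EOF

ls "$CONF_D"
```

The generated files could then be mapped into the container’s /etc/clickhouse-server/config.d/ directory, leaving the main config.xml untouched.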

Easy install the latest docker-compose with pip3 under Ubuntu

At present, the latest docker-compose versions that can be installed from the distribution packages under Ubuntu 18, 20, and 21 are 1.25 and 1.27. There may be significant changes included in the latest upstream versions, and if one wants to install them there are two options:

- Manual installation of the release published by Docker on GitHub – https://github.com/docker/compose/releases

- Install it from pip3.

For example, depends_on.service.condition: service_healthy is added with version 1.28. Using this new feature, it is fairly easy to wait for one docker container (service) to become healthy before starting another container.
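A minimal sketch of such a dependency is shown here. The service names db and app, the images, the password, and the health check command are all assumptions chosen for illustration, and PROJECT_DIR is a hypothetical scratch directory; the file is only written, not started.

```shell
#!/bin/sh
# Sketch only: PROJECT_DIR is a scratch directory (assumption).
PROJECT_DIR="${PROJECT_DIR:-$(mktemp -d)}"

# "app" is started only after the health check of "db" reports healthy.
cat > "$PROJECT_DIR/docker-compose.yml" <<'EOF'
services:
  db:
    image: mysql:8
    environment:
      MYSQL_ROOT_PASSWORD: example
    healthcheck:
      # The service counts as healthy once the server answers a ping.
      test: ["CMD", "mysqladmin", "ping", "-h", "127.0.0.1", "-pexample"]
      interval: 5s
      timeout: 3s
      retries: 20
  app:
    image: ubuntu:22.04
    command: ["sleep", "infinity"]
    depends_on:
      db:
        condition: service_healthy
EOF

echo "compose file written to $PROJECT_DIR/docker-compose.yml"
```

Running docker-compose up in that directory would then hold back app until db passes its health check.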

Here is how easy it is to install and to have the latest stable docker-compose version, which is 1.29.2 at the writing of this article:

STEP 1) Update and upgrade.

Always do this step before installing new software.

    sudo apt update
    sudo apt upgrade -y

STEP 2) Install pip3 and docker.

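pip3 is the package installer for Python 3. docker-compose also needs the Docker software itself. Note that under Ubuntu the Docker engine is packaged as docker.io; the package named docker is an unrelated one, which pulls in wmdocker (visible in the console output at the end of this article).

    apt install python3-pip docker.io
    systemctl start docker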

STEP 3) Install docker-compose using pip3.

pip3 install docker-compose

And here is what a version command prints:

    root@srv:~# docker-compose version
    docker-compose version 1.29.2, build unknown
    docker-py version: 5.0.2
    CPython version: 3.8.10
    OpenSSL version: OpenSSL 1.1.1f 31 Mar 2020
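Since service_healthy needs at least version 1.28, a script may want to check the installed version first. Here is a small sketch, assuming a sort that supports -V (GNU coreutils); the helper version_ge is hypothetical, and the installed version is hard-coded so the example does not depend on docker-compose being present.

```shell
#!/bin/sh
# version_ge A B: succeeds when version A is greater than or equal to version B.
# Relies on "sort -V" (version sort) from GNU coreutils.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# INSTALLED is hard-coded to keep the sketch self-contained (assumption);
# on a real system it could come from: docker-compose version --short
INSTALLED="1.29.2"
REQUIRED="1.28.0"

if version_ge "$INSTALLED" "$REQUIRED"; then
    RESULT="ok"
else
    RESULT="too old"
fi
echo "docker-compose $INSTALLED: $RESULT"
```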

Just to note, installing packages with tools other than apt may lead to future conflicts!

The whole console output of the pip3 installing docker-compose

root@srv:~# apt update

Get:1 http://archive.ubuntu.com/ubuntu focal InRelease [265 kB]

Get:2 http://archive.ubuntu.com/ubuntu focal-updates InRelease [114 kB]

Get:3 http://archive.ubuntu.com/ubuntu focal-backports InRelease [101 kB]

Get:4 http://security.ubuntu.com/ubuntu focal-security InRelease [114 kB]

Get:5 http://archive.ubuntu.com/ubuntu focal/multiverse amd64 Packages [177 kB]

Get:6 http://archive.ubuntu.com/ubuntu focal/restricted amd64 Packages [33.4 kB]

Get:7 http://archive.ubuntu.com/ubuntu focal/universe amd64 Packages [11.3 MB]

Get:8 http://archive.ubuntu.com/ubuntu focal/main amd64 Packages [1275 kB]

Get:9 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 Packages [1514 kB]

Get:10 http://archive.ubuntu.com/ubuntu focal-updates/multiverse amd64 Packages [33.3 kB]

Get:11 http://archive.ubuntu.com/ubuntu focal-updates/universe amd64 Packages [1069 kB]

Get:12 http://archive.ubuntu.com/ubuntu focal-updates/restricted amd64 Packages [575 kB]

Get:13 http://archive.ubuntu.com/ubuntu focal-backports/universe amd64 Packages [6324 B]

Get:14 http://archive.ubuntu.com/ubuntu focal-backports/main amd64 Packages [2668 B]

Get:15 http://security.ubuntu.com/ubuntu focal-security/main amd64 Packages [1070 kB]

Get:16 http://security.ubuntu.com/ubuntu focal-security/universe amd64 Packages [790 kB]

Get:17 http://security.ubuntu.com/ubuntu focal-security/multiverse amd64 Packages [30.1 kB]

Get:18 http://security.ubuntu.com/ubuntu focal-security/restricted amd64 Packages [525 kB]

Fetched 19.0 MB in 1s (16.7 MB/s)

Reading package lists... Done

Building dependency tree

Reading state information... Done

All packages are up to date.

root@srv:~# apt upgrade -y

Reading package lists... Done

Building dependency tree

Reading state information... Done

Calculating upgrade... Done

0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.

root@srv:~# apt install -y python3-pip docker

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following additional packages will be installed:

binutils binutils-common binutils-x86-64-linux-gnu build-essential ca-certificates cpp cpp-9 dirmngr dpkg-dev fakeroot file g++ g++-9 gcc gcc-9 gcc-9-base gnupg gnupg-l10n gnupg-utils

gpg gpg-agent gpg-wks-client gpg-wks-server gpgconf gpgsm libalgorithm-diff-perl libalgorithm-diff-xs-perl libalgorithm-merge-perl libasan5 libasn1-8-heimdal libassuan0 libatomic1

libbinutils libbsd0 libc-dev-bin libc6-dev libcc1-0 libcrypt-dev libctf-nobfd0 libctf0 libdpkg-perl libexpat1 libexpat1-dev libfakeroot libfile-fcntllock-perl libgcc-9-dev

libgdbm-compat4 libgdbm6 libglib2.0-0 libglib2.0-data libgomp1 libgssapi3-heimdal libhcrypto4-heimdal libheimbase1-heimdal libheimntlm0-heimdal libhx509-5-heimdal libicu66 libisl22

libitm1 libkrb5-26-heimdal libksba8 libldap-2.4-2 libldap-common liblocale-gettext-perl liblsan0 libmagic-mgc libmagic1 libmpc3 libmpdec2 libmpfr6 libnpth0 libperl5.30 libpython3-dev

libpython3-stdlib libpython3.8 libpython3.8-dev libpython3.8-minimal libpython3.8-stdlib libquadmath0 libreadline8 libroken18-heimdal libsasl2-2 libsasl2-modules libsasl2-modules-db

libsqlite3-0 libssl1.1 libstdc++-9-dev libtsan0 libubsan1 libwind0-heimdal libx11-6 libx11-data libxau6 libxcb1 libxdmcp6 libxml2 linux-libc-dev make manpages manpages-dev mime-support

netbase openssl patch perl perl-modules-5.30 pinentry-curses python-pip-whl python3 python3-dev python3-distutils python3-lib2to3 python3-minimal python3-pkg-resources

python3-setuptools python3-wheel python3.8 python3.8-dev python3.8-minimal readline-common shared-mime-info tzdata wmdocker xdg-user-dirs xz-utils zlib1g-dev

Suggested packages:

binutils-doc cpp-doc gcc-9-locales dbus-user-session libpam-systemd pinentry-gnome3 tor debian-keyring g++-multilib g++-9-multilib gcc-9-doc gcc-multilib autoconf automake libtool flex

bison gdb gcc-doc gcc-9-multilib parcimonie xloadimage scdaemon glibc-doc git bzr gdbm-l10n libsasl2-modules-gssapi-mit | libsasl2-modules-gssapi-heimdal libsasl2-modules-ldap

libsasl2-modules-otp libsasl2-modules-sql libstdc++-9-doc make-doc man-browser ed diffutils-doc perl-doc libterm-readline-gnu-perl | libterm-readline-perl-perl libb-debug-perl

liblocale-codes-perl pinentry-doc python3-doc python3-tk python3-venv python-setuptools-doc python3.8-venv python3.8-doc binfmt-support readline-doc

The following NEW packages will be installed:

binutils binutils-common binutils-x86-64-linux-gnu build-essential ca-certificates cpp cpp-9 dirmngr docker dpkg-dev fakeroot file g++ g++-9 gcc gcc-9 gcc-9-base gnupg gnupg-l10n

gnupg-utils gpg gpg-agent gpg-wks-client gpg-wks-server gpgconf gpgsm libalgorithm-diff-perl libalgorithm-diff-xs-perl libalgorithm-merge-perl libasan5 libasn1-8-heimdal libassuan0

libatomic1 libbinutils libbsd0 libc-dev-bin libc6-dev libcc1-0 libcrypt-dev libctf-nobfd0 libctf0 libdpkg-perl libexpat1 libexpat1-dev libfakeroot libfile-fcntllock-perl libgcc-9-dev

libgdbm-compat4 libgdbm6 libglib2.0-0 libglib2.0-data libgomp1 libgssapi3-heimdal libhcrypto4-heimdal libheimbase1-heimdal libheimntlm0-heimdal libhx509-5-heimdal libicu66 libisl22

libitm1 libkrb5-26-heimdal libksba8 libldap-2.4-2 libldap-common liblocale-gettext-perl liblsan0 libmagic-mgc libmagic1 libmpc3 libmpdec2 libmpfr6 libnpth0 libperl5.30 libpython3-dev

libpython3-stdlib libpython3.8 libpython3.8-dev libpython3.8-minimal libpython3.8-stdlib libquadmath0 libreadline8 libroken18-heimdal libsasl2-2 libsasl2-modules libsasl2-modules-db

libsqlite3-0 libssl1.1 libstdc++-9-dev libtsan0 libubsan1 libwind0-heimdal libx11-6 libx11-data libxau6 libxcb1 libxdmcp6 libxml2 linux-libc-dev make manpages manpages-dev mime-support

netbase openssl patch perl perl-modules-5.30 pinentry-curses python-pip-whl python3 python3-dev python3-distutils python3-lib2to3 python3-minimal python3-pip python3-pkg-resources

python3-setuptools python3-wheel python3.8 python3.8-dev python3.8-minimal readline-common shared-mime-info tzdata wmdocker xdg-user-dirs xz-utils zlib1g-dev

.....

.....

0 upgraded, 128 newly installed, 0 to remove and 0 not upgraded.

Need to get 84.6 MB of archives.

After this operation, 370 MB of additional disk space will be used.

Processing triggers for ca-certificates (20210119~20.04.1) ...

Updating certificates in /etc/ssl/certs...

0 added, 0 removed; done.

Running hooks in /etc/ca-certificates/update.d...

done.

root@srv:~# pip3 install docker-compose

Collecting docker-compose

Downloading docker_compose-1.29.2-py2.py3-none-any.whl (114 kB)

|████████████████████████████████| 114 kB 12.4 MB/s

Collecting requests<3,>=2.20.0

Downloading requests-2.26.0-py2.py3-none-any.whl (62 kB)

|████████████████████████████████| 62 kB 355 kB/s

Collecting jsonschema<4,>=2.5.1

Downloading jsonschema-3.2.0-py2.py3-none-any.whl (56 kB)

|████████████████████████████████| 56 kB 3.4 MB/s

Collecting websocket-client<1,>=0.32.0

Downloading websocket_client-0.59.0-py2.py3-none-any.whl (67 kB)

|████████████████████████████████| 67 kB 3.3 MB/s

Collecting texttable<2,>=0.9.0

Downloading texttable-1.6.4-py2.py3-none-any.whl (10 kB)

Collecting PyYAML<6,>=3.10

Downloading PyYAML-5.4.1-cp38-cp38-manylinux1_x86_64.whl (662 kB)

|████████████████████████████████| 662 kB 76.9 MB/s

Collecting dockerpty<1,>=0.4.1

Downloading dockerpty-0.4.1.tar.gz (13 kB)

Collecting docker[ssh]>=5

Downloading docker-5.0.2-py2.py3-none-any.whl (145 kB)

|████████████████████████████████| 145 kB 119.5 MB/s

Collecting distro<2,>=1.5.0

Downloading distro-1.6.0-py2.py3-none-any.whl (19 kB)

Collecting docopt<1,>=0.6.1

Downloading docopt-0.6.2.tar.gz (25 kB)

Collecting python-dotenv<1,>=0.13.0

Downloading python_dotenv-0.19.0-py2.py3-none-any.whl (17 kB)

Collecting urllib3<1.27,>=1.21.1

Downloading urllib3-1.26.6-py2.py3-none-any.whl (138 kB)

|████████████████████████████████| 138 kB 141.1 MB/s

Collecting charset-normalizer~=2.0.0; python_version >= "3"

Downloading charset_normalizer-2.0.4-py3-none-any.whl (36 kB)

Collecting certifi>=2017.4.17

Downloading certifi-2021.5.30-py2.py3-none-any.whl (145 kB)

|████████████████████████████████| 145 kB 133.3 MB/s

Collecting idna<4,>=2.5; python_version >= "3"

Downloading idna-3.2-py3-none-any.whl (59 kB)

|████████████████████████████████| 59 kB 1.6 MB/s

Collecting pyrsistent>=0.14.0

Downloading pyrsistent-0.18.0-cp38-cp38-manylinux1_x86_64.whl (118 kB)

|████████████████████████████████| 118 kB 131.3 MB/s

Requirement already satisfied: setuptools in /usr/lib/python3/dist-packages (from jsonschema<4,>=2.5.1->docker-compose) (45.2.0)

Collecting six>=1.11.0

Downloading six-1.16.0-py2.py3-none-any.whl (11 kB)

Collecting attrs>=17.4.0

Downloading attrs-21.2.0-py2.py3-none-any.whl (53 kB)

|████████████████████████████████| 53 kB 899 kB/s

Collecting paramiko>=2.4.2; extra == "ssh"

Downloading paramiko-2.7.2-py2.py3-none-any.whl (206 kB)

|████████████████████████████████| 206 kB 147.7 MB/s

Collecting cryptography>=2.5

Downloading cryptography-3.4.8-cp36-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (3.2 MB)

|████████████████████████████████| 3.2 MB 147.4 MB/s

Collecting bcrypt>=3.1.3

Downloading bcrypt-3.2.0-cp36-abi3-manylinux2010_x86_64.whl (63 kB)

|████████████████████████████████| 63 kB 1.4 MB/s

Collecting pynacl>=1.0.1

Downloading PyNaCl-1.4.0-cp35-abi3-manylinux1_x86_64.whl (961 kB)

|████████████████████████████████| 961 kB 139.4 MB/s

Collecting cffi>=1.12

Downloading cffi-1.14.6-cp38-cp38-manylinux1_x86_64.whl (411 kB)

|████████████████████████████████| 411 kB 84.0 MB/s

Collecting pycparser

Downloading pycparser-2.20-py2.py3-none-any.whl (112 kB)

|████████████████████████████████| 112 kB 140.9 MB/s

Building wheels for collected packages: dockerpty, docopt

Building wheel for dockerpty (setup.py) ... done

Created wheel for dockerpty: filename=dockerpty-0.4.1-py3-none-any.whl size=16604 sha256=d6f2d3d74bad523b1a308a952176a1db84cb604611235c1a5ae1c936cefe7889

Stored in directory: /root/.cache/pip/wheels/1a/58/0d/9916bf3c72e224e038beb88f669f68b61d2f274df498ff87c6

Building wheel for docopt (setup.py) ... done

Created wheel for docopt: filename=docopt-0.6.2-py2.py3-none-any.whl size=13704 sha256=f8c389703e63ff7ec3734b240ba8d62c8f8bd99f3b05ccdcb0de1397aa523655

Stored in directory: /root/.cache/pip/wheels/56/ea/58/ead137b087d9e326852a851351d1debf4ada529b6ac0ec4e8c

Successfully built dockerpty docopt

Installing collected packages: urllib3, charset-normalizer, certifi, idna, requests, pyrsistent, six, attrs, jsonschema, websocket-client, texttable, PyYAML, dockerpty, pycparser, cffi, cryptography, bcrypt, pynacl, paramiko, docker, distro, docopt, python-dotenv, docker-compose

Successfully installed PyYAML-5.4.1 attrs-21.2.0 bcrypt-3.2.0 certifi-2021.5.30 cffi-1.14.6 charset-normalizer-2.0.4 cryptography-3.4.8 distro-1.6.0 docker-5.0.2 docker-compose-1.29.2 dockerpty-0.4.1 docopt-0.6.2 idna-3.2 jsonschema-3.2.0 paramiko-2.7.2 pycparser-2.20 pynacl-1.4.0 pyrsistent-0.18.0 python-dotenv-0.19.0 requests-2.26.0 six-1.16.0 texttable-1.6.4 urllib3-1.26.6 websocket-client-0.59.0

Starting HashiCorp Vault in server mode under a docker container

Running HashiCorp Vault in development mode is really easy, but starting the vault in server mode under a docker container requires a few extra steps, which are described in this article.

There are several simple steps, which are hard to find in one place, to run a HashiCorp Vault in server mode (under docker):

- Prepare the directories to map into the docker container. The data in the directories will be safe and won’t be deleted if the container is deleted.

- Prepare an initial base configuration to start the server. Without it, the server won’t start up, even though the configuration is really simple.

- Start the HashiCorp Vault process in a docker container.

- Initialize the vault. During this step, the server will generate the database backend storage (files, in-memory, or cloud backends), 5 unseal keys, and an administrative root token. To manage the vault an administrative user is required.

- Unseal the vault. Decrypt the database backend to use the service with at least three commands and three different unseal keys generated during the initialization step.

- Log in with the administrative user and enable a vault engine to store values (or generate tokens, passwords, and so on). The example here enables the secrets engine for a key:value backend. Check out the secrets engines – https://www.vaultproject.io/docs/secrets

STEP 1) Summary of the mapped directories in the docker

Three directories are preserved:

- /vault/config – contains configuration files in HCL or JSON format.

- /vault/data – the place where the encrypted database files will be kept, but only if a storage engine such as “file” or “raft” is used. More information here – https://www.vaultproject.io/docs/configuration/storage

- /vault/logs – for writing persistent audit logs. This feature should be enabled explicitly in the configuration.

The base directory used is /srv/vault/. The three directories are created as follows and will be mapped into the docker container:

    mkdir -p /srv/vault/config /srv/vault/data /srv/vault/logs
    chmod 777 /srv/vault/data

The server’s directory /srv/vault/config will be mapped to the container’s directory /vault/config, and likewise for the other two.

STEP 2) Initial base configuration

The initial configuration file is placed in /vault/config/config.hcl and uses the HCL format – https://github.com/hashicorp/hcl. The initial configuration is minimal:

    storage "raft" {
      path = "/vault/data"
      node_id = "node1"
    }

    listener "tcp" {
      address = "127.0.0.1:8200"
      tls_disable = 1
    }

    disable_mlock = true
    api_addr = "http://127.0.0.1:8200"
    cluster_addr = "https://127.0.0.1:8201"
    ui = true

Place the file in /srv/vault/config/config.hcl.
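STEP 1 and STEP 2 can be combined into one small script. The sketch below is an illustration only: BASE is a hypothetical scratch directory standing in for /srv/vault, and the log directory is named logs to match the volume mapping used by the docker run command in STEP 3.

```shell
#!/bin/sh
# Sketch only: BASE is a scratch directory standing in for /srv/vault (assumption).
BASE="${BASE:-$(mktemp -d)/vault}"

# The three host directories that get mapped into the container.
mkdir -p "$BASE/config" "$BASE/data" "$BASE/logs"

# Minimal server configuration, same settings as in the article.
cat > "$BASE/config/config.hcl" <<'EOF'
storage "raft" {
  path    = "/vault/data"
  node_id = "node1"
}

listener "tcp" {
  address     = "127.0.0.1:8200"
  tls_disable = 1
}

disable_mlock = true
api_addr      = "http://127.0.0.1:8200"
cluster_addr  = "https://127.0.0.1:8201"
ui            = true
EOF

# Show the resulting layout.
find "$BASE" | sort
```

With BASE=/srv/vault the script would produce exactly the layout expected by the next step.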

STEP 3) Start the Hashicorp vault server in docker

Mapping the three directories.

root@srv ~ # docker run --cap-add=IPC_LOCK -v /srv/vault/config:/vault/config -v /srv/vault/data:/vault/data -v /srv/vault/logs:/vault/logs --name=srv-vault vault server

==> Vault server configuration:

Api Address: http://127.0.0.1:8200

Cgo: disabled

Cluster Address: https://127.0.0.1:8201

Go Version: go1.14.7

Listener 1: tcp (addr: "127.0.0.1:8200", cluster address: "127.0.0.1:8201", max_request_duration: "1m30s", max_request_size: "33554432", tls: "disabled")

Log Level: info

Mlock: supported: true, enabled: false

Recovery Mode: false

Storage: raft (HA available)

Version: Vault v1.5.3

Version Sha: 9fcd81405feb320390b9d71e15a691c3bc1daeef

==> Vault server started! Log data will stream in below:
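With the server running, the remaining steps from the list at the top (initialize, unseal, log in, enable a secrets engine) might look roughly like the sketch below. It assumes the container name srv-vault and the listener address from the configuration above; the helper vault_cmd is hypothetical, and by default it only prints the commands instead of executing them.

```shell
#!/bin/sh
# Sketch only: by default the commands are printed, not executed (DRY_RUN=1).
CONTAINER="srv-vault"                # container name from the docker run command
VAULT_ADDR="http://127.0.0.1:8200"   # listener address from config.hcl
DRY_RUN="${DRY_RUN:-1}"

# vault_cmd ARGS...: run (or print) a vault CLI command inside the container.
vault_cmd() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "docker exec -e VAULT_ADDR=$VAULT_ADDR -it $CONTAINER vault $*"
    else
        docker exec -e "VAULT_ADDR=$VAULT_ADDR" -it "$CONTAINER" vault "$@"
    fi
}

vault_cmd operator init                    # generates the unseal keys and the root token
vault_cmd operator unseal                  # prompts for an unseal key
vault_cmd operator unseal                  # second, different key
vault_cmd operator unseal                  # third, different key
vault_cmd login                            # prompts for the root token
vault_cmd secrets enable -path=secret kv   # key:value secrets engine
```

Setting DRY_RUN=0 would execute the commands against the running container; the unseal keys and the root token printed by the init step must be stored somewhere safe.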

Edit MySQL options in docker (or docker-compose) MySQL

Modifying the default options for the docker (podman) MySQL server is essential. The default MySQL options are too conservative, even for simple (automation?) tests.

For example, modifying only one or two of the default InnoDB configuration options may make the SQL queries and the related automation tests execute several times faster.

Here are three simple ways to modify the (default or current) MySQL my.cnf configuration options:

- Command-line arguments. All MySQL configuration options can be overridden by passing them on the command line of the mysqld binary. The format is:

--variable-name=value

and the variable names could be obtained by

mysqld --verbose --help

and for the live configuration options:

mysqladmin variables

- Options in an additional configuration file, which will be included in the main configuration. The options in /etc/mysql/conf.d/config-file.cnf take precedence.

- Replacing the default my.cnf configuration file – /etc/mysql/my.cnf.

Check out also the official page – https://hub.docker.com/_/mysql.

Under CentOS 8 docker is replaced by podman, so just replace docker with podman in all of the commands below.

OPTION 1) Command-line arguments.

This is the simplest way of modifying the default my.cnf (the one which comes with the docker image, or the one in the current docker image file). It is fast and easy to use and change, though it takes a bit more typing on the command line. As mentioned above, all MySQL options can be changed by a command-line argument to the mysqld binary. For example:

    mysqld --innodb_buffer_pool_size=1024M

It will start the MySQL server with the variable innodb_buffer_pool_size set to 1G. Translating it to docker (for multiple options just add them at the end of the command):

- docker run:

    root@srv ~ # docker run --name my-mysql -v /var/lib/mysql:/var/lib/mysql \
      -e MYSQL_ROOT_PASSWORD=111111 \
      -d mysql:8 \
      --innodb_buffer_pool_size=1024M \
      --innodb_read_io_threads=4 \
      --innodb_flush_log_at_trx_commit=2 \
      --innodb_flush_method=O_DIRECT
    1bb7f415ab03b8bfd76d1cf268454e3c519c52dc383b1eb85024e506f1d04dea
    root@srv ~ # docker exec -it my-mysql mysqladmin -p111111 variables|grep innodb_buffer_pool_size
    | innodb_buffer_pool_size | 1073741824

- docker-compose:

    # Docker MySQL arguments example
    version: '3.1'
    services:
      db:
        container_name: my-mysql
        image: mysql:8
        command: --default-authentication-plugin=mysql_native_password --innodb_buffer_pool_size=1024M --innodb_read_io_threads=4 --innodb_flush_log_at_trx_commit=2 --innodb_flush_method=O_DIRECT
        restart: always
        environment:
          MYSQL_ROOT_PASSWORD: 111111
        volumes:
          - /var/lib/mysql_data:/var/lib/mysql
        ports:
          - "3306:3306"

Here is how to run it (the above text file should be named docker-compose.yml and the file should be in the current directory when executing the command below):

    root@srv ~ # docker-compose up
    Creating network "docker-compose-mysql_default" with the default driver
    Creating my-mysql ... done
    Attaching to my-mysql
    my-mysql | 2020-06-16 09:45:35+00:00 [Note] [Entrypoint]: Entrypoint script for MySQL Server 8.0.20-1debian10 started.
    my-mysql | 2020-06-16 09:45:35+00:00 [Note] [Entrypoint]: Switching to dedicated user 'mysql'
    my-mysql | 2020-06-16 09:45:35+00:00 [Note] [Entrypoint]: Entrypoint script for MySQL Server 8.0.20-1debian10 started.
    my-mysql | 2020-06-16T09:45:36.293747Z 0 [Warning] [MY-011070] [Server] 'Disabling symbolic links using --skip-symbolic-links (or equivalent) is the default. Consider not using this option as it' is deprecated and will be removed in a future release.
    my-mysql | 2020-06-16T09:45:36.293906Z 0 [System] [MY-010116] [Server] /usr/sbin/mysqld (mysqld 8.0.20) starting as process 1
    my-mysql | 2020-06-16T09:45:36.307654Z 1 [System] [MY-013576] [InnoDB] InnoDB initialization has started.
    my-mysql | 2020-06-16T09:45:36.942424Z 1 [System] [MY-013577] [InnoDB] InnoDB initialization has ended.
    my-mysql | 2020-06-16T09:45:37.136537Z 0 [System] [MY-011323] [Server] X Plugin ready for connections. Socket: '/var/run/mysqld/mysqlx.sock' bind-address: '::' port: 33060
    my-mysql | 2020-06-16T09:45:37.279733Z 0 [Warning] [MY-010068] [Server] CA certificate ca.pem is self signed.
    my-mysql | 2020-06-16T09:45:37.306693Z 0 [Warning] [MY-011810] [Server] Insecure configuration for --pid-file: Location '/var/run/mysqld' in the path is accessible to all OS users. Consider choosing a different directory.
    my-mysql | 2020-06-16T09:45:37.353358Z 0 [System] [MY-010931] [Server] /usr/sbin/mysqld: ready for connections. Version: '8.0.20' socket: '/var/run/mysqld/mysqld.sock' port: 3306 MySQL Community Server - GPL.

And check the option:

    root@srv ~ # docker exec -it my-mysql mysqladmin -p111111 variables|grep innodb_buffer_pool_size
    | innodb_buffer_pool_size | 1073741824

OPTION 2) Options in an additional configuration file.

Create a MySQL option file with the name config-file.cnf:

    [mysqld]
    innodb_buffer_pool_size=1024M
    innodb_read_io_threads=4
    innodb_flush_log_at_trx_commit=2
    innodb_flush_method=O_DIRECT

- docker-compose

The source path does not have to be an absolute path.

    # Docker MySQL arguments example
    version: '3.1'
    services:
      db:
        container_name: my-mysql
        image: mysql:8
        command: --default-authentication-plugin=mysql_native_password
        restart: always
        environment:
          MYSQL_ROOT_PASSWORD: 111111
        volumes:
          - /var/lib/mysql_data:/var/lib/mysql
          - ./config-file.cnf:/etc/mysql/conf.d/config-file.cnf
        ports:
          - "3306:3306"

- docker run

The source path must be an absolute path!

    docker run --name my-mysql \
      -v /var/lib/mysql_data:/var/lib/mysql \
      -v /etc/mysql/docker-instances/config-file.cnf:/etc/mysql/conf.d/config-file.cnf \
      -e MYSQL_ROOT_PASSWORD=111111 \
      -d mysql:8
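Because docker run wants an absolute source path, a script can resolve the path before building the command. The following is a minimal sketch: WORK_DIR is a hypothetical scratch directory, and the docker run command is printed rather than executed so the example works without a Docker daemon.

```shell
#!/bin/sh
# Sketch only: WORK_DIR is a scratch directory (assumption).
WORK_DIR="${WORK_DIR:-$(mktemp -d)}"

# The additional option file from OPTION 2.
cat > "$WORK_DIR/config-file.cnf" <<'EOF'
[mysqld]
innodb_buffer_pool_size=1024M
innodb_read_io_threads=4
innodb_flush_log_at_trx_commit=2
innodb_flush_method=O_DIRECT
EOF

# Resolve the directory to an absolute path before handing it to "docker run -v".
CNF_PATH="$(cd "$WORK_DIR" && pwd)/config-file.cnf"

# Printed instead of executed, so the sketch runs without a Docker daemon.
echo "docker run --name my-mysql -v /var/lib/mysql_data:/var/lib/mysql -v $CNF_PATH:/etc/mysql/conf.d/config-file.cnf -e MYSQL_ROOT_PASSWORD=111111 -d mysql:8"
```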

OPTION 3) Replacing the default my.cnf configuration file.

Add the modified options to a my.cnf template file and map it into the container at /etc/mysql/my.cnf. When overwriting the main MySQL option file my.cnf, you may map the whole /etc/mysql directory, too (just replace /etc/mysql/my.cnf with /etc/mysql below). The source file (or directory) may be any file (or directory), not necessarily /etc/mysql/my.cnf (or /etc/mysql).

- docker run:

The source path must be an absolute path.

    docker run --name my-mysql \
      -v /var/lib/mysql_data:/var/lib/mysql \
      -v /etc/mysql/my.cnf:/etc/mysql/my.cnf \
      -e MYSQL_ROOT_PASSWORD=111111 \
      --publish 3306:3306 \
      -d mysql:8

Note: here a new option “--publish 3306:3306” is included to show how to map the ports out of the container, like all the examples with docker-compose here.

- docker-compose:

The source path does not have to be an absolute path; it may be relative to the current directory.

    # Use root/111111 as user/password credentials
    version: '3.1'
    services:
      db:
        container_name: my-mysql
        image: mysql:8
        command: --default-authentication-plugin=mysql_native_password
        restart: always
        environment:
          MYSQL_ROOT_PASSWORD: 111111
        volumes:
          - /var/lib/mysql_data:/var/lib/mysql
          - ./mysql/my.cnf:/etc/mysql/my.cnf
        ports:
          - "3306:3306"

Chromium browser in Ubuntu 20.04 LTS without snap to use in docker container

The Ubuntu team has its own vision for the snap (https://snapcraft.io/) service, and that is why they have moved the really big and difficult to maintain Chromium browser package into a snap package. Unfortunately, snap has many issues with docker containers; in short, it is very difficult to run snap in a docker container. The user may simply not want to deal with snap packages (even though this is the future according to the Ubuntu team) or, like most developers, may need a browser for tests executed in a container.

Whether you are a developer or an ordinary user, this article is for you if you want the Chromium browser installed under Ubuntu 20.04 LTS without the snap service!

There are multiple options to end up with a Chromium browser installed on the system without the snap service:

- Using the Debian package and the Debian repository. The problem here is that using Ubuntu and Debian repositories simultaneously on one machine is not a good idea, even with the hack that gives the Debian packages a low priority – https://askubuntu.com/questions/1204571/chromium-without-snap/1206153#1206153

- Using Google Chrome – https://www.google.com/chrome/?platform=linux. It is just a single Debian package, which provides a Chromium-like browser, and all dependencies requesting the Chromium browser package are fulfilled.

- Using the Chromium team dev or beta PPA (https://launchpad.net/~chromium-team) for the nearest Ubuntu version, if packages for Focal (Ubuntu 20.04 LTS) are still missing.

- more options available?

This article shows how to use the Ubuntu 18.04 (Bionic) Chromium browser package from the Chromium team beta PPA under Ubuntu 20.04 LTS (Focal). The Bionic package from the very same repository of the Ubuntu Chromium team may be used, too.

All dependencies will be downloaded from Ubuntu 20.04, and only several chromium-* packages will be downloaded from the Bionic (Ubuntu 18.04) repository of the Chromium team PPA. The chances of breaking something are really small compared to option 1 above, which uses the Debian packages and repositories. Hopefully, we will soon have Focal (Ubuntu 20.04 LTS) packages in the Ubuntu Chromium team PPA!

Dockerfile

An example of a Dockerfile installing Chromium (and python3 selenium for automating web browser interactions)

    RUN apt-key adv --fetch-keys "https://keyserver.ubuntu.com/pks/lookup?op=get&search=0xea6e302dc78cc4b087cfc3570ebea9b02842f111" \
        && echo 'deb http://ppa.launchpad.net/chromium-team/beta/ubuntu bionic main ' >> /etc/apt/sources.list.d/chromium-team-beta.list \
        && apt update
    RUN export DEBIAN_FRONTEND=noninteractive \
        && export DEBCONF_NONINTERACTIVE_SEEN=true \
        && apt-get -y install chromium-browser
    RUN apt-get -y install python3-selenium

The first command adds the repository key and the repository to the Ubuntu source lists. Note that we are adding “bionic main”, not “focal main”.

Of all the dependencies of the Bionic chromium-browser, only three packages are pulled from the Bionic repository, and all the others come from Ubuntu 20.04 (Focal):

    .....
    Get:1 http://ppa.launchpad.net/chromium-team/beta/ubuntu bionic/main amd64 chromium-codecs-ffmpeg-extra amd64 84.0.4147.38-0ubuntu0.18.04.1 [1174 kB]
    .....
    Get:5 http://ppa.launchpad.net/chromium-team/beta/ubuntu bionic/main amd64 chromium-browser amd64 84.0.4147.38-0ubuntu0.18.04.1 [67.8 MB]
    .....
    Get:187 http://ppa.launchpad.net/chromium-team/beta/ubuntu bionic/main amd64 chromium-browser-l10n all 84.0.4147.38-0ubuntu0.18.04.1 [3429 kB]
    .....
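To see at a glance which packages were really pulled from the PPA, the build output can be filtered. This is just a sketch that assumes the “Get:N URL suite arch package arch version [size]” line layout shown in the output above; the function name packages_from_repo is invented for this example.

```shell
#!/bin/sh
# Read "apt-get install" output on stdin and print "package version" for
# every download line whose repository URL contains the given text.
# Usage: packages_from_repo URL_PART < apt-output.log
packages_from_repo() {
    awk -v repo="$1" '
        # Field 1 is "Get:N", field 2 the repository URL,
        # field 5 the package name and field 7 its version.
        $1 ~ /^Get:[0-9]+$/ && index($2, repo) { print $5, $7 }
    '
}

# Example:
#   docker build . 2>&1 | packages_from_repo ppa.launchpad.net
```

If more than the expected chromium-* packages show up in the result, the PPA is overriding packages from the Focal repositories, and it is worth a closer look.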

Here is the whole Dockerfile sample file:

    #
    # Docker file for the image "chromium browser without snap"
    #
    FROM ubuntu:20.04
    MAINTAINER myuser@example.com

    #chromium browser
    #original PPA repository, use if our local fails
    RUN echo "tzdata tzdata/Areas select Etc" | debconf-set-selections && echo "tzdata tzdata/Zones/Etc select UTC" | debconf-set-selections
    RUN export DEBIAN_FRONTEND=noninteractive && export DEBCONF_NONINTERACTIVE_SEEN=true
    RUN apt-get -y update && apt-get -y upgrade
    RUN apt-get -y install gnupg2 apt-utils wget
    #RUN wget -O /root/chromium-team-beta.pub "https://keyserver.ubuntu.com/pks/lookup?op=get&search=0xea6e302dc78cc4b087cfc3570ebea9b02842f111" && apt-key add /root/chromium-team-beta.pub
    RUN apt-key adv --fetch-keys "https://keyserver.ubuntu.com/pks/lookup?op=get&search=0xea6e302dc78cc4b087cfc3570ebea9b02842f111" && echo 'deb http://ppa.launchpad.net/chromium-team/beta/ubuntu bionic main ' >> /etc/apt/sources.list.d/chromium-team-beta.list && apt update
    RUN export DEBIAN_FRONTEND=noninteractive && export DEBCONF_NONINTERACTIVE_SEEN=true && apt-get -y install chromium-browser
    RUN apt-get -y install python3-selenium

Desktop install

The desktop installation is almost the same as the Dockerfile above. Just execute the following lines:

Keep on reading!

docker-compose using private registry (repository) with shell executor in gitlab-runner

docker-compose makes your life easier as long as you use docker images from the official docker registry, and you may live forever without knowing the issues that appear when gitlab-runner with the shell executor runs docker-compose with an image from a private docker registry. The private docker registry here is simply the GitLab registry holding the docker images of your software.

Here is the exact error from docker-compose:

pull access denied for applicaton-server, repository does not exist or may require 'docker login': denied: requested access to the resource is denied

To be able to use a private docker repository (i.e. registry) with docker-compose in gitlab-runner you must check the following:

- Make sure the user running gitlab-runner is the same user that runs docker-compose in your scripts. docker-compose uses “~/.docker/config.json”, which holds the login credentials for the docker registries (including private ones). The file is initially created when the before script logs in, and it is then used by the programs started from the gitlab-runner executor, in this case the shell executor. In simple words, to be able to log in to a private docker registry within a gitlab runner, “~/.docker/config.json” must exist in the user’s home directory. If gitlab-runner runs under the root user, the credentials file is located in “/root/.docker/config.json”, so the docker-compose started by gitlab-runner must be executed as root, too!

The user running gitlab-runner and the user that later executes docker-compose in your automation code MUST be the same.

So be careful with su and sudo in your scripts! The authentication command and docker-compose must run as the same user.

- Run a before script in .gitlab-ci.yml with the docker login command, using the GitLab CI job token (or an explicit user and password if your environment is configured that way) and the URL of the private docker registry (including the port and the “/”):

    ....
    before_script:
      - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN mytest.private-docker-repository.com/
    ....

In fact, you may run the above command just before the docker-compose command.

- Explicitly add the private registry URL (the whole URL to the image, including the full path to the image, if any) to the docker image name in the docker-compose.yml file.

In your docker-compose.yml include:

    ....
    image: mytest.private-docker-repository.com/myapp/applicaton-server:1.1.1
    ....

Do not rely on docker-compose using the private docker registry if you omit its URL like this:

    ....
    image: applicaton-server:1.1.1
    ....

Probably an additional configuration is required to use it as the default docker registry.

An extended output of the error and the problem:

    ......
    Checking out xxx as master...
    Skipping Git submodules setup
    $ ./start-jobs.sh 00:12
    + docker-compose -p applicaton-server-v2 run --rm --entrypoint 'dockerize -wait tcp://db:3306 -timeout 60s start-server ../main.xml ' applicaton-server
    Creating network "applicaton-server-v2_default" with the default driver
    Pulling db (mysql:5.7)...
    5.6: Pulling from library/mysql
    Digest: sha256:xxx
    Status: Downloaded newer image for mysql:5.7
    Creating applicaton-server-v2_db_1 ...
    Creating applicaton-server-v2_db_1
    Pulling applicaton-server (applicaton-server:1.1.1)...
    pull access denied for applicaton-server, repository does not exist or may require 'docker login': denied: requested access to the resource is denied
    + finish
    + docker-compose logs
    .....

As you can see, “start-jobs.sh” executes the “docker-compose” command, and issuing a “docker login” command just before “docker-compose” will not help on its own! In fact, the line:

    Pulling applicaton-server (applicaton-server:1.1.1)...

reports that the image is being pulled, but docker-compose tries to pull it from the official docker registry and not from the private docker registry (even if the private one is included in “~/.docker/config.json” and the file is in place).

You must include the URL of the private docker registry in docker-compose.yml, as mentioned above, to be sure the docker-compose command will try to pull the image from the right place!
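A quick way to spot image names without a registry host is to scan the compose file before the job runs. The sketch below relies on the usual docker naming rule that the part before the first “/” counts as a registry host only if it contains a “.” or a “:” or is exactly “localhost”. The function names are made up for this illustration, and quoted image values or images built from variables are not handled.

```shell
#!/bin/sh
# Succeed when the image name carries an explicit registry host.
has_registry_host() {
    case "$1" in
        */*) first=${1%%/*} ;;   # text before the first "/"
        *)   return 1 ;;         # no "/" at all: default registry
    esac
    case "$first" in
        localhost|*.*|*:*) return 0 ;;
        *) return 1 ;;
    esac
}

# Print every "image:" value of a compose file that has no registry host.
# Usage: images_without_registry docker-compose.yml
images_without_registry() {
    sed -n 's/^[[:space:]]*image:[[:space:]]*//p' "$1" |
    while read -r image; do
        has_registry_host "$image" || printf '%s\n' "$image"
    done
}
```

Images that are meant to come from the official registry (mysql:5.7 in the output above) are listed as well, so the result is a list to review, not a list of errors.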

Using a bare “docker login” (without credentials) fails, too, because the job has no TTY for an interactive login:

    .....
    Checking out xxx as master...
    Skipping Git submodules setup
    $ ./start-jobs.sh 00:04
    + docker login
    Error: Cannot perform an interactive login from a non TTY device
    + finish
    + docker-compose logs
    .....

Running docker login with the GitLab CI token from the user script works perfectly (the script authenticates successfully against the private docker registry), but the problem in the output below is that docker-compose still pulls the image from the default registry, which is the official docker registry, not the private one. Despite a successful docker login to the private registry, the default registry is used when there is no registry URL in the image section of docker-compose.yml.

    Checking out xxx as master...
    Skipping Git submodules setup 00:34
    $ ./start-jobs.sh
    + trap finish EXIT
    + docker login -u gitlab-ci-token -p [MASKED] mytest.private-docker-repository.com/
    WARNING! Using --password via the CLI is insecure. Use --password-stdin.
    WARNING! Your password will be stored unencrypted in /home/gitlab-runner/.docker/config.json.
    Configure a credential helper to remove this warning. See
    https://docs.docker.com/engine/reference/commandline/login/#credentials-store
    Login Succeeded
    + docker-compose -p applicaton-server-v2 run --rm --entrypoint 'dockerize -wait tcp://db:3306 -timeout 60s start-server ../main.xml ' applicaton-server
    Creating network "applicaton-server-v2_default" with the default driver
    Pulling db (mysql:5.7)...
    5.6: Pulling from library/mysql
    Digest: sha256:xxx
    Status: Downloaded newer image for mysql:5.7
    Creating applicaton-server-v2_db_1 ...
    Creating applicaton-server-v2_db_1
    Pulling applicaton-server (applicaton-server:latest)...
    pull access denied for applicaton-server, repository does not exist or may require 'docker login': denied: requested access to the resource is denied
    + finish
    + docker-compose logs
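The first warning in the output above suggests --password-stdin instead of -p. A possible way to follow that advice in a job script is sketched here; the wrapper name registry_login is an assumption of this example, and it only changes how the token is handed over, not which registry docker-compose pulls from.

```shell
#!/bin/sh
# Log in to a registry without putting the token on the command line.
# Usage: registry_login REGISTRY USER TOKEN
registry_login() {
    # The token is sent to docker over stdin, so it is not passed as a
    # command-line argument of docker.
    printf '%s' "$3" | docker login -u "$2" --password-stdin "$1"
}

# Example for the shell executor, run as the same user that later runs
# docker-compose:
#   registry_login mytest.private-docker-repository.com/ gitlab-ci-token "$CI_JOB_TOKEN"
```

The image name in the compose file still needs the registry URL, exactly as described above.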

firewalld and podman (or docker) – no internet in the container and could not resolve host

If you happen to use CentOS 8, you have already discovered that Red Hat (and therefore CentOS) switched to podman, a daemonless container tool with a docker-compatible command line. The following fix will probably also help someone who does not use CentOS 8 or podman. For now, the podman and docker command lines are almost the same.

Creating and starting a container is easy and in most cases takes only one command, but you may stumble on an error where your container cannot resolve host names or cannot connect to an IP even though the IP answers to ping!

The service in the container may live a happy life without Internet access, using only the ports mapped from the outside world. Still, it may need Internet access, for example when an update should be performed.

Here is how to fix podman (docker) missing the Internet access in the container:

- No ping to the outside world. Chances are that IP forwarding is not enabled:

sysctl -w net.ipv4.ip_forward=1

And do not forget to make it permanent by adding “net.ipv4.ip_forward=1” to /etc/sysctl.conf (or to a “.conf” file in /etc/sysctl.d/).

- Ping from the container to an outside IP works, but no connection to any service is available! Probably NAT is not enabled in your podman (docker) configuration. With firewalld, at least, you must enable the masquerade option of the public zone:

    firewall-cmd --zone=public --add-masquerade
    firewall-cmd --permanent --zone=public --add-masquerade

The second command, with “--permanent”, makes the option permanent across reboots.
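Both settings can be checked on the host before changing anything. The following is a rough sketch that assumes firewalld with the public zone, as in the commands above; the function names are invented for this example, and the optional file argument of ip_forward_enabled exists only to make the check easy to try out.

```shell
#!/bin/sh
# Succeed when IPv4 forwarding is switched on.
ip_forward_enabled() {
    [ "$(cat "${1:-/proc/sys/net/ipv4/ip_forward}" 2>/dev/null)" = "1" ]
}

# Succeed when masquerading is enabled for the zone (default: public).
# Returns 2 when firewall-cmd is not installed.
masquerade_enabled() {
    command -v firewall-cmd >/dev/null 2>&1 || return 2
    firewall-cmd --zone="${1:-public}" --query-masquerade >/dev/null 2>&1
}

# Print a short report with the fix for every missing setting.
check_container_nat() {
    if ip_forward_enabled; then
        echo "ip_forward: enabled"
    else
        echo "ip_forward: DISABLED (sysctl -w net.ipv4.ip_forward=1)"
    fi
    rc=0
    masquerade_enabled public || rc=$?
    case "$rc" in
        0) echo "masquerade: enabled" ;;
        2) echo "masquerade: firewall-cmd not found, check skipped" ;;
        *) echo "masquerade: DISABLED or firewalld not running (firewall-cmd --zone=public --add-masquerade)" ;;
    esac
}
```

Running check_container_nat on the host (not in the container) shows which of the two fixes above is still missing.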

The error – Could not resolve host (Name or service not known) despite having servers in /etc/resolv.conf and ping to them!

One may think that having name server IPs in /etc/resolv.conf and being able to ping them from the container should give the container access to the Internet. But the following error occurs:

    [root@srv /]# yum install telnet
    Loaded plugins: fastestmirror, ovl
    Determining fastest mirrors
     * base: artfiles.org
     * extras: centos.mirror.net-d-sign.de
     * updates: centos.bio.lmu.de
    http://mirror.fra10.de.leaseweb.net/centos/7.7.1908/os/x86_64/repodata/repomd.xml: [Errno 14] curl#6 - "Could not resolve host: mirror.fra10.de.leaseweb.net; Unknown error"
    Trying other mirror.
    http://artfiles.org/centos.org/7.7.1908/os/x86_64/repodata/repomd.xml: [Errno 14] curl#6 - "Could not resolve host: artfiles.org; Unknown error"
    Trying other mirror.
    ^C
    Exiting on user cancel
    [root@srv /]# ^C
    [root@srv /]# ping 8.8.8.8
    PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.
    64 bytes from 8.8.8.8: icmp_seq=1 ttl=56 time=5.05 ms
    64 bytes from 8.8.8.8: icmp_seq=2 ttl=56 time=5.06 ms
    ^C
    --- 8.8.8.8 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1000ms
    rtt min/avg/max/mdev = 5.050/5.055/5.061/0.071 ms
    [root@srv ~]# cat /etc/resolv.conf
    nameserver 8.8.8.8
    nameserver 8.8.4.4
    [root@srv /]# ping google.com
    ping: google.com: Name or service not known

The error 2 – Can’t connect to despite having ping to the IP!

    [root@srv /]# ping 2.2.2.2
    PING 2.2.2.2 (2.2.2.2) 56(84) bytes of data.
    64 bytes from 2.2.2.2: icmp_seq=1 ttl=56 time=9.15 ms
    64 bytes from 2.2.2.2: icmp_seq=2 ttl=56 time=9.16 ms
    ^C
    [root@srv2 /]# mysql -h2.2.2.2 -uroot -p
    Enter password:
    ERROR 2003 (HY000): Can't connect to MySQL server on '2.2.2.2' (113)
    [root@srv2 /]#

Despite the MySQL server on 2.2.2.2 answering ping and despite the firewall on 2.2.2.2 allowing outside connections, the container could not connect to it. Testing other services like HTTP, HTTPS, FTP and so on resulted in “unable to connect”, too, simply because NAT (aka masquerade) is not enabled in the firewall.