Here is an annoying error that shows up when using LXC container tools such as lxc-attach, and it is really simple to fix.

[root@srv ~]# lxc-attach -n db-cluster-3

lxc_container: attach.c: lxc_attach_run_shell: 1333 Permission denied - failed to exec shell

[root@srv ~]#

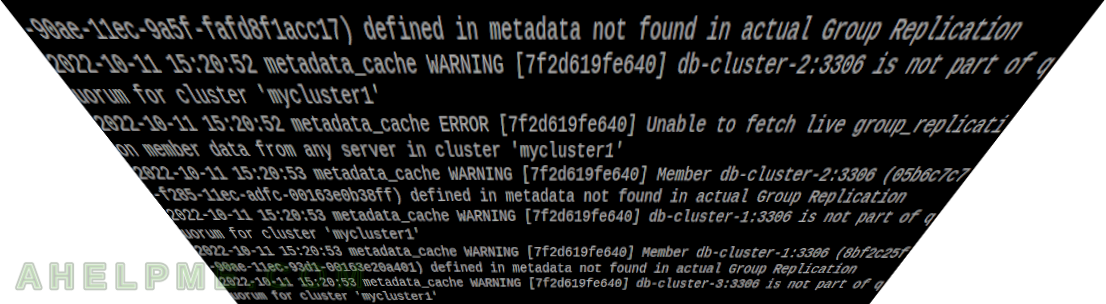

The error only says that the bash shell in the container cannot be started. The SELinux audit log (usually /var/log/audit/audit.log) records the denials behind it:

type=AVC msg=audit(1665745824.682:24229): avc: denied { entrypoint } for pid=20646 comm="lxc-attach" path="/usr/bin/bash" dev="md3" ino=111806476 scontext=system_u:system_r:unconfined_service_t:s0 tcontext=unconfined_u:object_r:var_log_t:s0 tclass=file

type=SYSCALL msg=audit(1665745824.682:24229): arch=c000003e syscall=59 success=no exit=-13 a0=24412c6 a1=7ffe87c07170 a2=2443870 a3=7ffe87c08c60 items=0 ppid=20644 pid=20646 auid=0 uid=0 gid=0 euid=0 suid=0 fsuid=0 egid=0 sgid=0 fsgid=0 tty=pts12 ses=3304 comm="lxc-attach" exe="/usr/bin/lxc-attach" subj=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023 key=(null)

type=PROCTITLE msg=audit(1665745824.682:24229): proctitle=6C78632D617474616368002D6E0064622D636C75737465722D33

type=AVC msg=audit(1665745824.682:24230): avc: denied { entrypoint } for pid=20646 comm="lxc-attach" path="/usr/bin/bash" dev="md3" ino=111806476 scontext=system_u:system_r:unconfined_service_t:s0 tcontext=unconfined_u:object_r:var_log_t:s0 tclass=file

type=SYSCALL msg=audit(1665745824.682:24230): arch=c000003e syscall=59 success=no exit=-13 a0=7f08b5e579a0 a1=7ffe87c07170 a2=2443870 a3=7ffe87c08c60 items=0 ppid=20644 pid=20646 auid=0 uid=0 gid=0 euid=0 suid=0 fsuid=0 egid=0 sgid=0 fsgid=0 tty=pts12 ses=3304 comm="lxc-attach" exe="/usr/bin/lxc-attach" subj=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023 key=(null)

type=PROCTITLE msg=audit(1665745824.682:24230): proctitle=6C78632D617474616368002D6E0064622D636C75737465722D33
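
The interesting parts of these records are the source and target contexts of the denial and the hex-encoded proctitle. As a rough sketch (the helper names avc_field and decode_proctitle are made up for this example, and it relies only on POSIX shell tools rather than the audit utilities), they can be pulled out like this:

```shell
# Hypothetical helpers, not part of LXC or the audit tools.

# Print the value of one key=value field from an audit record.
# $1 = field name (for example tcontext), $2 = the audit line
avc_field() {
    printf '%s\n' "$2" | sed -n "s/.* $1=\([^ ]*\).*/\1/p"
}

# Decode the hex string of a PROCTITLE record; NUL bytes separate arguments.
decode_proctitle() {
    printf '%s\n' "$1" | fold -w 2 | while read -r byte; do
        if [ "$byte" = "00" ]; then
            printf ' '
        else
            # hex byte -> three-digit octal escape -> character
            printf "\\$(printf '%03o' "0x$byte")"
        fi
    done
    printf '\n'
}

# The first AVC record from the log above
line='type=AVC msg=audit(1665745824.682:24229): avc: denied { entrypoint } for pid=20646 comm="lxc-attach" path="/usr/bin/bash" dev="md3" ino=111806476 scontext=system_u:system_r:unconfined_service_t:s0 tcontext=unconfined_u:object_r:var_log_t:s0 tclass=file'

avc_field tcontext "$line"                  # full context of the denied file
avc_field tcontext "$line" | cut -d: -f3    # only the type part of that context
decode_proctitle 6C78632D617474616368002D6E0064622D636C75737465722D33
```

The second call prints var_log_t, the label that turns out to be wrong, and the last one prints the command line that triggered the denial, lxc-attach -n db-cluster-3. On a live system, ausearch -m avc -ts recent -i from the audit package should give a similar, already interpreted view where it is installed.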

So the problem is clearly in SELinux, which can be switched to permissive mode temporarily with:

setenforce 0

Turning SELinux off is not the right fix, though! There are two aspects to the problem:

- Missing SELinux rules, which are installed with a dedicated package, container-selinux.

- Wrong SELinux file context on the LXC container’s root directory. In most cases, the user has just moved the container from the default /var/lib/lxc/[container] to a new location. The container itself works, but some LXC tools, such as lxc-attach, break.

Installing container-selinux is easy:

dnf install -y container-selinux

Or the old yum:

yum install -y container-selinux

Then check the SELinux context of the container’s files with:

[root@srv ~]# ls -altrZ /mnt/storage/servers/mycontainer/

drwxr-xr-x. root root unconfined_u:object_r:var_log_t:s0 ..

-rw-r--r--. root root unconfined_u:object_r:var_log_t:s0 config

drwxrwx---. root root unconfined_u:object_r:var_log_t:s0 .

drwxr-xr-x. root root unconfined_u:object_r:var_log_t:s0 rootfs

The problem is the var_log_t type in the SELinux file context; it should be container_var_lib_t. Stop the container and fix the labels. With the default directory (/var/lib/lxc), this problem would not occur. Adding an SELinux file context definition for the new directory is mandatory when changing the root directory of a container:

[root@srv ~]# semanage fcontext -a -t container_var_lib_t '/mnt/storage/servers/mycontainer(/.*)?'

[root@srv ~]# restorecon -Rv /mnt/storage/servers/mycontainer/

restorecon reset /mnt/storage/servers/mycontainer context unconfined_u:object_r:var_log_t:s0->unconfined_u:object_r:container_var_lib_t:s0

.....

.....

restorecon reset /mnt/storage/servers/mycontainer/config context unconfined_u:object_r:var_log_t:s0->unconfined_u:object_r:container_var_lib_t:s0
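
When more than one container lives outside /var/lib/lxc, the same two commands are needed for each directory. A small sketch, assuming a hypothetical helper named relabel_commands that only prints the commands for review instead of running them:

```shell
# Hypothetical helper: print (not run) the relabel commands for one directory.
relabel_commands() {
    dir=${1%/}    # drop a trailing slash so the rule starts at the directory itself
    printf "semanage fcontext -a -t container_var_lib_t '%s(/.*)?'\n" "$dir"
    printf 'restorecon -Rv %s/\n' "$dir"
}

relabel_commands /mnt/storage/servers/mycontainer/
```

The (/.*)? suffix makes the rule match the directory itself and everything below it. Paths containing spaces or regex metacharacters would need extra quoting, which this sketch does not attempt.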

restorecon should fix the context of every file under /mnt/storage/servers/mycontainer/. Start the LXC container and try to attach to it with lxc-attach. Now there should not be any errors:

[root@srv ~]# lxc-attach -n mycontainer

[root@mycontainer ~]#

The files now have the right context type, container_var_lib_t. The parent directory (..) keeps var_log_t because it lies outside the relabeled path:

[root@srv ~]# ls -altrZ /mnt/storage/servers/mycontainer/

drwxr-xr-x. root root unconfined_u:object_r:var_log_t:s0 ..

-rw-r--r--. root root unconfined_u:object_r:container_var_lib_t:s0 config

drwxrwx---. root root unconfined_u:object_r:container_var_lib_t:s0 .

drwxr-xr-x. root root unconfined_u:object_r:container_var_lib_t:s0 rootfs
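
For a larger tree, eyeballing the listing gets tedious. The sketch below (the function name mislabeled is invented here) reads ls -Z style output on standard input and prints every entry whose type differs from the expected one, skipping the .. entry. It assumes file names without spaces.

```shell
# Hypothetical helper: list entries whose SELinux type is not the expected one.
# $1 = expected type; stdin = output of "ls -Z" (any column layout)
mislabeled() {
    awk -v want="$1" '
    {
        for (i = 1; i <= NF; i++) {
            # The context field is the one shaped like user:role:type:level
            if (split($i, part, ":") >= 4) {
                if (part[3] != want && $NF != "..") {
                    print $NF, part[3]
                }
                break
            }
        }
    }'
}

# Sample input: the listing taken before the relabel
mislabeled container_var_lib_t <<'EOF'
drwxr-xr-x. root root unconfined_u:object_r:var_log_t:s0 ..
-rw-r--r--. root root unconfined_u:object_r:var_log_t:s0 config
drwxrwx---. root root unconfined_u:object_r:var_log_t:s0 .
drwxr-xr-x. root root unconfined_u:object_r:var_log_t:s0 rootfs
EOF
```

On the server it would be fed with ls -alZ /mnt/storage/servers/mycontainer/ | mislabeled container_var_lib_t. No output means every entry carries the expected type.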

More on LXC containers – https://ahelpme.com/category/software/lxc/.