A big change for KDE Plasma happened two months ago: the “Merge kwayland-server into kwin” change.

As a result, starting with KDE Plasma 5.25 there is no kwayland-server any more (that is, there is no kwayland-server version 5.25 and no such package in Gentoo), and the leftover package may block a Gentoo update with the following error:

mydesktop root # emerge -va --verbose-conflicts --verbose --backtrack=300 $(qlist -IC|grep -i kde)

......

......

[ebuild U ] dev-util/kdevelop-php-22.04.2:5::gentoo [21.12.3:5::gentoo] USE="handbook -debug -test" 1,057 KiB

[ebuild U ] kde-apps/umbrello-22.04.2:5::gentoo [21.12.3:5::gentoo] USE="handbook php -debug -test" 5,544 KiB

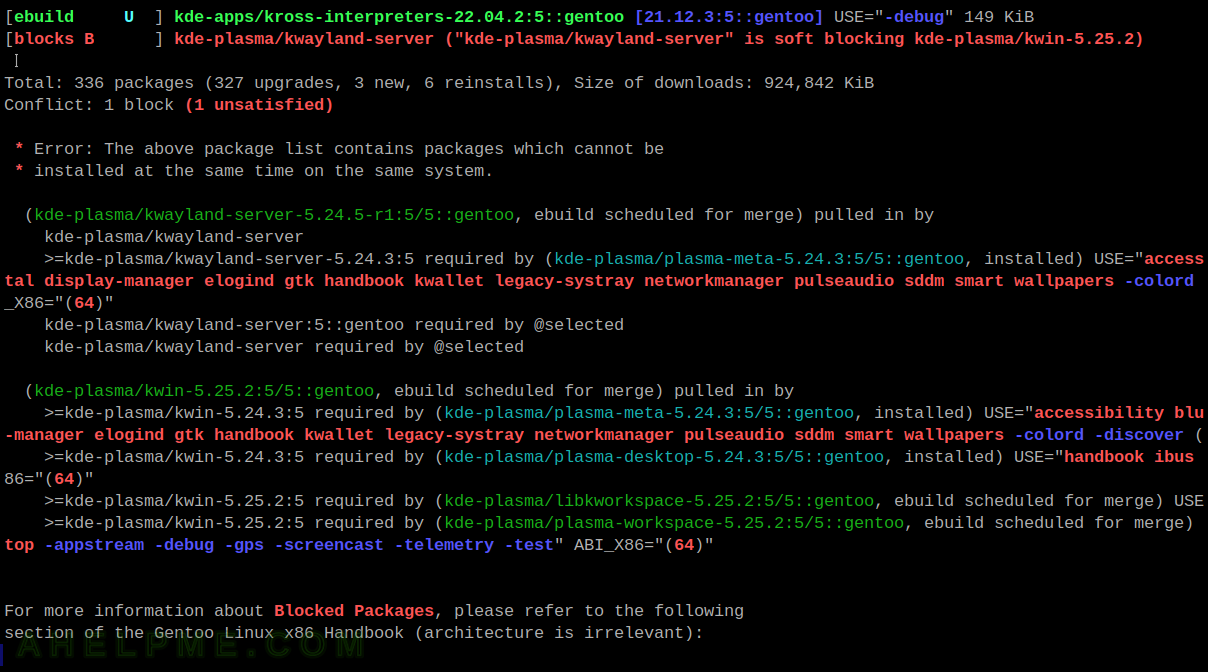

[ebuild U ] kde-apps/kross-interpreters-22.04.2:5::gentoo [21.12.3:5::gentoo] USE="-debug" 149 KiB

[blocks B ] kde-plasma/kwayland-server ("kde-plasma/kwayland-server" is soft blocking kde-plasma/kwin-5.25.2)

Total: 340 packages (329 upgrades, 5 new, 6 reinstalls), Size of downloads: 1,001,699 KiB

Conflict: 1 block (1 unsatisfied)

* Error: The above package list contains packages which cannot be

* installed at the same time on the same system.

(kde-plasma/kwayland-server-5.24.5-r1:5/5::gentoo, ebuild scheduled for merge) pulled in by

kde-plasma/kwayland-server

kde-plasma/kwayland-server:5::gentoo required by @selected

kde-plasma/kwayland-server required by @selected

(kde-plasma/kwin-5.25.2:5/5::gentoo, ebuild scheduled for merge) pulled in by

>=kde-plasma/kwin-5.25.2:5 required by (kde-plasma/plasma-desktop-5.25.2:5/5::gentoo, ebuild scheduled for merge) USE="handbook ibus kaccounts scim semantic-desktop -debug -emoji -telemetry -test" ABI_X86="(64)"

>=kde-plasma/kwin-5.25.2:5[lock] required by (kde-plasma/plasma-meta-5.25.2:5/5::gentoo, ebuild scheduled for merge) USE="accessibility bluetooth browser-integration crash-handler crypt desktop-portal display-manager elogind gtk handbook kwallet legacy-systray networkmanager pulseaudio sddm smart wallpapers -colord -discover (-firewall) -grub -plymouth -sdk -systemd -thunderbolt" ABI_X86="(64)"

>=kde-plasma/kwin-5.25.2:5 required by (kde-plasma/libkworkspace-5.25.2:5/5::gentoo, ebuild scheduled for merge) USE="-debug -test" ABI_X86="(64)"

>=kde-plasma/kwin-5.25.2:5 required by (kde-plasma/plasma-workspace-5.25.2:5/5::gentoo, ebuild scheduled for merge) USE="calendar fontconfig geolocation handbook policykit semantic-desktop -appstream -debug -gps -screencast -telemetry -test" ABI_X86="(64)"

emerge could not continue with the upgrade to KDE Plasma 5.25.2.

kwayland-server is pulled in by @selected, but the latest available version of the package is from the 5.24 release (5.24.5-r1). That should immediately signal that something is wrong with it, because the emerge command shows the rest of KDE Plasma being upgraded to 5.25 (5.25.2, to be exact).
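With 340 packages in the list, the single blocker line is easy to miss. Here is a minimal sketch for pulling it out of saved emerge output. It relies on Portage marking an unresolved blocker with an uppercase "B" and one it resolves by itself with a lowercase "b". The function name list_hard_blocks is hypothetical, not a Portage tool.

```shell
#!/bin/sh
# list_hard_blocks is a hypothetical helper name, not part of Portage.
# It prints only the unresolved blockers (uppercase "B") from a file that
# holds captured emerge output. Blockers that Portage resolves on its own
# are marked with a lowercase "b" and are skipped on purpose.
list_hard_blocks() {
    # $1: file with the saved output of an emerge run
    grep -E '^\[blocks +B +\]' "$1"
}
```

One way to use it is to save the output of a pretend run (for example emerge -pv with the same package list, redirected to a file) and then call list_hard_blocks on that file. Every line it prints names a package worth checking against the versions being installed.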

Solution – deselect/remove kde-plasma/kwayland-server

The solution is simple: deselect the package from the world set so it won’t be pulled in again in the future. Remove the package manually if the error persists, though deselecting alone should be enough. Of course, it should not be passed on the emerge command line either. In general, this package won’t be available any more.

Always keep an eye on the versions being pulled in and the versions you are trying to install. Most of the time the problem is obvious and comes from a single “wrong/bad” package, which may generate a great deal of erroneous and frightening dependency output.

mydesktop root # emerge --deselect kwayland-server

>>> Removing kde-plasma/kwayland-server from "world" favorites file...

>>> Removing kde-plasma/kwayland-server:5::gentoo from "world" favorites file...
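To double-check that nothing is left behind, the world file can be inspected directly. This is only a sketch: world_entries is a hypothetical helper name, and it assumes the default world file location /var/lib/portage/world with one atom per line. It prints every entry that selects the given category/package, with or without a slot suffix such as :5.

```shell
#!/bin/sh
# world_entries is a hypothetical helper name, not part of Portage.
# It prints each world-file line whose category/package part (the text
# before the first ":") equals the given atom exactly.
world_entries() {
    atom=$1                             # e.g. kde-plasma/kwayland-server
    world=${2:-/var/lib/portage/world}  # assumed default world file path
    # Split each line on ":" and compare the first field to the atom, so
    # "kde-plasma/kwayland-server" and "kde-plasma/kwayland-server:5"
    # both match while other packages with a similar prefix do not.
    awk -F: -v atom="$atom" '$1 == atom' "$world"
}
```

Running world_entries kde-plasma/kwayland-server after the deselect should print nothing. Any line it still prints is an entry that would pull the package in again.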

Now the emerge command (with kwayland-server filtered out of the package list) reports no problems with the dependencies, and the block is satisfied:

mydesktop root # emerge -va --verbose-conflicts --verbose --backtrack=300 $(qlist -IC|grep -i kde|grep -v kwayland-server)

......

......

[ebuild N ] kde-plasma/kwin-5.25.2:5::gentoo USE="accessibility (caps) handbook lock multimedia -debug -gles2-only -plasma -screencast -test" 6,468 KiB

[uninstall ] kde-plasma/kwayland-server-5.24.3:5::gentoo USE="-debug -doc -test"

[blocks b ] kde-plasma/kwayland-server ("kde-plasma/kwayland-server" is soft blocking kde-plasma/kwin-5.25.2)

[ebuild U ] kde-plasma/libkworkspace-5.25.2:5::gentoo [5.24.3:5::gentoo] USE="-debug -test" 0 KiB

......

......

[ebuild U ] kde-apps/akregator-22.04.2:5::gentoo [21.12.3:5::gentoo] USE="handbook -debug -speech -telemetry -test" 2,209 KiB

Total: 339 packages (328 upgrades, 5 new, 6 reinstalls, 1 uninstall), Size of downloads: 1,001,483 KiB

Conflict: 1 block (all satisfied)

More on Gentoo blocking – Gentoo update tips when updating packages with blocks and masked files