At the time of writing, the latest GlusterFS release is 11 and the latest CentOS release is CentOS Stream 9.

This article shows how to build a three-node GlusterFS cluster that keeps three file replicas, using GlusterFS 11 on CentOS Stream 9. Older articles on this topic: Create and export a GlusterFS volume with NFS-Ganesha in CentOS 8, and glusterfs with localhost (127.0.0.1) nodes on different servers – glusterfs volume with 3 replicas.

Summary

The three-node replica cluster consists of:

- Three servers, each with a minimal CentOS Stream 9 installation (see Network installation of CentOS Stream 9 (20220606.0) – minimal server installation).

- The EPEL, CRB, and GlusterFS repositories installed on every system (the GlusterFS packages come from the CentOS Storage SIG community).

- A firewalld configuration that allows access to the GlusterFS service.

- A cluster volume with three file replicas. GlusterFS replicates files, not blocks (see Replicated volumes).

STEP 1) Install the additional repositories.

Three additional repositories are needed. All of them are official repositories of the CentOS community or the Fedora community (EPEL), so they tend to be stable and do not break package integrity.

```
[root@glnode1 ~]# dnf search epel
Last metadata expiration check: 0:08:37 ago on Thu 22 Jun 2023 12:22:10 PM UTC.
=================================== Name Matched: epel ===================================
epel-next-release.noarch : Extra Packages for Enterprise Linux Next repository configuration
epel-release.noarch : Extra Packages for Enterprise Linux repository configuration
[root@glnode1 ~]# dnf install -y epel-release
Last metadata expiration check: 0:08:45 ago on Thu 22 Jun 2023 12:22:10 PM UTC.
Dependencies resolved.
==========================================================================================
 Package                  Architecture     Version           Repository             Size
==========================================================================================
Installing:
 epel-release             noarch           9-2.el9           extras-common          17 k
Installing weak dependencies:
 epel-next-release        noarch           9-2.el9           extras-common         8.1 k

Transaction Summary
==========================================================================================
Install  2 Packages

Total download size: 25 k
Installed size: 26 k
Downloading Packages:
(1/2): epel-next-release-9-2.el9.noarch.rpm                 17 kB/s | 8.1 kB     00:00
(2/2): epel-release-9-2.el9.noarch.rpm                      30 kB/s |  17 kB     00:00
------------------------------------------------------------------------------------------
Total                                                       14 kB/s |  25 kB     00:01
CentOS Stream 9 - Extras packages                          833 kB/s | 2.1 kB     00:00
Importing GPG key 0x1D997668:
 Userid     : "CentOS Extras SIG (https://wiki.centos.org/SpecialInterestGroup) <security@centos.org>"
 Fingerprint: 363F C097 2F64 B699 AED3 968E 1FF6 A217 1D99 7668
 From       : /etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Extras-SHA512
Key imported successfully
Running transaction check
Transaction check succeeded.
Running transaction test
Transaction test succeeded.
Running transaction
  Preparing        :                                                              1/1
  Installing       : epel-release-9-2.el9.noarch                                  1/2
  Installing       : epel-next-release-9-2.el9.noarch                             2/2
  Running scriptlet: epel-next-release-9-2.el9.noarch                             2/2
  Verifying        : epel-next-release-9-2.el9.noarch                             1/2
  Verifying        : epel-release-9-2.el9.noarch                                  2/2

Installed:
  epel-next-release-9-2.el9.noarch                epel-release-9-2.el9.noarch

Complete!
[root@glnode1 ~]# dnf update -y
Last metadata expiration check: 0:00:10 ago on Thu 22 Jun 2023 12:31:15 PM UTC.
Dependencies resolved.
==========================================================================================
 Package                  Architecture     Version           Repository             Size
==========================================================================================
Upgrading:
 epel-next-release        noarch           9-5.el9           epel                  8.1 k
 epel-release             noarch           9-5.el9           epel                   18 k

Transaction Summary
==========================================================================================
Upgrade  2 Packages

Total download size: 27 k
Downloading Packages:
(1/2): epel-next-release-9-5.el9.noarch.rpm                181 kB/s | 8.1 kB     00:00
(2/2): epel-release-9-5.el9.noarch.rpm                     359 kB/s |  18 kB     00:00
------------------------------------------------------------------------------------------
Total                                                       31 kB/s |  27 kB     00:00
Extra Packages for Enterprise Linux 9 - x86_64             1.6 MB/s | 1.6 kB     00:00
Importing GPG key 0x3228467C:
 Userid     : "Fedora (epel9) <epel@fedoraproject.org>"
 Fingerprint: FF8A D134 4597 106E CE81 3B91 8A38 72BF 3228 467C
 From       : /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-9
Key imported successfully
Running transaction check
Transaction check succeeded.
Running transaction test
Transaction test succeeded.
Running transaction
  Preparing        :                                                              1/1
  Upgrading        : epel-release-9-5.el9.noarch                                  1/4
  Running scriptlet: epel-release-9-5.el9.noarch                                  1/4
  Upgrading        : epel-next-release-9-5.el9.noarch                             2/4
  Cleanup          : epel-next-release-9-2.el9.noarch                             3/4
  Cleanup          : epel-release-9-2.el9.noarch                                  4/4
  Running scriptlet: epel-release-9-2.el9.noarch                                  4/4
  Verifying        : epel-next-release-9-5.el9.noarch                             1/4
  Verifying        : epel-next-release-9-2.el9.noarch                             2/4
  Verifying        : epel-release-9-5.el9.noarch                                  3/4
  Verifying        : epel-release-9-2.el9.noarch                                  4/4

Upgraded:
  epel-next-release-9-5.el9.noarch                epel-release-9-5.el9.noarch

Complete!
```

The epel-release package is first installed from the official CentOS Stream 9 extras-common repository, and the following `dnf update` upgrades it (from 9-2 to 9-5) with the version from the EPEL repository itself.

Next, enable the CRB (CodeReady Linux Builder) repository. CRB replaces the PowerTools repository of CentOS 8. It is maintained by Red Hat, but its packages are not covered by Red Hat support. GlusterFS needs it: glusterfs-server later pulls python3-pyxattr from crb.

```
[root@glnode1 ~]# dnf config-manager --set-enabled crb
```
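To double-check that a repository is really enabled, the repository IDs printed by `dnf repolist --enabled` can be searched. This is a minimal sketch: `repo_enabled` is a hypothetical helper, and it assumes the usual `dnf repolist` layout of one header line followed by one `repo-id  name` line per repository.

```bash
#!/usr/bin/env bash
# repo_enabled ID: succeed if ID is a repository ID (first column) in the
# `dnf repolist --enabled` output read from stdin. The first line of that
# output is the "repo id / repo name" header, so it is skipped.
repo_enabled() {
  awk -v id="$1" 'NR > 1 && $1 == id { found = 1 } END { exit !found }'
}

# Usage on a node (hypothetical helper, not part of dnf):
#   dnf repolist --enabled | repo_enabled crb && echo "crb is enabled"
```

The same check works later for the `epel` and `centos-gluster11` repository IDs that appear in the transcripts of this article.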

Finally, install the GlusterFS repository. Four GlusterFS versions are readily available, so the administrator may also choose an older one:

```
[root@glnode1 ~]# dnf search gluster
Last metadata expiration check: 0:00:37 ago on Thu 22 Jun 2023 12:38:54 PM UTC.
============================== Name & Summary Matched: gluster ==============================
centos-release-gluster10.noarch : Gluster 10 packages from the CentOS Storage SIG repository
centos-release-gluster11.noarch : Gluster 11 packages from the CentOS Storage SIG repository
centos-release-gluster9.noarch : Gluster 9 packages from the CentOS Storage SIG repository
glusterfs-api.x86_64 : GlusterFS api library
glusterfs-cli.x86_64 : GlusterFS CLI
glusterfs-client-xlators.x86_64 : GlusterFS client-side translators
glusterfs-libs.x86_64 : GlusterFS common libraries
glusterfs-rdma.x86_64 : GlusterFS rdma support for ib-verbs
pcp-pmda-gluster.x86_64 : Performance Co-Pilot (PCP) metrics for the Gluster filesystem
python3-gluster.x86_64 : GlusterFS python library
=================================== Name Matched: gluster ===================================
glusterfs.x86_64 : Distributed File System
glusterfs-cloudsync-plugins.x86_64 : Cloudsync Plugins
glusterfs-fuse.x86_64 : Fuse client
```

The GlusterFS version shipped in the CentOS Stream 9 AppStream repository is 6.0 (6.0-57.4.el9), which does not depend on CRB or on any centos-release-gluster* repository. Note that glusterfs-server is not among the packages listed above. Three newer versions are available from the CentOS Storage SIG: 9, 10, and the latest, 11. To add the GlusterFS 11 repository, install the centos-release-gluster11 package:

```
[root@glnode1 ~]# dnf install -y centos-release-gluster11
Last metadata expiration check: 0:03:30 ago on Thu 22 Jun 2023 12:40:33 PM UTC.
Dependencies resolved.
==========================================================================================
 Package                          Architecture   Version        Repository           Size
==========================================================================================
Installing:
 centos-release-gluster11         noarch         1.0-1.el9s     extras-common       8.5 k
Installing dependencies:
 centos-release-storage-common    noarch         2-5.el9s       extras-common       8.3 k

Transaction Summary
==========================================================================================
Install  2 Packages

Total download size: 17 k
Installed size: 2.4 k
Downloading Packages:
(1/2): centos-release-storage-common-2-5.el9s.noarch.rpm    58 kB/s | 8.3 kB     00:00
(2/2): centos-release-gluster11-1.0-1.el9s.noarch.rpm       57 kB/s | 8.5 kB     00:00
------------------------------------------------------------------------------------------
Total                                                       15 kB/s |  17 kB     00:01
Running transaction check
Transaction check succeeded.
Running transaction test
Transaction test succeeded.
Running transaction
  Preparing        :                                                              1/1
  Installing       : centos-release-storage-common-2-5.el9s.noarch                1/2
  Installing       : centos-release-gluster11-1.0-1.el9s.noarch                   2/2
  Running scriptlet: centos-release-gluster11-1.0-1.el9s.noarch                   2/2
Failed to preset unit: Unit file glusterd.service does not exist.

  Verifying        : centos-release-gluster11-1.0-1.el9s.noarch                   1/2
  Verifying        : centos-release-storage-common-2-5.el9s.noarch                2/2

Installed:
  centos-release-gluster11-1.0-1.el9s.noarch    centos-release-storage-common-2-5.el9s.noarch

Complete!
[root@glnode1 ~]# dnf update -y
CentOS-9-stream - Gluster 11                                16 kB/s |  20 kB     00:01
CentOS-9-stream - Gluster 11 Testing                        36 kB/s |  34 kB     00:00
Dependencies resolved.
Nothing to do.
Complete!
```

All additional repositories are now installed and enabled, and the system is up to date.
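The same repository setup is needed on all three nodes, so it can be scripted. This is a minimal sketch, assuming root SSH access from an admin host to each node (not part of the original setup). It condenses STEP 1 into one pass with a single `dnf update -y` at the end, and `NODES`, `STEP1_CMDS`, `DRY_RUN`, `run_on`, and `setup_repos` are hypothetical names.

```bash
#!/usr/bin/env bash
# Sketch: repeat STEP 1 on every node over SSH.
# Assumption: root can SSH to each node without a password prompt.
set -euo pipefail

NODES=(glnode1 glnode2 glnode3)

# The STEP 1 commands in the order used in this article
# (one final "dnf update -y" instead of two).
STEP1_CMDS=(
  "dnf install -y epel-release"
  "dnf config-manager --set-enabled crb"
  "dnf install -y centos-release-gluster11"
  "dnf update -y"
)

# Print the SSH command when DRY_RUN=1, otherwise execute it.
run_on() {
  local node=$1 cmd=$2
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf 'ssh root@%s %s\n' "$node" "$cmd"
  else
    ssh "root@$node" "$cmd"
  fi
}

# Run every STEP 1 command on each node given as an argument.
setup_repos() {
  local node cmd
  for node in "$@"; do
    for cmd in "${STEP1_CMDS[@]}"; do
      run_on "$node" "$cmd"
    done
  done
}

# Usage:
#   DRY_RUN=1 setup_repos "${NODES[@]}"   # review the commands first
#   setup_repos "${NODES[@]}"             # then apply them
```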

STEP 2) Install GlusterFS and prepare the brick directory.

The servers' hostnames used here are glnode1, glnode2, and glnode3. Make them resolvable on every server, either through DNS or simply by adding them to the /etc/hosts file.

The /etc/hosts file is identical on all servers:

```
192.168.0.10 glnode1
192.168.0.20 glnode2
192.168.0.30 glnode3
```
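The same three entries must exist on every server, so a small idempotent helper avoids duplicate lines when the setup is repeated. This sketch assumes the IPs and names above. `ensure_host`, `add_cluster_hosts`, and the `HOSTS_FILE` override are hypothetical, and an existing entry with a different IP is left untouched rather than corrected.

```bash
#!/usr/bin/env bash
# Sketch: add the cluster entries to a hosts file only when the name is not
# already present, so the script can be re-run safely on every node.
# HOSTS_FILE defaults to /etc/hosts; point it at a copy to try it out first.
set -euo pipefail

ensure_host() {
  local file=$1 ip=$2 name=$3
  # Is the name already listed as a hostname on a non-comment line?
  if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file"; then
    return 0
  fi
  # Make sure the file ends with a newline before appending.
  if [[ -s "$file" && -n "$(tail -c 1 "$file")" ]]; then
    echo >> "$file"
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

add_cluster_hosts() {
  local file=${HOSTS_FILE:-/etc/hosts}
  ensure_host "$file" 192.168.0.10 glnode1
  ensure_host "$file" 192.168.0.20 glnode2
  ensure_host "$file" 192.168.0.30 glnode3
}

# Usage (as root on each node): add_cluster_hosts
```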

Now, install the GlusterFS software with the glusterfs-server package and its dependencies. It pulls in all the needed packages: the GlusterFS CLI, API, libraries, daemons, SELinux rules, and so on.

```
[root@glnode1 ~]# dnf install -y glusterfs-server
Last metadata expiration check: 0:21:41 ago on Thu 22 Jun 2023 12:44:13 PM UTC.
Dependencies resolved.
==========================================================================================
 Package                        Architecture  Version          Repository             Size
==========================================================================================
Installing:
 glusterfs-server               x86_64        11.0-2.el9s      centos-gluster11-test  1.2 M
Installing dependencies:
 attr                           x86_64        2.5.1-3.el9      baseos                  61 k
 checkpolicy                    x86_64        3.5-1.el9        appstream              347 k
 glusterfs                      x86_64        11.0-2.el9s      centos-gluster11-test  596 k
 glusterfs-cli                  x86_64        11.0-2.el9s      centos-gluster11-test  185 k
 glusterfs-client-xlators       x86_64        11.0-2.el9s      centos-gluster11-test  785 k
 glusterfs-fuse                 x86_64        11.0-2.el9s      centos-gluster11-test  136 k
 glusterfs-selinux              noarch        2.0.1-1.el9s     centos-gluster11        29 k
 gperftools-libs                x86_64        2.9.1-2.el9      epel                   309 k
 libgfapi0                      x86_64        11.0-2.el9s      centos-gluster11-test   95 k
 libgfchangelog0                x86_64        11.0-2.el9s      centos-gluster11-test   34 k
 libgfrpc0                      x86_64        11.0-2.el9s      centos-gluster11-test   49 k
 libgfxdr0                      x86_64        11.0-2.el9s      centos-gluster11-test   24 k
 libglusterfs0                  x86_64        11.0-2.el9s      centos-gluster11-test  286 k
 libtirpc                       x86_64        1.3.3-2.el9      baseos                  93 k
 libunwind                      x86_64        1.6.2-1.el9      epel                    67 k
 policycoreutils-python-utils   noarch        3.5-1.el9        appstream               77 k
 python3-audit                  x86_64        3.0.7-103.el9    appstream               84 k
 python3-distro                 noarch        1.5.0-7.el9      baseos                  37 k
 python3-libsemanage            x86_64        3.5-2.el9        appstream               80 k
 python3-policycoreutils        noarch        3.5-1.el9        appstream              2.1 M
 python3-pyxattr                x86_64        0.7.2-4.el9      crb                     35 k
 python3-setools                x86_64        4.4.2-2.1.el9    baseos                 600 k
 python3-setuptools             noarch        53.0.0-12.el9    baseos                 944 k
 rpcbind                        x86_64        1.2.6-5.el9      baseos                  58 k

Transaction Summary
==========================================================================================
Install  25 Packages

Total download size: 8.3 M
Installed size: 31 M
Downloading Packages:
(1/25): glusterfs-selinux-2.0.1-1.el9s.noarch.rpm             86 kB/s |  29 kB     00:00
(2/25): glusterfs-cli-11.0-2.el9s.x86_64.rpm                 177 kB/s | 185 kB     00:01
(3/25): glusterfs-11.0-2.el9s.x86_64.rpm                     524 kB/s | 596 kB     00:01
(4/25): glusterfs-client-xlators-11.0-2.el9s.x86_64.rpm      962 kB/s | 785 kB     00:00
(5/25): glusterfs-fuse-11.0-2.el9s.x86_64.rpm                660 kB/s | 136 kB     00:00
(6/25): libgfapi0-11.0-2.el9s.x86_64.rpm                     573 kB/s |  95 kB     00:00
(7/25): glusterfs-server-11.0-2.el9s.x86_64.rpm              4.4 MB/s | 1.2 MB     00:00
(8/25): libgfchangelog0-11.0-2.el9s.x86_64.rpm               177 kB/s |  34 kB     00:00
(9/25): libgfrpc0-11.0-2.el9s.x86_64.rpm                     274 kB/s |  49 kB     00:00
(10/25): libgfxdr0-11.0-2.el9s.x86_64.rpm                    145 kB/s |  24 kB     00:00
(11/25): libglusterfs0-11.0-2.el9s.x86_64.rpm                1.5 MB/s | 286 kB     00:00
(12/25): libtirpc-1.3.3-2.el9.x86_64.rpm                     440 kB/s |  93 kB     00:00
(13/25): python3-distro-1.5.0-7.el9.noarch.rpm               205 kB/s |  37 kB     00:00
(14/25): attr-2.5.1-3.el9.x86_64.rpm                         191 kB/s |  61 kB     00:00
(15/25): rpcbind-1.2.6-5.el9.x86_64.rpm                      414 kB/s |  58 kB     00:00
(16/25): python3-setools-4.4.2-2.1.el9.x86_64.rpm            3.0 MB/s | 600 kB     00:00
(17/25): python3-setuptools-53.0.0-12.el9.noarch.rpm         3.4 MB/s | 944 kB     00:00
(18/25): policycoreutils-python-utils-3.5-1.el9.noarch.rpm   329 kB/s |  77 kB     00:00
(19/25): checkpolicy-3.5-1.el9.x86_64.rpm                    1.1 MB/s | 347 kB     00:00
(20/25): python3-audit-3.0.7-103.el9.x86_64.rpm              403 kB/s |  84 kB     00:00
(21/25): python3-libsemanage-3.5-2.el9.x86_64.rpm            980 kB/s |  80 kB     00:00
(22/25): python3-pyxattr-0.7.2-4.el9.x86_64.rpm              155 kB/s |  35 kB     00:00
(23/25): python3-policycoreutils-3.5-1.el9.noarch.rpm        6.4 MB/s | 2.1 MB     00:00
(24/25): libunwind-1.6.2-1.el9.x86_64.rpm                     50 kB/s |  67 kB     00:01
(25/25): gperftools-libs-2.9.1-2.el9.x86_64.rpm              197 kB/s | 309 kB     00:01
------------------------------------------------------------------------------------------
Total                                                        1.1 MB/s | 8.3 MB     00:07
CentOS-9-stream - Gluster 11                                 1.0 MB/s | 1.0 kB     00:00
Importing GPG key 0xE451E5B5:
 Userid     : "CentOS Storage SIG (http://wiki.centos.org/SpecialInterestGroup/Storage) <security@centos.org>"
 Fingerprint: 7412 9C0B 173B 071A 3775 951A D4A2 E50B E451 E5B5
 From       : /etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Storage
Key imported successfully
Running transaction check
Transaction check succeeded.
Running transaction test
Transaction test succeeded.
Running transaction
  Preparing        :                                                              1/1
  Installing       : libtirpc-1.3.3-2.el9.x86_64                                 1/25
  Installing       : python3-setuptools-53.0.0-12.el9.noarch                     2/25
  Installing       : python3-distro-1.5.0-7.el9.noarch                           3/25
  Installing       : python3-setools-4.4.2-2.1.el9.x86_64                        4/25
  Running scriptlet: rpcbind-1.2.6-5.el9.x86_64                                  5/25
  Installing       : rpcbind-1.2.6-5.el9.x86_64                                  5/25
  Running scriptlet: rpcbind-1.2.6-5.el9.x86_64                                  5/25
Created symlink /etc/systemd/system/multi-user.target.wants/rpcbind.service → /usr/lib/systemd/system/rpcbind.service.
Created symlink /etc/systemd/system/sockets.target.wants/rpcbind.socket → /usr/lib/systemd/system/rpcbind.socket.

  Installing       : libunwind-1.6.2-1.el9.x86_64                                6/25
  Installing       : gperftools-libs-2.9.1-2.el9.x86_64                          7/25
  Installing       : libgfxdr0-11.0-2.el9s.x86_64                                8/25
  Running scriptlet: libgfxdr0-11.0-2.el9s.x86_64                                8/25
  Installing       : libglusterfs0-11.0-2.el9s.x86_64                            9/25
  Running scriptlet: libglusterfs0-11.0-2.el9s.x86_64                            9/25
  Installing       : libgfrpc0-11.0-2.el9s.x86_64                               10/25
  Running scriptlet: libgfrpc0-11.0-2.el9s.x86_64                               10/25
  Installing       : glusterfs-client-xlators-11.0-2.el9s.x86_64                11/25
  Running scriptlet: glusterfs-11.0-2.el9s.x86_64                               12/25
  Installing       : glusterfs-11.0-2.el9s.x86_64                               12/25
  Running scriptlet: glusterfs-11.0-2.el9s.x86_64                               12/25
  Installing       : libgfapi0-11.0-2.el9s.x86_64                               13/25
  Running scriptlet: libgfapi0-11.0-2.el9s.x86_64                               13/25
  Installing       : glusterfs-cli-11.0-2.el9s.x86_64                           14/25
  Installing       : libgfchangelog0-11.0-2.el9s.x86_64                         15/25
  Running scriptlet: libgfchangelog0-11.0-2.el9s.x86_64                         15/25
  Installing       : python3-pyxattr-0.7.2-4.el9.x86_64                         16/25
  Installing       : python3-libsemanage-3.5-2.el9.x86_64                       17/25
  Installing       : python3-audit-3.0.7-103.el9.x86_64                         18/25
  Installing       : checkpolicy-3.5-1.el9.x86_64                               19/25
  Installing       : python3-policycoreutils-3.5-1.el9.noarch                   20/25
  Installing       : policycoreutils-python-utils-3.5-1.el9.noarch              21/25
  Running scriptlet: glusterfs-selinux-2.0.1-1.el9s.noarch                      22/25
  Installing       : glusterfs-selinux-2.0.1-1.el9s.noarch                      22/25
  Running scriptlet: glusterfs-selinux-2.0.1-1.el9s.noarch                      22/25
libsemanage.semanage_direct_install_info: Overriding glusterd module at lower priority 100 with module at priority 200.

  Installing       : attr-2.5.1-3.el9.x86_64                                    23/25
  Installing       : glusterfs-fuse-11.0-2.el9s.x86_64                          24/25
  Installing       : glusterfs-server-11.0-2.el9s.x86_64                        25/25
  Running scriptlet: glusterfs-server-11.0-2.el9s.x86_64                        25/25
Created symlink /etc/systemd/system/multi-user.target.wants/glusterd.service → /usr/lib/systemd/system/glusterd.service.

  Running scriptlet: glusterfs-selinux-2.0.1-1.el9s.noarch                      25/25
  Running scriptlet: glusterfs-server-11.0-2.el9s.x86_64                        25/25
  Verifying        : glusterfs-selinux-2.0.1-1.el9s.noarch                       1/25
  Verifying        : glusterfs-11.0-2.el9s.x86_64                                2/25
  Verifying        : glusterfs-cli-11.0-2.el9s.x86_64                            3/25
  Verifying        : glusterfs-client-xlators-11.0-2.el9s.x86_64                 4/25
  Verifying        : glusterfs-fuse-11.0-2.el9s.x86_64                           5/25
  Verifying        : glusterfs-server-11.0-2.el9s.x86_64                         6/25
  Verifying        : libgfapi0-11.0-2.el9s.x86_64                                7/25
  Verifying        : libgfchangelog0-11.0-2.el9s.x86_64                          8/25
  Verifying        : libgfrpc0-11.0-2.el9s.x86_64                                9/25
  Verifying        : libgfxdr0-11.0-2.el9s.x86_64                               10/25
  Verifying        : libglusterfs0-11.0-2.el9s.x86_64                           11/25
  Verifying        : attr-2.5.1-3.el9.x86_64                                    12/25
  Verifying        : libtirpc-1.3.3-2.el9.x86_64                                13/25
  Verifying        : python3-distro-1.5.0-7.el9.noarch                          14/25
  Verifying        : python3-setools-4.4.2-2.1.el9.x86_64                       15/25
  Verifying        : python3-setuptools-53.0.0-12.el9.noarch                    16/25
  Verifying        : rpcbind-1.2.6-5.el9.x86_64                                 17/25
  Verifying        : checkpolicy-3.5-1.el9.x86_64                               18/25
  Verifying        : policycoreutils-python-utils-3.5-1.el9.noarch              19/25
  Verifying        : python3-audit-3.0.7-103.el9.x86_64                         20/25
  Verifying        : python3-libsemanage-3.5-2.el9.x86_64                       21/25
  Verifying        : python3-policycoreutils-3.5-1.el9.noarch                   22/25
  Verifying        : python3-pyxattr-0.7.2-4.el9.x86_64                         23/25
  Verifying        : gperftools-libs-2.9.1-2.el9.x86_64                         24/25
  Verifying        : libunwind-1.6.2-1.el9.x86_64                               25/25

Installed:
  attr-2.5.1-3.el9.x86_64                          checkpolicy-3.5-1.el9.x86_64
  glusterfs-11.0-2.el9s.x86_64                     glusterfs-cli-11.0-2.el9s.x86_64
  glusterfs-client-xlators-11.0-2.el9s.x86_64      glusterfs-fuse-11.0-2.el9s.x86_64
  glusterfs-selinux-2.0.1-1.el9s.noarch            glusterfs-server-11.0-2.el9s.x86_64
  gperftools-libs-2.9.1-2.el9.x86_64               libgfapi0-11.0-2.el9s.x86_64
  libgfchangelog0-11.0-2.el9s.x86_64               libgfrpc0-11.0-2.el9s.x86_64
  libgfxdr0-11.0-2.el9s.x86_64                     libglusterfs0-11.0-2.el9s.x86_64
  libtirpc-1.3.3-2.el9.x86_64                      libunwind-1.6.2-1.el9.x86_64
  policycoreutils-python-utils-3.5-1.el9.noarch    python3-audit-3.0.7-103.el9.x86_64
  python3-distro-1.5.0-7.el9.noarch                python3-libsemanage-3.5-2.el9.x86_64
  python3-policycoreutils-3.5-1.el9.noarch         python3-pyxattr-0.7.2-4.el9.x86_64
  python3-setools-4.4.2-2.1.el9.x86_64             python3-setuptools-53.0.0-12.el9.noarch
  rpcbind-1.2.6-5.el9.x86_64

Complete!
```

Install the package on all three servers, then enable and start the glusterd service on each of them:

```
[root@glnode1 ~]# systemctl enable glusterd
[root@glnode1 ~]# systemctl start glusterd
[root@glnode1 ~]# systemctl status glusterd
● glusterd.service - GlusterFS, a clustered file-system server
     Loaded: loaded (/usr/lib/systemd/system/glusterd.service; enabled; preset: enabled)
     Active: active (running) since Thu 2023-06-22 13:09:02 UTC; 3s ago
       Docs: man:glusterd(8)
    Process: 5682 ExecStart=/usr/sbin/glusterd -p /var/run/glusterd.pid --log-level $LOG_LEVEL $GLUSTERD_OPTIONS (code=e>
   Main PID: 5683 (glusterd)
      Tasks: 8 (limit: 23060)
     Memory: 13.5M
        CPU: 39ms
     CGroup: /system.slice/glusterd.service
             └─5683 /usr/sbin/glusterd -p /var/run/glusterd.pid --log-level INFO

Jun 22 13:09:02 srv systemd[1]: Starting GlusterFS, a clustered file-system server...
Jun 22 13:09:02 srv systemd[1]: Started GlusterFS, a clustered file-system server.
```
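The enable/start/status sequence above has to be repeated on all three nodes. This minimal sketch does it from one admin host, assuming root SSH access to every node (an assumption, not part of the original setup). `systemctl enable --now` combines the enable and start steps, and `start_glusterd_everywhere` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: enable and start glusterd on every node, then report whether the
# service is active on each one.
# Assumption: root can SSH to each node without a password prompt.
set -euo pipefail

NODES=(glnode1 glnode2 glnode3)

start_glusterd_everywhere() {
  local node state
  for node in "$@"; do
    # "enable --now" = "enable" followed by "start".
    ssh "root@$node" systemctl enable --now glusterd
    # is-active exits non-zero when the unit is not running; keep its
    # printed state ("active", "failed", ...) instead of aborting.
    state=$(ssh "root@$node" systemctl is-active glusterd) || true
    printf '%s: %s\n' "$node" "$state"
  done
}

# Usage: start_glusterd_everywhere "${NODES[@]}"
```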

Finally for this step, create the directory that GlusterFS will use for the cluster volume. In this setup, the volume uses one brick (i.e., a directory) from each server:

```
mount /mnt/storage/
mkdir -p /mnt/storage/glusterfs/brick1
```

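Here /mnt/storage is a separate filesystem set aside for GlusterFS files (mounting it with only the mount point, as above, relies on an existing /etc/fstab entry), and brick1 is the first brick directory on it. Any other path or name works just as well, but the same brick path is used on all three nodes when the volume is created in STEP 4, so run these commands on every node.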
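GlusterFS warns when a brick is placed on the root partition and refuses to create the volume unless `force` is appended to `gluster volume create`. That is one reason to keep bricks on a separate filesystem such as /mnt/storage. The sketch below is a quick pre-flight check to run on each node. It assumes the mount point path contains no spaces, and `mount_point_of` and `check_brick_dir` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: verify that a brick directory exists and is not on the root
# filesystem. Run it on every node before creating the volume.
set -euo pipefail

# Print the mount point of the filesystem containing path $1.
# `df -P` prints one line per filesystem; field 6 is the mount point
# (assuming the mount point contains no spaces).
mount_point_of() {
  df -P -- "$1" | awk 'NR == 2 { print $6 }'
}

# Return 0 if the brick is OK, 1 if it is missing, 2 if it is on "/".
check_brick_dir() {
  local dir=$1
  if [[ ! -d "$dir" ]]; then
    echo "missing brick directory: $dir" >&2
    return 1
  fi
  if [[ "$(mount_point_of "$dir")" == "/" ]]; then
    echo "brick $dir is on the root filesystem" >&2
    return 2
  fi
}

# Usage: check_brick_dir /mnt/storage/glusterfs/brick1 && echo "brick OK"
```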

STEP 3) Tune the firewall to allow GlusterFS service.

CentOS Stream 9 uses firewalld. Create a new firewalld zone, glusternodes, in which every source IP is allowed to access the GlusterFS service, and add the IPs of the three nodes as its sources. Do this on every node:

```
[root@glnode1 ~]# firewall-cmd --permanent --new-zone=glusternodes
success
[root@glnode1 ~]# firewall-cmd --permanent --zone=glusternodes --add-source=192.168.0.10
success
[root@glnode1 ~]# firewall-cmd --permanent --zone=glusternodes --add-source=192.168.0.20
success
[root@glnode1 ~]# firewall-cmd --permanent --zone=glusternodes --add-source=192.168.0.30
success
[root@glnode1 ~]# firewall-cmd --permanent --zone=glusternodes --add-service=glusterfs
success
[root@glnode1 ~]# firewall-cmd --reload
success
[root@glnode1 ~]# firewall-cmd --list-all --zone=glusternodes
glusternodes (active)
  target: default
  icmp-block-inversion: no
  interfaces:
  sources: 192.168.0.10 192.168.0.20 192.168.0.30
  services: glusterfs
  ports:
  protocols:
  forward: no
  masquerade: no
  forward-ports:
  source-ports:
  icmp-blocks:
  rich rules:
```
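The zone must be created identically on every node, so the commands can be generated from the list of node IPs and reviewed before they run. This is a sketch with a hypothetical `firewall_commands` helper, using the zone name and IPs from this article.

```bash
#!/usr/bin/env bash
# Sketch: print the firewall-cmd calls of STEP 3 for a zone and a list of
# source IPs, so the same zone can be recreated on every node.
set -euo pipefail

firewall_commands() {
  local zone=$1 ip
  shift
  echo "firewall-cmd --permanent --new-zone=$zone"
  for ip in "$@"; do
    echo "firewall-cmd --permanent --zone=$zone --add-source=$ip"
  done
  echo "firewall-cmd --permanent --zone=$zone --add-service=glusterfs"
  echo "firewall-cmd --reload"
}

# Usage on each node: review the output first, then pipe it to a shell.
#   firewall_commands glusternodes 192.168.0.10 192.168.0.20 192.168.0.30
#   firewall_commands glusternodes 192.168.0.10 192.168.0.20 192.168.0.30 | bash
```

On a node that already has the zone, `--new-zone` reports an error, so this is meant for the first setup. Also, because only the listed sources are allowed, machines other than the three nodes that mount the volume would presumably need their IPs added to the zone too.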

STEP 4) Create the 3 replicas cluster volume.

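Add the peers to the GlusterFS cluster and create the three-node replica volume. Log in to the first server, glnode1, probe the other peers with `gluster peer probe`, and then create the GlusterFS volume. Probing from one node is enough for all three to join the same trusted storage pool.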

```
[root@glnode1 ~]# gluster peer probe glnode1
peer probe: Probe on localhost not needed
[root@glnode1 ~]# gluster peer probe glnode2
peer probe: success
[root@glnode1 ~]# gluster peer probe glnode3
peer probe: success
[root@glnode1 ~]# gluster peer status
Number of Peers: 2

Hostname: glnode2
Uuid: 437874fc-9041-4792-8fa2-733b3dd77ca8
State: Peer in Cluster (Connected)

Hostname: glnode3
Uuid: c102fed3-77e9-499b-be07-dbca6313d875
State: Peer in Cluster (Connected)
```

Both remote servers are reachable and their GlusterFS service is running: the `gluster peer status` command lists the two remote nodes, glnode2 and glnode3, as connected peers.
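Peer probing and the status check can be combined into one step that fails loudly if a peer does not reach the `Peer in Cluster (Connected)` state. This is a sketch to run on glnode1. `connected_peers` and `probe_and_check` are hypothetical, and the check counts the `State:` lines of `gluster peer status` as shown above.

```bash
#!/usr/bin/env bash
# Sketch: probe the given peers from this node and verify that all of them
# are reported as connected by `gluster peer status`.
set -euo pipefail

# Count "State: Peer in Cluster (Connected)" lines in peer status (stdin).
# grep -c exits 1 when the count is 0; "|| true" keeps the printed 0.
connected_peers() {
  grep -c '^State: Peer in Cluster (Connected)' || true
}

probe_and_check() {
  local node n
  for node in "$@"; do
    gluster peer probe "$node"
  done
  n=$(gluster peer status | connected_peers)
  if (( n != $# )); then
    echo "expected $# connected peers, found $n" >&2
    return 1
  fi
  echo "all $n peers connected"
}

# Usage (on glnode1): probe_and_check glnode2 glnode3
```

A freshly probed peer may need a few seconds to show as connected, so re-running the check after a short pause is reasonable before treating it as a failure.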

Now, create the three-node replicated volume:

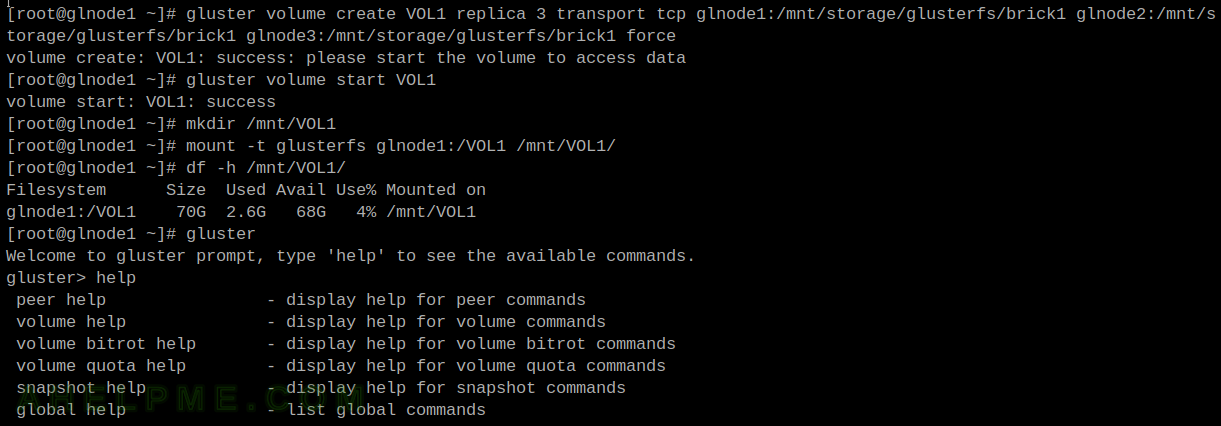

```
[root@glnode1 ~]# gluster volume create VOL1 replica 3 transport tcp glnode1:/mnt/storage/glusterfs/brick1 glnode2:/mnt/storage/glusterfs/brick1 glnode3:/mnt/storage/glusterfs/brick1
volume create: VOL1: success: please start the volume to access data
[root@glnode1 ~]# gluster volume start VOL1
volume start: VOL1: success
```

A newly created volume must be started with `gluster volume start` before it can be used for storage.
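The brick list in `gluster volume create` follows a simple `node:path` pattern, so it can be generated from the node names, with the replica count taken from the number of nodes. This sketch assumes one brick per node at the path used in this article. `BRICK_PATH`, `brick_list`, and `create_replica_volume` are hypothetical names.

```bash
#!/usr/bin/env bash
# Sketch: create and start a replicated volume with one brick per node,
# so the replica count always matches the number of bricks.
set -euo pipefail

BRICK_PATH=/mnt/storage/glusterfs/brick1

# Print one "node:path" brick per line.
brick_list() {
  local node
  for node in "$@"; do
    printf '%s:%s\n' "$node" "$BRICK_PATH"
  done
}

create_replica_volume() {
  local vol=$1
  shift
  local -a bricks
  mapfile -t bricks < <(brick_list "$@")
  # "$#" is the number of nodes left after the shift.
  gluster volume create "$vol" replica "$#" transport tcp "${bricks[@]}"
  gluster volume start "$vol"
}

# Usage (on glnode1): create_replica_volume VOL1 glnode1 glnode2 glnode3
```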

Create the mount point first (for example, with mkdir -p /mnt/VOL1), then mount the GlusterFS volume with the native client:

```
[root@glnode1 ~]# mount -t glusterfs glnode1:/VOL1 /mnt/VOL1/
[root@glnode1 ~]# df -h /mnt/VOL1/
Filesystem      Size  Used Avail Use% Mounted on
glnode1:/VOL1    70G  2.6G   68G   4% /mnt/VOL1
```

A sample /etc/fstab line:

```
glnode1:/VOL1 /mnt/VOL1 glusterfs defaults,noatime,direct-io-mode=disable 0 0
```
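The sample line fetches the volume configuration only from glnode1 at mount time, so the mount fails if glnode1 is down during boot. A variant worth considering adds two options: the native client's `backup-volfile-servers` option, naming the other nodes, and `_netdev`, marking the entry as a network filesystem. Both options are additions to the original setup. The sketch appends such a line only if nothing is mounted on /mnt/VOL1 yet. `add_gluster_fstab` and `FSTAB_FILE` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: add the GlusterFS mount to fstab once. Compared with the sample
# line above it adds two options (assumptions, adjust to taste):
#   _netdev                             network filesystem, mount after network
#   backup-volfile-servers=glnode2:...  fallback servers for the volume info
set -euo pipefail

add_gluster_fstab() {
  local fstab=${FSTAB_FILE:-/etc/fstab}
  local entry="glnode1:/VOL1 /mnt/VOL1 glusterfs defaults,_netdev,noatime,direct-io-mode=disable,backup-volfile-servers=glnode2:glnode3 0 0"
  # Skip if a non-comment line already uses /mnt/VOL1 as its mount point.
  if awk '!/^[[:space:]]*#/ && $2 == "/mnt/VOL1" { found = 1 } END { exit !found }' "$fstab"; then
    echo "an entry for /mnt/VOL1 already exists in $fstab" >&2
    return 0
  fi
  printf '%s\n' "$entry" >> "$fstab"
}

# Usage: mkdir -p /mnt/VOL1 && add_gluster_fstab && mount /mnt/VOL1
```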

In a replica-only volume, every brick holds a full copy of the data. If a node loses its network connection to the others, local applications using the mounted GlusterFS volume (or native GlusterFS clients) can keep working with the local brick until the connection comes back. That is why it is a good idea for each server to resolve its own glnode(1|2|3) name to 127.0.0.1. Keep in mind, however, that replica 3 volumes typically use client-side quorum, so writes may be refused while a node cannot reach a majority of the bricks. More on the subject here: glusterfs with localhost (127.0.0.1) nodes on different servers – glusterfs volume with 3 replicas.
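Resolving each node's own name to 127.0.0.1 comes down to a small edit of /etc/hosts on each server. This sketch assumes one `IP name` entry per node as in STEP 2 and that the node name equals the short hostname. `localize_node` is hypothetical, and comment lines are left alone.

```bash
#!/usr/bin/env bash
# Sketch: make this node resolve its own glnodeN name to 127.0.0.1 while
# leaving the entries of the other nodes untouched.
set -euo pipefail

localize_node() {
  local file=$1 name=$2 tmp
  tmp=$(mktemp)
  # Rewrite the address of every non-comment line listing $name as a host.
  awk -v n="$name" '
    /^[[:space:]]*#/ { print; next }
    {
      for (i = 2; i <= NF; i++) {
        if ($i == n) { $1 = "127.0.0.1"; break }
      }
      print
    }
  ' "$file" > "$tmp"
  # Write back through cat so the original file keeps its owner, mode,
  # and SELinux label (mv would replace it with the temporary file).
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage on each node: localize_node /etc/hosts "$(hostname -s)"
```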

Here are two handy commands for checking the newly created volume:

```
[root@glnode1 ~]# gluster volume status
Status of volume: VOL1
Gluster process                             TCP Port  RDMA Port  Online  Pid
------------------------------------------------------------------------------
Brick glnode1:/mnt/storage/glusterfs/brick1 56257     0          Y       1518
Brick glnode2:/mnt/storage/glusterfs/brick1 59593     0          Y       1429
Brick glnode3:/mnt/storage/glusterfs/brick1 52673     0          Y       1461
Self-heal Daemon on localhost               N/A       N/A        Y       1534
Self-heal Daemon on glnode3                 N/A       N/A        Y       1477
Self-heal Daemon on glnode2                 N/A       N/A        Y       1445

Task Status of Volume VOL1
------------------------------------------------------------------------------
There are no active volume tasks

[root@glnode1 ~]# gluster volume info VOL1

Volume Name: VOL1
Type: Replicate
Volume ID: 1cfc2835-408b-452b-a1a6-c29c72f7fe68
Status: Started
Snapshot Count: 0
Number of Bricks: 1 x 3 = 3
Transport-type: tcp
Bricks:
Brick1: glnode1:/mnt/storage/glusterfs/brick1
Brick2: glnode2:/mnt/storage/glusterfs/brick1
Brick3: glnode3:/mnt/storage/glusterfs/brick1
Options Reconfigured:
cluster.granular-entry-heal: on
storage.fips-mode-rchecksum: on
transport.address-family: inet
nfs.disable: on
performance.client-io-threads: off
[root@glnode1 ~]#
```

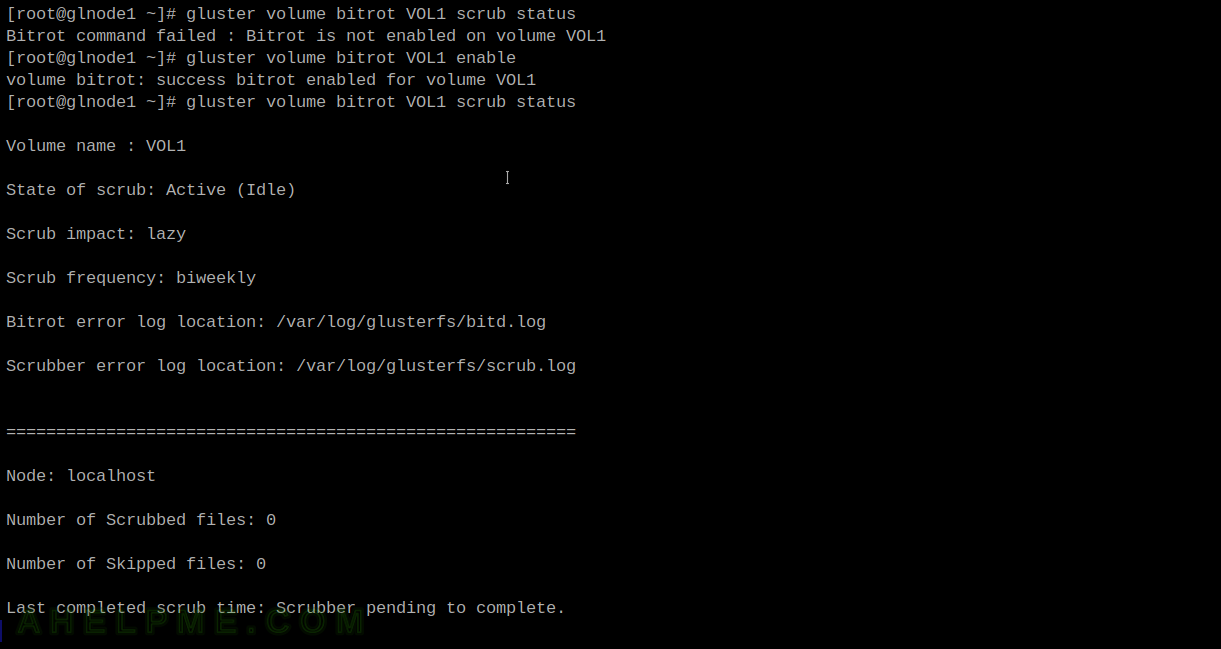
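For routine checks, the brick lines of `gluster volume status` can be reduced to a single verdict. This sketch reads the status output on stdin and relies on the column layout shown above, where the second-to-last field of each `Brick` line is the Online flag. `bricks_online` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: summarize `gluster volume status` output (stdin) as
# "<online>/<total> bricks online"; exit 1 unless every brick is online.
set -euo pipefail

bricks_online() {
  awk '$1 == "Brick" { total++; if ($(NF - 1) == "Y") up++ }
       END {
         printf "%d/%d bricks online\n", up, total
         exit !(up == total && total > 0)
       }'
}

# Usage:
#   gluster volume status VOL1 | bricks_online
#   gluster volume heal VOL1 info    # entries still pending self-heal, per brick
```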

GlusterFS bitrot

It may also be a good idea to enable GlusterFS bitrot detection, which detects silent data corruption on the bricks of the different nodes:

```
[root@glnode1 ~]# gluster volume bitrot VOL1 scrub status
Bitrot command failed : Bitrot is not enabled on volume VOL1
[root@glnode1 ~]# gluster volume bitrot VOL1 enable
volume bitrot: success bitrot enabled for volume VOL1
[root@glnode1 ~]# gluster volume bitrot VOL1 scrub status
Volume name : VOL1
State of scrub: Active (Idle)
Scrub impact: lazy
Scrub frequency: biweekly
Bitrot error log location: /var/log/glusterfs/bitd.log
Scrubber error log location: /var/log/glusterfs/scrub.log
=========================================================
Node: localhost
Number of Scrubbed files: 0
Number of Skipped files: 0
Last completed scrub time: Scrubber pending to complete.
Duration of last scrub (D:M:H:M:S): 0:0:0:0
Error count: 0
=========================================================
Node: glnode3
Number of Scrubbed files: 0
Number of Skipped files: 0
Last completed scrub time: Scrubber pending to complete.
Duration of last scrub (D:M:H:M:S): 0:0:0:0
Error count: 0
=========================================================
Node: glnode2
Number of Scrubbed files: 0
Number of Skipped files: 0
Last completed scrub time: Scrubber pending to complete.
Duration of last scrub (D:M:H:M:S): 0:0:0:0
Error count: 0
=========================================================
```
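The scrubber defaults shown above (lazy impact, biweekly frequency) can be changed per volume, and the per-node error counts can be summed for monitoring. In this sketch the chosen values are examples only, and `tune_scrubber` and `scrub_errors` are hypothetical helpers. The `scrub-frequency`, `scrub-throttle`, and `scrub ondemand` subcommands belong to the gluster CLI.

```bash
#!/usr/bin/env bash
# Sketch: tune the bitrot scrubber of a volume and total the "Error count"
# values reported for every node by the scrub status command.
set -euo pipefail

tune_scrubber() {
  local vol=$1
  # Example values, not recommendations:
  gluster volume bitrot "$vol" scrub-frequency weekly   # hourly|daily|weekly|biweekly|monthly
  gluster volume bitrot "$vol" scrub-throttle normal    # lazy|normal|aggressive
}

# Read `gluster volume bitrot VOL scrub status` output on stdin and print
# the sum of all "Error count: N" lines.
scrub_errors() {
  awk -F: '/^Error count/ { sum += $2 } END { print sum + 0 }'
}

# Usage:
#   tune_scrubber VOL1
#   gluster volume bitrot VOL1 scrub ondemand     # start a scrub now
#   gluster volume bitrot VOL1 scrub status | scrub_errors
```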