This article demonstrates how to move part of the data from one InfluxDB server to another InfluxDB sThect, the data is split by criteria to another server. The InfluxDB server is version 1.8 and the InfluxQL language is used. All useful InfluxQL queries will be included. All queries are executed in the influx command-line tool, which connects to the default InfluxDB location – http://localhost:8086. It is important to be able to connect to the InfluxDB using the influx command-line tool. Unfortunately, it is not possible to use the influxd backup command to select only certain data from a database despite it being easily selectable by a unique tag value such as the hostname of the reporting server. The whole setup is following this article Monitor and analyze with Grafana, influxdb 1.8 and collectd under CentOS Stream 9

The initial setup – get known the database scheme

There is the initial setup of the first InfluxDB server. Multiple servers (i.e. hosts) report data to this InfluxDB server and the target is to move all measurement data of a single reporting server to another InfluxDB server, which has already been accepting the new data. So moving the old data from the first InfluxDB server to the other InfluxDB server the historical data is preserved for this reporting server (i.e. hosts).

- InfluxDB database with name collectd.

[root@srv ~]# influx Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 > SHOW DATABASES name: databases name ---- _internal collectd >

It is important to show the retention policy, too. The retention policy is used to build the queries.

[root@srv ~]# influx Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 > SHOW RETENTION POLICIES ON "collectd" name duration shardGroupDuration replicaN default ---- -------- ------------------ -------- ------- default 0s 168h0m0s 1 true

The retention policy name of the database name “collectd” is “default”. Always check the retention policy, because it might be with a different name. For example, creating a database without specifying a retention policy will add a retention policy with the default name “autogen”.

- There are multiple measurements in the collectd database. Show all measurements associated with this database (i.e. collectd)

[root@srv ~]# influx Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 > SHOW MEASUREMENTS LIMIT 10 name: measurements name ---- clickhouse_value conntrack_value cpu_value dbi_value df_value disk_io_time disk_read disk_value disk_weighted_io_time disk_write

There is a limit clause – “LIMIT 10” to show only the first 10 measurements because the whole list may be too big. The limit clause could be missed to show the whole list of measurements associated with the database collectd.

Agents gather data on multiple servers and report the data to the InfluxDB server in the collectd database. The measurements include CPU, disk, network, and many more data.

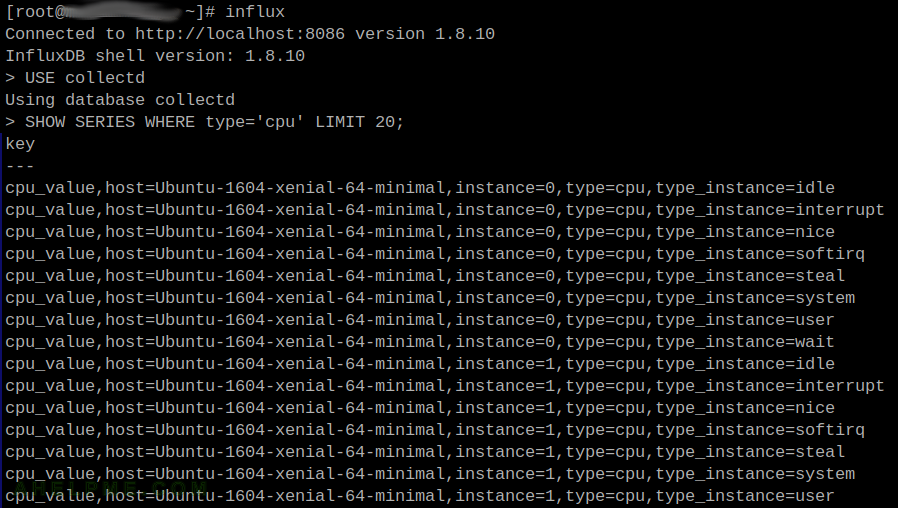

To show a little bit more information about the database scheme with the name collectd it is a good idea to print the series in the database.[root@srv ~]# influx Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 > SHOW SERIES limit 10; key --- cpu_value,host=srv100.example.com,instance=0,type=cpu,type_instance=idle cpu_value,host=srv100.example.com,instance=0,type=cpu,type_instance=interrupt cpu_value,host=srv100.example.com,instance=0,type=cpu,type_instance=nice cpu_value,host=srv100.example.com,instance=0,type=cpu,type_instance=softirq cpu_value,host=srv100.example.com,instance=0,type=cpu,type_instance=steal cpu_value,host=srv100.example.com,instance=0,type=cpu,type_instance=system cpu_value,host=srv200.example.com,instance=0,type=cpu,type_instance=user cpu_value,host=srv200.example.com,instance=0,type=cpu,type_instance=wait cpu_value,host=srv201.example.com,instance=1,type=cpu,type_instance=idle cpu_value,host=srv201.example.com,instance=1,type=cpu,type_instance=interrupt

The first column is the measurement name (i.e. cpu_value) and all after the first comma are the tags of the measurement. Each line is a unique line of measurement, tag set, and a field key.

The tag name host is very important for the purpose of this article. To backup or move all the data of reporting server with the name srv100.example.com to another database in another server the backup/dump query should include a WHERE clause with the tag name host and tag value “srv100.example.com”.

Moving all data selected by a tag value to another server

InfluxDB backup and restore functionality is specific and is explained in the process of moving the data one server to the other below and in detail in the official documents – https://docs.influxdata.com/influxdb/v1.8/administration/backup_and_restore/

The process is relatively simple:

- Create a new database

- Select all the measurements from the original database and insert them in the new database. SELECT…INSERT…WHERE clause is used.

[root@srv ~]# influx Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 > SELECT * INTO "collectd_tmp"."autogen".:MEASUREMENT FROM "collectd"."default"./.*/ where host='srv100.example.com' GROUP BY * name: result time written ---- ------- 0 485582634

Select all the measurements from the old database collectd where the tag key host has the tag value “srv100.example.com” and insert them in the database collectd_tmp. After successfully completing the query, all needed data will be in the database collectd_tmp (and because it just copies the data, it still remains in collectd, do not forget to delete it if not needed).

- Use the influxd from the command line to dump the whole new database in the disk. influxd is the actual InfluxDB server program and it includes functionality to make a snapshot of a database and saves it to the disk, and restore it to use a snapshot from the disk to rebuild an InfluxDB node or a single database.

[root@srv ~]# influxd backup -portable -db collectd_tmp /root/collectd_tmp.influxdb.dump 2023/02/03 11:17:09 backing up metastore to /root/collectd_tmp.influxdb.dump/meta.00 2023/02/03 11:17:09 backing up db=collectd_tmp 2023/02/03 11:17:09 backing up db=collectd_tmp rp=autogen shard=3121 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03121.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:09 backing up db=collectd_tmp rp=autogen shard=3122 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03122.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:10 backing up db=collectd_tmp rp=autogen shard=3123 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03123.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:10 backing up db=collectd_tmp rp=autogen shard=3124 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03124.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:11 backing up db=collectd_tmp rp=autogen shard=3125 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03125.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:11 backing up db=collectd_tmp rp=autogen shard=3126 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03126.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:11 backing up db=collectd_tmp rp=autogen shard=3127 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03127.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:12 backing up db=collectd_tmp rp=autogen shard=3128 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03128.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:12 backing up db=collectd_tmp rp=autogen shard=3129 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03129.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:13 backing up db=collectd_tmp rp=autogen shard=3130 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03130.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:13 backing up db=collectd_tmp rp=autogen shard=3131 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03131.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:14 backing up db=collectd_tmp rp=autogen shard=3132 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03132.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:15 backing up db=collectd_tmp rp=autogen shard=3133 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03133.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:15 backing up db=collectd_tmp rp=autogen shard=3134 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03134.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:16 backing up db=collectd_tmp rp=autogen shard=3135 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03135.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:17 backing up db=collectd_tmp rp=autogen shard=3136 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03136.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:17 backing up db=collectd_tmp rp=autogen shard=3137 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03137.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:17 backing up db=collectd_tmp rp=autogen shard=3138 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03138.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:18 backing up db=collectd_tmp rp=autogen shard=3139 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03139.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:18 backing up db=collectd_tmp rp=autogen shard=3140 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03140.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:19 backing up db=collectd_tmp rp=autogen shard=3141 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03141.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:19 backing up db=collectd_tmp rp=autogen shard=3142 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03142.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:20 backing up db=collectd_tmp rp=autogen shard=3143 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03143.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:20 backing up db=collectd_tmp rp=autogen shard=3144 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03144.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:21 backing up db=collectd_tmp rp=autogen shard=3145 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03145.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:21 backing up db=collectd_tmp rp=autogen shard=3146 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03146.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:22 backing up db=collectd_tmp rp=autogen shard=3147 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03147.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:22 backing up db=collectd_tmp rp=autogen shard=3148 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03148.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:23 backing up db=collectd_tmp rp=autogen shard=3149 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03149.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:23 backing up db=collectd_tmp rp=autogen shard=3150 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03150.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:24 backing up db=collectd_tmp rp=autogen shard=3151 to /root/collectd_tmp.influxdb.dump/collectd_tmp.autogen.03151.00 since 0001-01-01T00:00:00Z 2023/02/03 11:17:24 backup complete: 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.meta 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3121.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3122.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3123.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3124.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3125.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3126.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3127.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3128.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3129.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3130.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3131.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3132.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3133.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3134.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3135.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3136.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3137.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3138.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3139.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3140.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3141.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3142.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3143.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3144.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3145.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3146.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3147.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3148.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3149.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3150.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.s3151.tar.gz 2023/02/03 11:17:24 /root/collectd_tmp.influxdb.dump/20230203T111709Z.manifest

The tool backups up all shards in separate files. The snapshot is compressed with gzip.

- Use the influxd, again, to import the InfluxDB dump files into the new database on the new InfluxDB server.

[root@srv2 ~]# influxd restore -portable -db collectd_tmp /root/collectd_tmp.influxdb.dump 2023/02/03 12:05:04 Restoring shard 3133 live from backup 20230203T111709Z.s3133.tar.gz 2023/02/03 12:05:05 Restoring shard 3149 live from backup 20230203T111709Z.s3149.tar.gz 2023/02/03 12:05:06 Restoring shard 3150 live from backup 20230203T111709Z.s3150.tar.gz 2023/02/03 12:05:08 Restoring shard 3142 live from backup 20230203T111709Z.s3142.tar.gz 2023/02/03 12:05:09 Restoring shard 3122 live from backup 20230203T111709Z.s3122.tar.gz 2023/02/03 12:05:10 Restoring shard 3134 live from backup 20230203T111709Z.s3134.tar.gz 2023/02/03 12:05:12 Restoring shard 3135 live from backup 20230203T111709Z.s3135.tar.gz 2023/02/03 12:05:13 Restoring shard 3145 live from backup 20230203T111709Z.s3145.tar.gz 2023/02/03 12:05:14 Restoring shard 3148 live from backup 20230203T111709Z.s3148.tar.gz 2023/02/03 12:05:16 Restoring shard 3125 live from backup 20230203T111709Z.s3125.tar.gz 2023/02/03 12:05:17 Restoring shard 3137 live from backup 20230203T111709Z.s3137.tar.gz 2023/02/03 12:05:19 Restoring shard 3139 live from backup 20230203T111709Z.s3139.tar.gz 2023/02/03 12:05:20 Restoring shard 3138 live from backup 20230203T111709Z.s3138.tar.gz 2023/02/03 12:05:22 Restoring shard 3141 live from backup 20230203T111709Z.s3141.tar.gz 2023/02/03 12:05:23 Restoring shard 3123 live from backup 20230203T111709Z.s3123.tar.gz 2023/02/03 12:05:24 Restoring shard 3124 live from backup 20230203T111709Z.s3124.tar.gz 2023/02/03 12:05:26 Restoring shard 3126 live from backup 20230203T111709Z.s3126.tar.gz 2023/02/03 12:05:27 Restoring shard 3140 live from backup 20230203T111709Z.s3140.tar.gz 2023/02/03 12:05:28 Restoring shard 3127 live from backup 20230203T111709Z.s3127.tar.gz 2023/02/03 12:05:30 Restoring shard 3128 live from backup 20230203T111709Z.s3128.tar.gz 2023/02/03 12:05:31 Restoring shard 3129 live from backup 20230203T111709Z.s3129.tar.gz 2023/02/03 12:05:32 Restoring shard 3121 live from backup 20230203T111709Z.s3121.tar.gz 2023/02/03 12:05:33 Restoring shard 3131 live from backup 20230203T111709Z.s3131.tar.gz 2023/02/03 12:05:34 Restoring shard 3151 live from backup 20230203T111709Z.s3151.tar.gz 2023/02/03 12:05:35 Restoring shard 3143 live from backup 20230203T111709Z.s3143.tar.gz 2023/02/03 12:05:36 Restoring shard 3144 live from backup 20230203T111709Z.s3144.tar.gz 2023/02/03 12:05:38 Restoring shard 3146 live from backup 20230203T111709Z.s3146.tar.gz 2023/02/03 12:05:39 Restoring shard 3147 live from backup 20230203T111709Z.s3147.tar.gz 2023/02/03 12:05:40 Restoring shard 3130 live from backup 20230203T111709Z.s3130.tar.gz 2023/02/03 12:05:42 Restoring shard 3132 live from backup 20230203T111709Z.s3132.tar.gz 2023/02/03 12:05:43 Restoring shard 3136 live from backup 20230203T111709Z.s3136.tar.gz

First, copy the dump file to the second server and then restore the snapshotfiles into the new database on the new with the above command.

- Check the retention policies of the two databases in the new InfluxDB server. The import is also made with the help of a temporary table, but the import process creates it automatically. Do not create or use an existing table to import the disk snapshot, because the import process would fail. Use credentials if needed for influx command line tool.

[root@srv2 ~]# influx -username admin -password "11111111111111" Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 > SHOW DATABASES name: databases name ---- _internal collectd collectd_tmp > SHOW RETENTION POLICIES ON "collectd_tmp" name duration shardGroupDuration replicaN default ---- -------- ------------------ -------- ------- autogen 0s 168h0m0s 1 true > SHOW RETENTION POLICIES ON "collectd" name duration shardGroupDuration replicaN default ---- -------- ------------------ -------- ------- autogen 0s 168h0m0s 1 true

On the second server, the retention policy name is the same “autogen” for the two databases collectd and the temporary one collectd_tmp.

- Select all the measurements from the original database and insert them into the original database in the new InfluxDB server. SELECT…INSERT…WHERE clause is used. This step is needed because the influx command cannot import directly data to an existing database and preserve the existing data in it. As mentioned earlier, the reporting server has already been reporting to the new InfluxDB server, so there is editing and important data in the new InfluxDB server’s database and the real goal is to add the historical data to the existing one (not to replace or start from a new one). For more on the topic of InfluxDB backup and restore follow the above link.

[root@srv2 ~]# influx Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 SELECT * INTO "collectd".autogen.:MEASUREMENT FROM "collectd_tmp".autogen./.*/ GROUP BY * name: result time written ---- ------- 0 485582634

the result is the same written records as before.

Now, the new InfluxDB server has the historical data of all measurements of host srv100.example.com. - Delete the two collectd_tmp databases on both servers and delete the data from the old InfluxDB server because it is a move operation. If you just want to copy the data, do not follow the second part of this step about the data removal from collectd.

Execute DROP DATABASE on both servers.[root@srv ~]influx Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 > DROP DATABASE "collectd_tmp"

[root@srv2 ~]influx -username admin -password "11111111111111" Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 > DROP DATABASE "collectd_tmp"

And remove the data from the original database in the original InfluxDB server:

[root@srv ~]influx -username admin -password "11111111111111" Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 > DELETE FROM /.*/ where host='srv100.example.com' >

[root@srv ~]# influx Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 > CREATE DATABASE collectd_tmp > SHOW RETENTION POLICIES ON "collectd_tmp" name duration shardGroupDuration replicaN default ---- -------- ------------------ -------- ------- autogen 0s 168h0m0s 1 true >

The retention policy for the collectd database is with the name “default” (shown earlier) and the retention policy for the database collectd_tmp is with the name “autogen”.

Progress of the SELECT…INSERT query

Some kind of progress might be tracked by the directory occupation:

[root@srv data]# du -h -d 1 -x /var/lib/influxdb/data 2.9T /var/lib/influxdb/data/collectd 5.7G /var/lib/influxdb/data/collectd_tmp 271M /var/lib/influxdb/data/_internal 2.9T /var/lib/influxdb/data [root@srv data]# ls -altrh collectd_tmp/autogen/|tail drwxr-xr-x. 2 influxdb influxdb 4.0K Feb 2 18:56 3045 drwxr-xr-x. 2 influxdb influxdb 4.0K Feb 2 18:57 3070 drwxr-xr-x. 2 influxdb influxdb 4.0K Feb 2 18:57 3071 drwxr-xr-x. 2 influxdb influxdb 4.0K Feb 2 18:57 3073 drwxr-xr-x. 2 influxdb influxdb 4.0K Feb 2 18:57 3079 drwxr-xr-x. 2 influxdb influxdb 4.0K Feb 2 18:57 3080 drwxr-xr-x. 2 influxdb influxdb 4.0K Feb 2 18:57 3098 drwxr-xr-x. 2 influxdb influxdb 4.0K Feb 2 18:59 2802 drwxr-xr-x. 2 influxdb influxdb 4.0K Feb 2 19:03 3072 drwxr-xr-x. 2 influxdb influxdb 4.0K Feb 2 19:08 3061 [root@srv data]# du -h collectd_tmp/autogen/|tail 54M collectd_tmp/autogen/2997 54M collectd_tmp/autogen/2865 54M collectd_tmp/autogen/2973 54M collectd_tmp/autogen/2962 54M collectd_tmp/autogen/3040 54M collectd_tmp/autogen/3085 54M collectd_tmp/autogen/3004 32M collectd_tmp/autogen/2789 54M collectd_tmp/autogen/3115 17G collectd_tmp/autogen/

Troubleshooting – error about the retention policy

The most common error is related to the retention policy name because when there are two databases mentioned in a query the error does not show which database uses the wrong name. The following statement fails but it is unclear, which database name is used with the wrong retention policy – collectd or collectd_tmp?

[root@srv ~]# influx Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 > SELECT * INTO "collectd_tmp"."default".:MEASUREMENT FROM "collectd"."default"./.*/ where host='srv100.example.com' and time > now() - 1d GROUP BY * ERR: retention policy not found: default

Just show the retention policy for the two database names used in the query and then use the right names:

[root@srv ~]# influx Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 > SHOW RETENTION POLICIES ON "collectd" name duration shardGroupDuration replicaN default ---- -------- ------------------ -------- ------- default 0s 168h0m0s 1 true > SHOW RETENTION POLICIES ON "collectd_tmp" name duration shardGroupDuration replicaN default ---- -------- ------------------ -------- ------- autogen 0s 168h0m0s 1 true

And the right query is

[root@srv ~]# influx Connected to http://localhost:8086 version 1.8.10 InfluxDB shell version: 1.8.10 SELECT * INTO "collectd_tmp"."autogen".:MEASUREMENT FROM "collectd"."default"./.*/ where host='srv100.example.com' and time > now() - 1d GROUP BY *

Troubleshooting about query time out

Querying big databases and nodes may lead to a query timeout for the SELECT…INSERT…WHERE query:

SELECT * INTO "collectd_tmp"."autogen".:MEASUREMENT FROM "collectd"."default"./.*/ where host='srv100.example.com' GROUP BY * ERR: timeout

The solution is to use multiple queries with small time ranges. Note, the time is an absolute time in UTC.

SELECT * INTO "collectd_tmp"."autogen".:MEASUREMENT FROM "collectd"."default"./.*/ where host='srv100.example.com' AND time <= '2017-01-01T00:00:00.000000000Z' GROUP BY *

The DELETE query will be:

DELETE FROM /.*/ where host='srv100.example.com' AND time <= '2017-01-01T00:00:00.000000000Z'