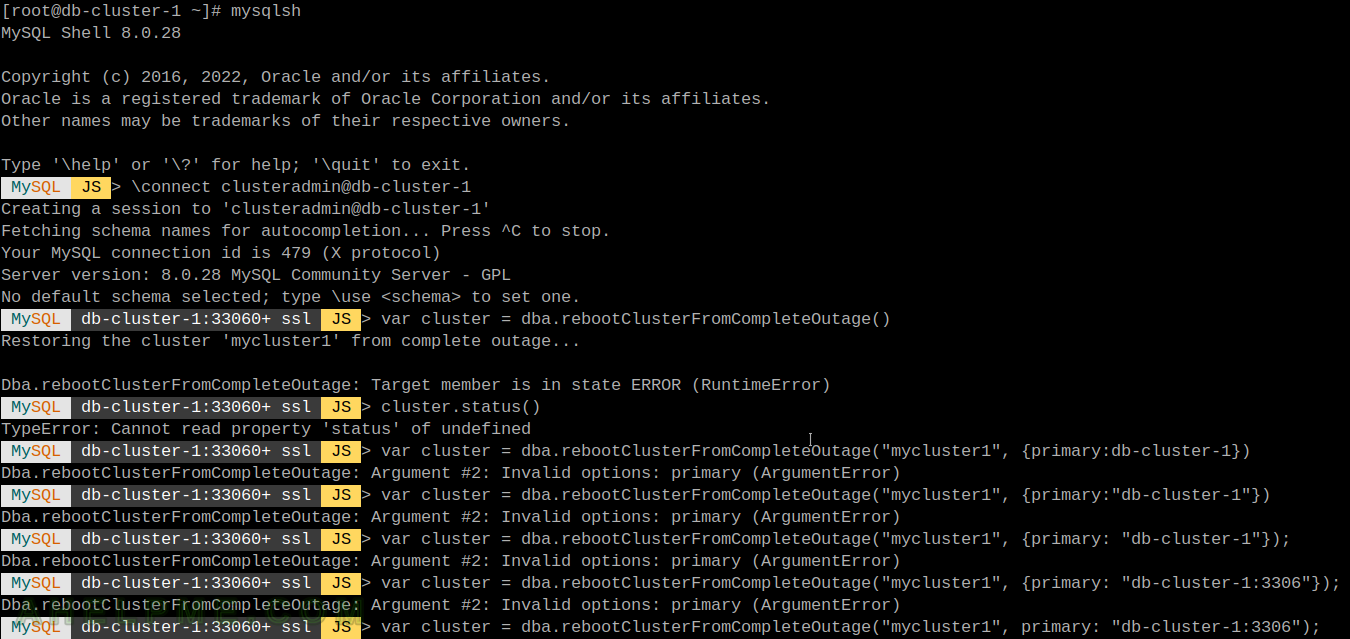

Recent versions of MySQL 8 added more options to the rebootClusterFromCompleteOutage function. Definitely check the manual linked above: most of the handy options accepted in the second argument were implemented in MySQL 8.0.30. So what happens when a MySQL InnoDB Cluster has crashed and rebootClusterFromCompleteOutage should be used, but it outputs an error like this:

MySQL db-cluster-1:33060+ ssl JS > var cluster = dba.rebootClusterFromCompleteOutage()

Restoring the cluster 'mycluster1' from complete outage...

Dba.rebootClusterFromCompleteOutage: Target member is in state ERROR (RuntimeError)

Passing the node that was healthy before the crash to this function as the primary produces an error, too:

MySQL db-cluster-1:33060+ ssl JS > var cluster = dba.rebootClusterFromCompleteOutage("mycluster1", {primary: "db-cluster-1:3306"});

Dba.rebootClusterFromCompleteOutage: Argument #2: Invalid options: primary (ArgumentError)

So no cluster is available, and the database and its data are inaccessible.

Indeed, the initial state of the cluster was really bad: before the restart, two of the three servers were missing or in a bad state.

[root@db-cluster-1 ~]# mysqlsh

MySQL Shell 8.0.28

Copyright (c) 2016, 2022, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its affiliates.

Other names may be trademarks of their respective owners.

Type '\help' or '\?' for help; '\quit' to exit.

MySQL JS > \connect clusteradmin@db-cluster-1

Creating a session to 'clusteradmin@db-cluster-1'

Fetching schema names for autocompletion... Press ^C to stop.

Your MySQL connection id is 241708346 (X protocol)

Server version: 8.0.28 MySQL Community Server - GPL

No default schema selected; type \use <schema> to set one.

MySQL db-cluster-1:33060+ ssl JS > var cluster = dba.getCluster()

MySQL db-cluster-1:33060+ ssl JS > cluster.status()

{

"clusterName": "mycluster1",

"defaultReplicaSet": {

"name": "default",

"primary": "db-cluster-1:3306",

"ssl": "REQUIRED",

"status": "OK_NO_TOLERANCE",

"statusText": "Cluster is NOT tolerant to any failures. 2 members are not active.",

"topology": {

"db-cluster-1:3306": {

"address": "db-cluster-1:3306",

"memberRole": "PRIMARY",

"mode": "R/W",

"readReplicas": {},

"replicationLag": null,

"role": "HA",

"status": "ONLINE",

"version": "8.0.28"

},

"db-cluster-2:3306": {

"address": "db-cluster-2:3306",

"instanceErrors": [

"NOTE: group_replication is stopped."

],

"memberRole": "SECONDARY",

"memberState": "OFFLINE",

"mode": "R/O",

"readReplicas": {},

"role": "HA",

"status": "(MISSING)",

"version": "8.0.28"

},

"db-cluster-3:3306": {

"address": "db-cluster-3:3306",

"instanceErrors": [

"ERROR: GR Recovery channel receiver stopped with an error: error connecting to master 'mysql_innodb_cluster_2324239842@db-cluster-1:3306' - retry-time: 60 retries: 1 message: Access denied for user 'mysql_innodb_cluster_2324239842'@'10.10.10.11' (using password: YES) (1045) at 2023-09-19 04:37:00.076960",

"ERROR: group_replication has stopped with an error."

],

"memberRole": "SECONDARY",

"memberState": "ERROR",

"mode": "R/O",

"readReplicas": {},

"role": "HA",

"status": "(MISSING)",

"version": "8.0.28"

}

},

"topologyMode": "Single-Primary"

},

"groupInformationSourceMember": "db-cluster-1:3306"

}
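
A status output this long is easy to misread, so here is a minimal sketch of a helper that lists only the members that are not ONLINE. The helper name unhealthyMembers is hypothetical, and it assumes the result of cluster.status() can be walked like a plain JavaScript object. console.log is used so the sketch also runs under Node.js; inside mysqlsh, println may be needed instead.

```javascript
// Sketch: list the members that are not ONLINE in a cluster.status() result.
// Assumption: `status` behaves like a plain JavaScript object.
function unhealthyMembers(status) {
  const topology = status.defaultReplicaSet.topology;
  return Object.keys(topology)
    .filter((name) => topology[name].status !== "ONLINE")
    .map((name) => ({
      address: name,
      status: topology[name].status,
      memberState: topology[name].memberState || null,
      errors: topology[name].instanceErrors || [],
    }));
}

// Trimmed copy of the status output above.
const sampleStatus = {
  defaultReplicaSet: {
    topology: {
      "db-cluster-1:3306": { status: "ONLINE" },
      "db-cluster-2:3306": {
        status: "(MISSING)",
        memberState: "OFFLINE",
        instanceErrors: ["NOTE: group_replication is stopped."],
      },
      "db-cluster-3:3306": {
        status: "(MISSING)",
        memberState: "ERROR",
        instanceErrors: ["ERROR: group_replication has stopped with an error."],
      },
    },
  },
};

unhealthyMembers(sampleStatus).forEach((m) =>
  console.log(m.address + " " + m.memberState)
);
// db-cluster-2:3306 OFFLINE
// db-cluster-3:3306 ERROR
```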

The problem here is that the installed MySQL (and MySQL Shell) version is 8.0.28, while MySQL 8.0.30 and later accept many more options in the second argument of rebootClusterFromCompleteOutage, including which server should be considered the primary and therefore the healthy one. In fact, the updated rebootClusterFromCompleteOutage in MySQL 8.0.34 even auto-detected the correct, healthy node and booted the MySQL InnoDB Cluster.
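
The version requirement can be checked before calling the function. The following is only a sketch in plain JavaScript: the helper names compareVersions and supportsPrimaryOption are hypothetical, the 8.0.30 threshold is the one mentioned in this article, and the version string is assumed to be typed in by hand (for example from the "MySQL Shell 8.0.28" banner).

```javascript
// Compare dotted version strings such as "8.0.28" and "8.0.30".
// Returns -1, 0 or 1, like a sort comparator.
function compareVersions(a, b) {
  const pa = a.split(".").map(Number);
  const pb = b.split(".").map(Number);
  for (let i = 0; i < Math.max(pa.length, pb.length); i++) {
    const diff = (pa[i] || 0) - (pb[i] || 0);
    if (diff !== 0) return diff < 0 ? -1 : 1;
  }
  return 0;
}

// Assumption taken from the article: the `primary` option is accepted
// starting with version 8.0.30.
function supportsPrimaryOption(version) {
  return compareVersions(version, "8.0.30") >= 0;
}

console.log(supportsPrimaryOption("8.0.28")); // false
console.log(supportsPrimaryOption("8.0.34")); // true
```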

There were no problems with the update from MySQL 8.0.28 to MySQL 8.0.34, and once MySQL 8.0.34 had started, rebootClusterFromCompleteOutage reconfigured and started the cluster with the healthy server auto-detected. Still, it is safer to use the second argument and name the healthy server explicitly with the option {primary: "db-cluster-1:3306"}.
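
As a minimal sketch of that safer call, the wrapper below passes the healthy node explicitly. The function name rebootWithPrimary is hypothetical; the cluster name, the node address and the primary option are the ones used in this article, and the dba object is passed in as a parameter only so the sketch can be tried outside MySQL Shell with a stand-in object.

```javascript
// Hypothetical wrapper: reboot the cluster and name the healthy node explicitly.
// `dbaApi` is expected to be the global `dba` object of MySQL Shell 8.0.30 or later.
function rebootWithPrimary(dbaApi, clusterName, primaryAddress) {
  return dbaApi.rebootClusterFromCompleteOutage(clusterName, {
    primary: primaryAddress,
  });
}

// Usage inside mysqlsh (JS mode), connected to the healthy node:
//   var cluster = rebootWithPrimary(dba, "mycluster1", "db-cluster-1:3306");
```

On an older Shell such as 8.0.28 this call fails with the "Invalid options: primary" error shown earlier.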

[root@db-cluster-1 ~]# mysqlsh

MySQL Shell 8.0.34

Copyright (c) 2016, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its affiliates.

Other names may be trademarks of their respective owners.

Type '\help' or '\?' for help; '\quit' to exit.

Creating a Classic session to 'root@localhost'

Fetching schema names for auto-completion... Press ^C to stop.

Your MySQL connection id is 162

Server version: 8.0.34 MySQL Community Server - GPL

No default schema selected; type \use <schema> to set one.

MySQL localhost JS > \connect clusteradmin@db-cluster-1

Creating a session to 'clusteradmin@db-cluster-1'

Fetching schema names for auto-completion... Press ^C to stop.

Closing old connection...

Your MySQL connection id is 171 (X protocol)

Server version: 8.0.34 MySQL Community Server - GPL

No default schema selected; type \use <schema> to set one.

MySQL db-cluster-1:33060+ ssl JS > var cluster = dba.getCluster()

Dba.getCluster: This function is not available through a session to a standalone instance (metadata exists, instance belongs to that metadata, but GR is not active) (MYSQLSH 51314)

MySQL db-cluster-1:33060+ ssl JS > dba.rebootClusterFromCompleteOutage();

Restoring the Cluster 'mycluster1' from complete outage...

Cluster instances: 'db-cluster-1:3306' (OFFLINE), 'db-cluster-2:3306' (OFFLINE), 'db-cluster-3:3306' (ERROR)

Stopping Group Replication on 'db-cluster-3:3306'...

Waiting for instances to apply pending received transactions...

Validating instance configuration at db-cluster-1:3306...

This instance reports its own address as db-cluster-1:3306

Instance configuration is suitable.

* Waiting for seed instance to become ONLINE...

db-cluster-1:3306 was restored.

Validating instance configuration at db-cluster-2:3306...

This instance reports its own address as db-cluster-2:3306

Instance configuration is suitable.

Rejoining instance 'db-cluster-2:3306' to cluster 'mycluster1'...

ERROR: Unable to start Group Replication for instance 'db-cluster-2:3306'.

The MySQL error_log contains the following messages:

2023-10-02 18:35:25.041820 [System] [MY-013587] Plugin group_replication reported: 'Plugin 'group_replication' is starting.'

2023-10-02 18:35:25.043811 [System] [MY-011565] Plugin group_replication reported: 'Setting super_read_only=ON.'

2023-10-02 18:35:25.053139 [System] [MY-010597] 'CHANGE REPLICATION SOURCE TO FOR CHANNEL 'group_replication_applier' executed'. Previous state source_host='<NULL>', source_port= 0, source_log_file='', source_log_pos= 4, source_bind=''. New state source_host='<NULL>', source_port= 0, source_log_file='', source_log_pos= 4, source_bind=''.

2023-10-02 18:35:25.176192 [Error] [MY-011735] Plugin group_replication reported: '[GCS] Error on opening a connection to peer node db-cluster-1:33061 when joining a group. My local port is: 33061.'

2023-10-02 18:35:25.182734 [Error] [MY-011735] Plugin group_replication reported: '[GCS] Error on opening a connection to peer node db-cluster-3:33061 when joining a group. My local port is: 33061.'

2023-10-02 18:35:25.188666 [Error] [MY-011735] Plugin group_replication reported: '[GCS] Error on opening a connection to peer node db-cluster-1:33061 when joining a group. My local port is: 33061.'

2023-10-02 18:35:25.192044 [Error] [MY-011735] Plugin group_replication reported: '[GCS] Error on opening a connection to peer node db-cluster-3:33061 when joining a group. My local port is: 33061.'

2023-10-02 18:35:25.198458 [Error] [MY-011735] Plugin group_replication reported: '[GCS] Error on opening a connection to peer node db-cluster-1:33061 when joining a group. My local port is: 33061.'

2023-10-02 18:35:25.201957 [Error] [MY-011735] Plugin group_replication reported: '[GCS] Error on opening a connection to peer node db-cluster-3:33061 when joining a group. My local port is: 33061.'

2023-10-02 18:35:25.208846 [Error] [MY-011735] Plugin group_replication reported: '[GCS] Error on opening a connection to peer node db-cluster-1:33061 when joining a group. My local port is: 33061.'

2023-10-02 18:35:25.216387 [Error] [MY-011735] Plugin group_replication reported: '[GCS] Error on opening a connection to peer node db-cluster-3:33061 when joining a group. My local port is: 33061.'

......

......

2023-10-02 18:36:13.416496 [Error] [MY-011735] Plugin group_replication reported: '[GCS] Error connecting to all peers. Member join failed. Local port: 33061'

2023-10-02 18:36:13.515072 [Error] [MY-011735] Plugin group_replication reported: '[GCS] The member was unable to join the group. Local port: 33061'

2023-10-02 18:36:25.071036 [Error] [MY-011640] Plugin group_replication reported: 'Timeout on wait for view after joining group'

2023-10-02 18:36:25.072967 [Error] [MY-011735] Plugin group_replication reported: '[GCS] The member is leaving a group without being on one.'

ERROR: RuntimeError: Group Replication failed to start: MySQL Error 3092 (HY000): db-cluster-2:3306: The server is not configured properly to be an active member of the group. Please see more details on error log.

WARNING: db-cluster-2:3306: RuntimeError: Group Replication failed to start: MySQL Error 3092 (HY000): db-cluster-2:3306: The server is not configured properly to be an active member of the group. Please see more details on error log.

NOTE: Unable to rejoin instance 'db-cluster-2:3306' to the Cluster but the dba.rebootClusterFromCompleteOutage() operation will continue.

ERROR: Cannot join instance 'db-cluster-3:3306' to cluster: instance version is incompatible with the cluster.

ERROR: Instance version '8.0.28' cannot be lower than the cluster lowest version '8.0.34'.

ERROR: Instance version '8.0.28' cannot be lower than the cluster lowest version '8.0.34'.

Dba.rebootClusterFromCompleteOutage: Instance version '8.0.28' cannot be lower than the cluster lowest version '8.0.34'. (RuntimeError)

So the cluster mycluster1 is up with only one healthy node, db-cluster-1, which is the one used by the software. The other two nodes are in a bad state (MISSING and OFFLINE).

[root@db-cluster-1 ~]# mysqlsh

MySQL Shell 8.0.34

Copyright (c) 2016, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its affiliates.

Other names may be trademarks of their respective owners.

Type '\help' or '\?' for help; '\quit' to exit.

Creating a Classic session to 'root@localhost'

Fetching schema names for auto-completion... Press ^C to stop.

Your MySQL connection id is 162

Server version: 8.0.34 MySQL Community Server - GPL

No default schema selected; type \use <schema> to set one.

MySQL localhost JS > \connect clusteradmin@db-cluster-1

Creating a session to 'clusteradmin@db-cluster-1'

Fetching schema names for auto-completion... Press ^C to stop.

Closing old connection...

Your MySQL connection id is 171 (X protocol)

Server version: 8.0.34 MySQL Community Server - GPL

No default schema selected; type \use <schema> to set one.

MySQL db-cluster-1:33060+ ssl JS > var cluster = dba.getCluster()

MySQL db-cluster-1:33060+ ssl JS > cluster.status()

{

"clusterName": "mycluster1",

"defaultReplicaSet": {

"clusterErrors": [

"WARNING: Cluster's transaction size limit is not registered in the metadata. Use cluster.rescan() to update the metadata."

],

"name": "default",

"primary": "db-cluster-1:3306",

"ssl": "REQUIRED",

"status": "OK_NO_TOLERANCE_PARTIAL",

"statusText": "Cluster is NOT tolerant to any failures. 2 members are not active.",

"topology": {

"db-cluster-1:3306": {

"address": "db-cluster-1:3306",

"instanceErrors": [

"WARNING: Detected unused recovery accounts: mysql_innodb_cluster_3477794189, mysql_innodb_cluster_479920769. Use Cluster.rescan() to clean up."

],

"memberRole": "PRIMARY",

"mode": "R/W",

"readReplicas": {},

"replicationLag": "applier_queue_applied",

"role": "HA",

"status": "ONLINE",

"version": "8.0.34"

},

"db-cluster-2:3306": {

"address": "db-cluster-2:3306",

"instanceErrors": [

"NOTE: group_replication is stopped."

],

"memberRole": "SECONDARY",

"memberState": "OFFLINE",

"mode": "n/a",

"readReplicas": {},

"role": "HA",

"status": "(MISSING)",

"version": "8.0.34"

},

"db-cluster-3:3306": {

"address": "db-cluster-3:3306",

"instanceErrors": [

"NOTE: group_replication is stopped."

],

"memberRole": "SECONDARY",

"memberState": "OFFLINE",

"mode": "n/a",

"readReplicas": {},

"role": "HA",

"status": "(MISSING)",

"version": "8.0.28"

}

},

"topologyMode": "Single-Primary"

},

"groupInformationSourceMember": "db-cluster-1:3306"

}
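
The version mismatch that blocked db-cluster-3 is visible in this status output. As a rough sketch, a helper like the one below could point out members that report a lower version than the primary. The names versionLess and outdatedMembers are hypothetical, and the status result is assumed to behave like a plain JavaScript object.

```javascript
// Return true when dotted version `a` is lower than `b` ("8.0.28" < "8.0.34").
function versionLess(a, b) {
  const pa = a.split(".").map(Number);
  const pb = b.split(".").map(Number);
  for (let i = 0; i < Math.max(pa.length, pb.length); i++) {
    const x = pa[i] || 0;
    const y = pb[i] || 0;
    if (x !== y) return x < y;
  }
  return false;
}

// List the members whose reported version is lower than the primary's version.
// Members without a version field are skipped.
function outdatedMembers(status) {
  const rs = status.defaultReplicaSet;
  const primaryVersion = rs.topology[rs.primary].version;
  return Object.keys(rs.topology).filter((name) => {
    const version = rs.topology[name].version;
    return typeof version === "string" && versionLess(version, primaryVersion);
  });
}

// Trimmed copy of the status output above.
const statusAfterReboot = {
  defaultReplicaSet: {
    primary: "db-cluster-1:3306",
    topology: {
      "db-cluster-1:3306": { version: "8.0.34" },
      "db-cluster-2:3306": { version: "8.0.34" },
      "db-cluster-3:3306": { version: "8.0.28" },
    },
  },
};

console.log(outdatedMembers(statusAfterReboot).join(", ")); // db-cluster-3:3306
```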

Next, the rescan command is used to remove the bad nodes from the cluster metadata, because it is easy to just reset the other two to an initial state (remove the MySQL data directory and restart the server so it is repopulated with an initial state) and clone them from the healthy one (with cluster.addInstance).

[root@db-cluster-1 ~]# mysqlsh

MySQL Shell 8.0.34

Copyright (c) 2016, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its affiliates.

Other names may be trademarks of their respective owners.

Type '\help' or '\?' for help; '\quit' to exit.

Creating a Classic session to 'root@localhost'

Fetching schema names for auto-completion... Press ^C to stop.

Your MySQL connection id is 162

Server version: 8.0.34 MySQL Community Server - GPL

No default schema selected; type \use <schema> to set one.

MySQL localhost JS > \connect clusteradmin@db-cluster-1

Creating a session to 'clusteradmin@db-cluster-1'

Fetching schema names for auto-completion... Press ^C to stop.

Closing old connection...

Your MySQL connection id is 171 (X protocol)

Server version: 8.0.34 MySQL Community Server - GPL

No default schema selected; type \use <schema> to set one.

MySQL db-cluster-1:33060+ ssl JS > var cluster = dba.getCluster()

MySQL db-cluster-1:33060+ ssl JS > cluster.rescan()

Rescanning the cluster...

Result of the rescanning operation for the 'mycluster1' cluster:

{

"name": "mycluster1",

"newTopologyMode": null,

"newlyDiscoveredInstances": [],

"unavailableInstances": [

{

"host": "db-cluster-2:3306",

"label": "db-cluster-2:3306",

"member_id": "05b6c7c7-f285-11ec-adfc-00163e0b38ff"

},

{

"host": "db-cluster-3:3306",

"label": "db-cluster-3:3306",

"member_id": "99856952-90ae-11ec-9a5f-fafd8f1acc17"

}

],

"updatedInstances": []

}

The instance 'db-cluster-2:3306' is no longer part of the cluster.

The instance is either offline or left the HA group. You can try to add it to the cluster again with the cluster.rejoinInstance('db-cluster-2:3306') command or you can remove it from the cluster configuration.

Would you like to remove it from the cluster metadata? [Y/n]: Y

Removing instance from the cluster metadata...

The instance 'db-cluster-2:3306' was successfully removed from the cluster metadata.

The instance 'db-cluster-3:3306' is no longer part of the cluster.

The instance is either offline or left the HA group. You can try to add it to the cluster again with the cluster.rejoinInstance('db-cluster-3:3306') command or you can remove it from the cluster configuration.

Would you like to remove it from the cluster metadata? [Y/n]: Y

Removing instance from the cluster metadata...

The instance 'db-cluster-3:3306' was successfully removed from the cluster metadata.

Updating group_replication_transaction_size_limit in the Cluster's metadata...

Dropping unused recovery account: 'mysql_innodb_cluster_3045460656'@'%'

Dropping unused recovery account: 'mysql_innodb_cluster_3477794189'@'%'

Dropping unused recovery account: 'mysql_innodb_cluster_479920769'@'%'

MySQL db-cluster-1:33060+ ssl JS > cluster.status()

{

"clusterName": "mycluster1",

"defaultReplicaSet": {

"name": "default",

"primary": "db-cluster-1:3306",

"ssl": "REQUIRED",

"status": "OK_NO_TOLERANCE",

"statusText": "Cluster is NOT tolerant to any failures.",

"topology": {

"db-cluster-1:3306": {

"address": "db-cluster-1:3306",

"memberRole": "PRIMARY",

"mode": "R/W",

"readReplicas": {},

"replicationLag": "applier_queue_applied",

"role": "HA",

"status": "ONLINE",

"version": "8.0.34"

}

},

"topologyMode": "Single-Primary"

},

"groupInformationSourceMember": "db-cluster-1:3306"

}

Now, the cluster has only one member.

Recover the other two nodes by removing all the MySQL data from the datadir (in this case /var/lib/mysql) and restarting the service. Then change the root password (a temporary one is written to /var/log/mysqld.log) and add the management user.

[root@db-cluster-3 ~]# dnf update -y

[root@db-cluster-3 ~]# rm -R /var/lib/mysql/*

[root@db-cluster-3 ~]# systemctl restart mysqld

[root@db-cluster-3 ~]# grep "temporary password" /var/log/mysqld.log

2023-10-02T20:45:58.322340Z 6 [Note] [MY-010454] [Server] A temporary password is generated for root@localhost: Jn8Dfi*L?;MT

[root@db-cluster-3 ~]# mysql -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 11

Server version: 8.0.34

Copyright (c) 2000, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> ALTER USER 'root'@'localhost' IDENTIFIED BY '11111111111';

Query OK, 0 rows affected (0.01 sec)

[root@db-cluster-3 ~]# mysqlsh

MySQL Shell 8.0.34

Copyright (c) 2016, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its affiliates.

Other names may be trademarks of their respective owners.

Type '\help' or '\?' for help; '\quit' to exit.

Creating a Classic session to 'root@localhost'

Fetching schema names for auto-completion... Press ^C to stop.

Your MySQL connection id is 12

Server version: 8.0.34 MySQL Community Server - GPL

No default schema selected; type \use <schema> to set one.

MySQL localhost JS > dba.configureInstance("root@localhost")

Configuring local MySQL instance listening at port 3306 for use in an InnoDB cluster...

This instance reports its own address as db-cluster-3:3306

Clients and other cluster members will communicate with it through this address by default. If this is not correct, the report_host MySQL system variable should be changed.

ERROR: User 'root' can only connect from 'localhost'. New account(s) with proper source address specification to allow remote connection from all instances must be created to manage the cluster.

1) Create remotely usable account for 'root' with same grants and password

2) Create a new admin account for InnoDB cluster with minimal required grants

3) Ignore and continue

4) Cancel

Please select an option [1]: 2

Please provide an account name (e.g: icroot@%) to have it created with the necessary

privileges or leave empty and press Enter to cancel.

Account Name: clusteradmin@%

Password for new account: ********************

Confirm password: ********************

applierWorkerThreads will be set to the default value of 4.

NOTE: Some configuration options need to be fixed:

+----------------------------------------+---------------+----------------+--------------------------------------------------+

| Variable | Current Value | Required Value | Note |

+----------------------------------------+---------------+----------------+--------------------------------------------------+

| binlog_transaction_dependency_tracking | COMMIT_ORDER | WRITESET | Update the server variable |

| enforce_gtid_consistency | OFF | ON | Update read-only variable and restart the server |

| gtid_mode | OFF | ON | Update read-only variable and restart the server |

| server_id | 1 | <unique ID> | Update read-only variable and restart the server |

+----------------------------------------+---------------+----------------+--------------------------------------------------+

Some variables need to be changed, but cannot be done dynamically on the server.

Do you want to perform the required configuration changes? [y/n]: y

Do you want to restart the instance after configuring it? [y/n]: y

Creating user clusteradmin@%.

Account clusteradmin@% was successfully created.

Configuring instance...

The instance 'db-cluster-3:3306' was configured to be used in an InnoDB cluster.

Restarting MySQL...

NOTE: MySQL server at db-cluster-3:3306 was restarted.
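
The answers given to the prompts above can probably be supplied up front through the options of dba.configureInstance. This is only a sketch: the helper names are hypothetical, the clusterAdmin, clusterAdminPassword and restart options should be checked against the documentation of the installed MySQL Shell, and the Shell may still ask for confirmation depending on its interactive settings.

```javascript
// Hypothetical helper: build the options for dba.configureInstance().
// Assumption: clusterAdmin, clusterAdminPassword and restart are accepted
// by the installed MySQL Shell version.
function clusterAdminOptions(account, password) {
  return {
    clusterAdmin: account,
    clusterAdminPassword: password,
    restart: true,
  };
}

// Hypothetical wrapper; `dbaApi` is the global `dba` object inside mysqlsh.
function configureWithAdmin(dbaApi, target, account, password) {
  return dbaApi.configureInstance(target, clusterAdminOptions(account, password));
}

// Usage inside mysqlsh (JS mode) on the freshly initialized node,
// with a real password instead of the placeholder:
//   configureWithAdmin(dba, "root@localhost", "'clusteradmin'@'%'", "<password>");
```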

And then add it to the cluster:

[root@db-cluster-1 ~]# mysqlsh

MySQL Shell 8.0.34

Copyright (c) 2016, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its affiliates.

Other names may be trademarks of their respective owners.

Type '\help' or '\?' for help; '\quit' to exit.

Creating a Classic session to 'root@localhost'

Fetching schema names for auto-completion... Press ^C to stop.

Your MySQL connection id is 48760

Server version: 8.0.34 MySQL Community Server - GPL

No default schema selected; type \use <schema> to set one.

MySQL localhost JS > \connect clusteradmin@db-cluster-1

Creating a session to 'clusteradmin@db-cluster-1'

Fetching schema names for auto-completion... Press ^C to stop.

Closing old connection...

Your MySQL connection id is 48826 (X protocol)

Server version: 8.0.34 MySQL Community Server - GPL

No default schema selected; type \use <schema> to set one.

MySQL db-cluster-1:33060+ ssl JS > var cluster = dba.getCluster()

MySQL db-cluster-1:33060+ ssl JS > cluster.status()

{

"clusterName": "mycluster1",

"defaultReplicaSet": {

"name": "default",

"primary": "db-cluster-1:3306",

"ssl": "REQUIRED",

"status": "OK_NO_TOLERANCE",

"statusText": "Cluster is NOT tolerant to any failures.",

"topology": {

"db-cluster-1:3306": {

"address": "db-cluster-1:3306",

"memberRole": "PRIMARY",

"mode": "R/W",

"readReplicas": {},

"replicationLag": "applier_queue_applied",

"role": "HA",

"status": "ONLINE",

"version": "8.0.34"

}

},

"topologyMode": "Single-Primary"

},

"groupInformationSourceMember": "db-cluster-1:3306"

}

MySQL db-cluster-1:33060+ ssl JS > cluster.addInstance('db-cluster-3:3306')

NOTE: A GTID set check of the MySQL instance at 'db-cluster-3:3306' determined that it is missing transactions that were purged from all cluster members.

NOTE: The target instance 'db-cluster-3:3306' has not been pre-provisioned (GTID set is empty). The Shell is unable to determine whether the instance has pre-existing data that would be overwritten with clone based recovery.

The safest and most convenient way to provision a new instance is through automatic clone provisioning, which will completely overwrite the state of 'db-cluster-3:3306' with a physical snapshot from an existing cluster member. To use this method by default, set the 'recoveryMethod' option to 'clone'.

Please select a recovery method [C]lone/[A]bort (default Clone): C

Validating instance configuration at db-cluster-3:3306...

This instance reports its own address as db-cluster-3:3306

Instance configuration is suitable.

NOTE: Group Replication will communicate with other members using 'db-cluster-3:33061'. Use the localAddress option to override.

* Checking connectivity and SSL configuration...

A new instance will be added to the InnoDB Cluster. Depending on the amount of

data on the cluster this might take from a few seconds to several hours.

Adding instance to the cluster...

Monitoring recovery process of the new cluster member. Press ^C to stop monitoring and let it continue in background.

Clone based state recovery is now in progress.

NOTE: A server restart is expected to happen as part of the clone process. If the

server does not support the RESTART command or does not come back after a

while, you may need to manually start it back.

* Waiting for clone to finish...

NOTE: db-cluster-3:3306 is being cloned from db-cluster-1:3306

** Stage DROP DATA: Completed

** Clone Transfer

FILE COPY ############################################################ 100% Completed

PAGE COPY ############################################################ 100% Completed

REDO COPY ############################################################ 100% Completed

NOTE: db-cluster-3:3306 is shutting down...

* Waiting for server restart... ready

* db-cluster-3:3306 has restarted, waiting for clone to finish...

** Stage RESTART: Completed

* Clone process has finished: 438.41 MB transferred in 5 sec (87.68 MB/s)

State recovery already finished for 'db-cluster-3:3306'

The instance 'db-cluster-3:3306' was successfully added to the cluster.

MySQL db-cluster-1:33060+ ssl JS > cluster.status()

{

"clusterName": "mycluster1",

"defaultReplicaSet": {

"name": "default",

"primary": "db-cluster-1:3306",

"ssl": "REQUIRED",

"status": "OK_NO_TOLERANCE",

"statusText": "Cluster is NOT tolerant to any failures.",

"topology": {

"db-cluster-1:3306": {

"address": "db-cluster-1:3306",

"memberRole": "PRIMARY",

"mode": "R/W",

"readReplicas": {},

"replicationLag": "applier_queue_applied",

"role": "HA",

"status": "ONLINE",

"version": "8.0.34"

},

"db-cluster-3:3306": {

"address": "db-cluster-3:3306",

"memberRole": "SECONDARY",

"mode": "R/O",

"readReplicas": {},

"replicationLag": "applier_queue_applied",

"role": "HA",

"status": "ONLINE",

"version": "8.0.34"

}

},

"topologyMode": "Single-Primary"

},

"groupInformationSourceMember": "db-cluster-1:3306"

}
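
The recovery method prompt seen during addInstance can be answered in advance. As the Shell output itself suggests, the recoveryMethod option can be set to clone. Here is a minimal sketch with a hypothetical wrapper name (addWithClone); the cluster object is passed in so the sketch can be tried with a stand-in object outside MySQL Shell.

```javascript
// Hypothetical wrapper: add an instance and select clone-based recovery
// up front instead of answering the [C]lone/[A]bort prompt.
function addWithClone(cluster, address) {
  return cluster.addInstance(address, { recoveryMethod: "clone" });
}

// Usage inside mysqlsh (JS mode), connected to the healthy node:
//   var cluster = dba.getCluster();
//   addWithClone(cluster, "db-cluster-2:3306");
```

Clone recovery overwrites everything on the target instance, so it only fits nodes that were reset on purpose, as in this case.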

Recovering db-cluster-2 works the same way as db-cluster-3. More on MySQL can be found here: https://ahelpme.com/tag/MySQL/.