Passing an NVIDIA card through to an LXC container is simple enough; there are three rules to follow:

- Bind-mount the NVIDIA device files from the host’s /dev into the LXC container’s /dev.

- Allow cgroup access to the bind-mounted /dev devices.

- Install the same version of the NVIDIA driver/software on the host and in the LXC container; otherwise tools such as nvidia-smi fail with "version mismatch" errors.

With this kind of pass-through (a bind mount), the video card can be used simultaneously by the host and by every LXC container it is bind-mounted into, so multiple LXC containers share the same video device(s).

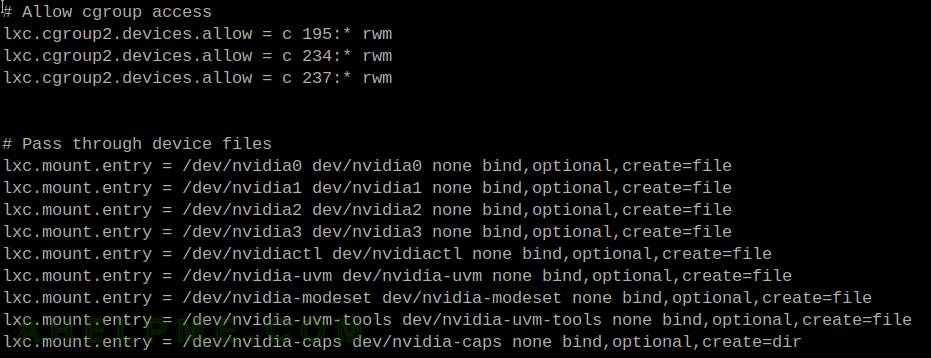

This is a working LXC 4.0.12 configuration:

    # Distribution configuration
    lxc.include = /usr/share/lxc/config/common.conf
    lxc.arch = x86_64

    # Container specific configuration
    lxc.rootfs.path = dir:/mnt/storage1/servers/gpu1u/rootfs
    lxc.uts.name = gpu1u

    # Network configuration
    lxc.net.0.type = macvlan
    lxc.net.0.link = enp1s0f1
    lxc.net.0.macvlan.mode = bridge
    lxc.net.0.flags = up
    lxc.net.0.name = eth0
    lxc.net.0.hwaddr = fe:77:3f:27:15:60

    # Allow cgroup access
    lxc.cgroup2.devices.allow = c 195:* rwm
    lxc.cgroup2.devices.allow = c 234:* rwm
    lxc.cgroup2.devices.allow = c 237:* rwm

    # Pass through device files
    lxc.mount.entry = /dev/nvidia0 dev/nvidia0 none bind,optional,create=file
    lxc.mount.entry = /dev/nvidia1 dev/nvidia1 none bind,optional,create=file
    lxc.mount.entry = /dev/nvidia2 dev/nvidia2 none bind,optional,create=file
    lxc.mount.entry = /dev/nvidia3 dev/nvidia3 none bind,optional,create=file
    lxc.mount.entry = /dev/nvidiactl dev/nvidiactl none bind,optional,create=file
    lxc.mount.entry = /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file
    lxc.mount.entry = /dev/nvidia-modeset dev/nvidia-modeset none bind,optional,create=file
    lxc.mount.entry = /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file
    lxc.mount.entry = /dev/nvidia-caps dev/nvidia-caps none bind,optional,create=dir

    # Autostart
    lxc.group = onboot
    lxc.start.auto = 1
    lxc.start.delay = 10
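Rules 1 and 2 have to agree: every bind-mounted device needs an `lxc.cgroup2.devices.allow` line covering its major (and minor) number. The sketch below is a hypothetical helper, not part of LXC. It understands only simple `key = value` lines and `c MAJOR:MINOR ACCESS` allow rules, and it takes the device numbers as an input mapping; on a real host they would come from `os.stat()` and `os.major()`/`os.minor()`.

```python
import re

# Hypothetical helper, not part of LXC: it understands only simple
# "key = value" lines and "c MAJOR:MINOR ACCESS" allow rules.
ALLOW_RE = re.compile(r"^c\s+(\d+|\*):(\d+|\*)\s+[rwm]+$")


def parse_lxc_config(text):
    """Return (key, value) pairs, skipping blank lines and # comments."""
    pairs = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            pairs.append((key.strip(), value.strip()))
    return pairs


def uncovered_devices(text, devices):
    """Return bind-mounted paths whose (major, minor) no allow rule covers.

    devices maps a host path such as "/dev/nvidia0" to (major, minor).
    Mounted paths absent from the mapping (e.g. directories) are skipped.
    """
    pairs = parse_lxc_config(text)
    rules = []
    for key, value in pairs:
        if key == "lxc.cgroup2.devices.allow":
            m = ALLOW_RE.match(value)
            if m:
                rules.append((m.group(1), m.group(2)))

    def covered(major, minor):
        return any(
            rmaj in ("*", str(major)) and rmin in ("*", str(minor))
            for rmaj, rmin in rules
        )

    missing = []
    for key, value in pairs:
        if key == "lxc.mount.entry":
            fields = value.split()
            if fields and fields[0] in devices and not covered(*devices[fields[0]]):
                missing.append(fields[0])
    return missing


if __name__ == "__main__":
    # Device numbers as in the /dev listing in the "Device numbers" section.
    sample = """
lxc.cgroup2.devices.allow = c 195:* rwm
lxc.mount.entry = /dev/nvidia0 dev/nvidia0 none bind,optional,create=file
lxc.mount.entry = /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file
"""
    devices = {"/dev/nvidia0": (195, 0), "/dev/nvidia-uvm": (234, 0)}
    print(uncovered_devices(sample, devices))  # prints ['/dev/nvidia-uvm']
```

Leaving out the `c 234:* rwm` rule, as in the sample, is exactly the kind of omission this catches.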

The configuration above binds four NVIDIA RTX A4000 cards to the LXC container gpu1u. Attaching to the container and running nvidia-smi verifies that everything works:

    [root@gpu1 ~]# lxc-attach -n gpu1u
    gpu1u ~ # . /etc/profile
    gpu1u ~ # nvidia-smi
    Wed Oct 4 10:57:36 2023
    +---------------------------------------------------------------------------------------+
    | NVIDIA-SMI 535.104.05             Driver Version: 535.104.05   CUDA Version: 12.2     |
    |-----------------------------------------+----------------------+----------------------+
    | GPU  Name                 Persistence-M | Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp   Perf          Pwr:Usage/Cap |         Memory-Usage | GPU-Util  Compute M. |
    |                                         |                      |               MIG M. |
    |=========================================+======================+======================|
    |   0  NVIDIA RTX A4000               Off | 00000000:17:00.0 Off |                  Off |
    | 41%   36C    P8               7W / 140W |   9833MiB / 16376MiB |      0%      Default |
    |                                         |                      |                  N/A |
    +-----------------------------------------+----------------------+----------------------+
    |   1  NVIDIA RTX A4000               Off | 00000000:31:00.0 Off |                  Off |
    | 41%   36C    P8               8W / 140W |   9833MiB / 16376MiB |      0%      Default |
    |                                         |                      |                  N/A |
    +-----------------------------------------+----------------------+----------------------+
    |   2  NVIDIA RTX A4000               Off | 00000000:B1:00.0 Off |                  Off |
    | 41%   36C    P8               9W / 140W |   9833MiB / 16376MiB |      0%      Default |
    |                                         |                      |                  N/A |
    +-----------------------------------------+----------------------+----------------------+
    |   3  NVIDIA RTX A4000               Off | 00000000:CA:00.0 Off |                  Off |
    | 41%   37C    P8              11W / 140W |   9833MiB / 16376MiB |      0%      Default |
    |                                         |                      |                  N/A |
    +-----------------------------------------+----------------------+----------------------+

    +---------------------------------------------------------------------------------------+
    | Processes:                                                                            |
    |  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
    |        ID   ID                                                             Usage      |
    |=======================================================================================|
    +---------------------------------------------------------------------------------------+

The NVIDIA driver packages on the host server (gpu1) and in the LXC container (gpu1u) are the same version, so nvidia-smi reports no errors.

If the driver versions differ, errors like the following appear:

    [root@gpu1 ~]# lxc-attach -n gpu2u
    gpu2u ~ # . /etc/profile
    gpu2u ~ # nvidia-smi
    Failed to initialize NVML: Driver/library version mismatch
    NVML library version: 535.113

The driver version loaded on the host is 535.104.05, but the NVIDIA driver installed in the LXC container gpu2u is 535.113.01.
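A mismatch like this can be spotted before running nvidia-smi by comparing the kernel module version with the version suffix of the user-space NVML library installed in the container. The sketch below rests on a few assumptions: that /proc/driver/nvidia/version (written by the host kernel, so it should be readable inside the container too) starts with a line such as `NVRM version: NVIDIA UNIX x86_64 Kernel Module  535.104.05 ...`, that the library is installed as `libnvidia-ml.so.<version>`, and that it lives in one of the hypothetical search directories listed. It is not an NVIDIA tool.

```python
import glob
import os
import re

# Assumption: the first line of /proc/driver/nvidia/version looks like
# "NVRM version: NVIDIA UNIX x86_64 Kernel Module  535.104.05  <build date>".
VERSION_RE = re.compile(r"\b(\d+\.\d+(?:\.\d+)?)\b")
LIB_RE = re.compile(r"^libnvidia-ml\.so\.(\d+\.\d+(?:\.\d+)?)$")

# Hypothetical search directories; adjust for the distribution in use.
LIB_DIRS = ["/usr/lib64", "/usr/lib", "/usr/lib/x86_64-linux-gnu"]


def kernel_module_version(proc_text):
    """Return the driver version from the /proc file's first line, or None."""
    lines = proc_text.splitlines()
    m = VERSION_RE.search(lines[0]) if lines else None
    return m.group(1) if m else None


def library_version(path):
    """Return "535.113.01" for ".../libnvidia-ml.so.535.113.01", else None."""
    m = LIB_RE.match(os.path.basename(path))
    return m.group(1) if m else None


def installed_library_versions(lib_dirs=LIB_DIRS):
    """Collect the versions of all libnvidia-ml.so.<version> files found."""
    found = set()
    for d in lib_dirs:
        for path in glob.glob(os.path.join(d, "libnvidia-ml.so.*")):
            version = library_version(path)
            if version:
                found.add(version)
    return found


def check(proc_path="/proc/driver/nvidia/version", lib_dirs=LIB_DIRS):
    """Compare the loaded kernel module with the installed NVML library."""
    try:
        with open(proc_path) as f:
            kernel = kernel_module_version(f.read())
    except OSError:
        return "NVIDIA kernel module not loaded or not visible"
    if kernel is None:
        return "could not parse " + proc_path
    libs = installed_library_versions(lib_dirs)
    if not libs:
        return f"kernel module {kernel}; no libnvidia-ml.so.<version> found"
    if libs == {kernel}:
        return f"OK: kernel module and NVML library are both {kernel}"
    return f"MISMATCH: kernel module {kernel}, NVML library {', '.join(sorted(libs))}"


if __name__ == "__main__":
    print(check())
```

Run inside gpu2u, this would be expected to report a mismatch between 535.104.05 and 535.113.01, provided the assumptions above hold on that system.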

Device numbers

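It’s easy to find the device numbers on any Linux system. In a long ls listing of /dev, the two numbers before the date are the major and minor device numbers; the major number (195 and 234 below) is what the lxc.cgroup2.devices.allow rules refer to: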

    [root@gpu1 ~]# ls -al /dev/|grep nvidia
    crw-rw-rw-. 1 root root 195, 0 Sep 13 09:12 nvidia0
    crw-rw-rw-. 1 root root 195, 1 Sep 13 09:12 nvidia1
    crw-rw-rw-. 1 root root 195, 2 Sep 13 09:12 nvidia2
    crw-rw-rw-. 1 root root 195, 3 Sep 13 09:12 nvidia3
    drwxr-xr-x. 2 root root 80 Sep 13 09:14 nvidia-caps
    crw-rw-rw-. 1 root root 195, 255 Sep 13 09:12 nvidiactl
    crw-rw-rw-. 1 root root 195, 254 Sep 13 09:12 nvidia-modeset
    crw-rw-rw-. 1 root root 234, 0 Sep 13 09:12 nvidia-uvm
    crw-rw-rw-. 1 root root 234, 1 Sep 13 09:12 nvidia-uvm-tools

In general, these are the devices that should be bound into the LXC container. If future driver versions add more devices or use other names, they will probably need to be added to the LXC configuration as well.
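The listing above can also be turned into configuration lines automatically, which helps when device names change. The following is a minimal sketch (a hypothetical helper, not an LXC tool): it looks for entries in /dev whose names start with `nvidia`, descends into directories such as nvidia-caps to collect the major numbers of the character devices inside them, and prints `lxc.cgroup2.devices.allow` and `lxc.mount.entry` lines in the same style as the configuration above. The output should be reviewed before it goes into a container config.

```python
import os
import stat


def scan_nvidia_devices(dev_dir="/dev"):
    """Return (entries, majors) for /dev entries whose names start with "nvidia".

    entries: sorted list of (name, is_dir) for the top-level entries.
    majors: sorted major numbers of all character devices found, including
    those inside nvidia* subdirectories such as nvidia-caps.
    """
    entries, majors = [], set()
    try:
        names = sorted(os.listdir(dev_dir))
    except OSError:
        return [], []
    for name in names:
        if not name.startswith("nvidia"):
            continue
        path = os.path.join(dev_dir, name)
        st = os.stat(path)
        if stat.S_ISDIR(st.st_mode):
            entries.append((name, True))
            for sub in sorted(os.listdir(path)):
                sub_st = os.stat(os.path.join(path, sub))
                if stat.S_ISCHR(sub_st.st_mode):
                    majors.add(os.major(sub_st.st_rdev))
        elif stat.S_ISCHR(st.st_mode):
            entries.append((name, False))
            majors.add(os.major(st.st_rdev))
    return entries, sorted(majors)


def render_config(entries, majors):
    """Format allow rules and bind-mount entries like the article's config."""
    lines = ["# Allow cgroup access"]
    lines += [f"lxc.cgroup2.devices.allow = c {m}:* rwm" for m in majors]
    lines.append("# Pass through device files")
    for name, is_dir in entries:
        create = "dir" if is_dir else "file"
        lines.append(
            f"lxc.mount.entry = /dev/{name} dev/{name} none bind,optional,create={create}"
        )
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_config(*scan_nvidia_devices()))
```

Allowing whole majors (`MAJOR:*`) mirrors the configuration shown earlier; a stricter setup could emit one rule per major:minor pair instead.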

Extras: verifying that the PyTorch machine learning framework works properly

Most NVIDIA cards in server environments are used for machine learning, so it is worth verifying that software such as PyTorch can use the NVIDIA cards inside the LXC container.

Here is an LXC container with passed-through NVIDIA cards, where PyTorch can use the cards:

    [root@gpu1 ~]# lxc-attach -n gpu1u
    gpu1u ~ # . /etc/profile
    gpu1u ~ # python
    Python 3.11.5 (main, Aug 27 2023, 17:24:15) [GCC 12.3.1 20230526] on linux
    Type "help", "copyright", "credits" or "license" for more information.
    >>> import torch
    >>> torch.cuda.is_available()
    True
    >>> torch.cuda.device_count()
    4
    >>> torch.cuda.current_device()
    0
    >>> torch.cuda.device(0)
    <torch.cuda.device object at 0x7ff76d6105d0>
    >>> torch.cuda.get_device_name(0)
    'NVIDIA RTX A4000'
    >>>

Here is another LXC container with an updated NVIDIA driver whose version differs from the one loaded on the host. torch.cuda.is_available() still returns True, but NVML cannot be initialized:

    [root@gpu1 ~]# lxc-attach -n gpu2u
    gpu2u ~ # . /etc/profile
    gpu2u ~ # python
    Python 3.11.5 (main, Aug 27 2023, 17:24:15) [GCC 12.3.1 20230526] on linux
    Type "help", "copyright", "credits" or "license" for more information.
    >>> import torch
    >>> torch.cuda.is_available()
    True
    >>> torch.cuda.device_count()
    /usr/lib/python3.11/site-packages/torch/cuda/__init__.py:546: UserWarning: Can't initialize NVML
      warnings.warn("Can't initialize NVML")
    4
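The interactive checks in these two sessions can be collected into a small script run inside a container. This is a minimal sketch, assuming PyTorch is installed there. It uses only `torch.cuda.is_available()`, `torch.cuda.device_count()`, `torch.cuda.get_device_name()` and a tiny matrix product on each GPU, and it records Python warnings such as "Can't initialize NVML". That way a driver/library mismatch is reported even when `is_available()` returns `True`, as it did in gpu2u.

```python
import warnings


def cuda_report():
    """Collect basic CUDA facts as a dict; does not raise if torch is missing."""
    report = {"torch": False, "available": False, "devices": [], "warnings": []}
    try:
        import torch
    except ImportError:
        return report
    report["torch"] = True
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter("always")  # capture repeated warnings too
        report["available"] = torch.cuda.is_available()
        if report["available"]:
            for i in range(torch.cuda.device_count()):
                # A small computation shows the device is usable, not just listed:
                # ones(2x2) @ ones(2x2) is a 2x2 matrix of 2s, which sums to 8.
                try:
                    x = torch.ones(2, 2, device=f"cuda:{i}")
                    ok = (x @ x).sum().item() == 8.0
                except RuntimeError:
                    ok = False
                report["devices"].append((i, torch.cuda.get_device_name(i), ok))
    report["warnings"] = [str(w.message) for w in caught]
    return report


if __name__ == "__main__":
    r = cuda_report()
    print("PyTorch installed:", r["torch"])
    print("CUDA available:", r["available"])
    for index, name, ok in r["devices"]:
        print(f"  cuda:{index} {name} {'OK' if ok else 'FAILED'}")
    for message in r["warnings"]:
        print("Warning:", message)
```

A non-empty warnings list is a hint to compare the driver versions on the host and in the container.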
