This article shows CPU-only inference on a modern server processor, the AMD EPYC 9554. The LLM is Alibaba's Qwen3 Coder 30B A3B. It is tested at several quantizations to show how they differ in generated tokens per second and in memory consumption. Qwen3 Coder 30B A3B is an interesting model whose main target is software engineers and the tools they use for advanced auto-completion and code assistance. It is published by the Alibaba giant, and in many cases it can be used to offload some LLM work to local hardware for free, especially in IT. The model is a MoE (Mixture of Experts) with 30B total parameters and 128 experts, of which 3.3B parameters and 8 experts are activated per token. Bear in mind that this model is meant to be a small and fast alternative to its much bigger sibling, Qwen3-Coder-480B-A35B-Instruct. That model needs roughly 300 GiB of memory even at Q4 and competes with the very expensive Claude 4. Qwen3 Coder 30B A3B is light-weight and fast, and with enough context it can be a strong coding assistant. This article covers only token generation speed. Other papers cover the output quality of the different quantized versions.

The testing bench is:

- Single-socket AMD EPYC 9554 CPU: 64 cores / 128 threads

- 192 GB RAM in 12 channels: all 12 CPU memory channels are populated with 16 GB Samsung DDR5-5600 modules

- ASUS K14PA-U24-T Series motherboard

- Testing with llama.cpp (llama-bench)

- Theoretical memory bandwidth: 460.8 GB/s (according to the official AMD documentation)

- The context window is the llama-bench default of 4K. Memory consumption could vary greatly if the context window is increased.

- More information on the setup and benchmarks: LLM inference benchmarks with llamacpp and AMD EPYC 9554 CPU
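Two of the test-bench figures can be checked with simple arithmetic.

First, the 460.8 GB/s figure equals 12 channels × 4800 MT/s × 8 bytes per transfer. That is DDR5-4800, the memory speed officially supported by EPYC 9004-series processors, so the 5600-rated DIMMs presumably run at 4800 MT/s on this platform.

Second, the context window matters for memory because the f16 KV cache grows linearly with it.

The Python sketch below computes both. The layer count, KV-head count and head dimension are assumptions taken from the published model configuration and should be verified against the model's `config.json`. The KV estimate also ignores llama.cpp's other compute buffers.

```python
# Back-of-the-envelope numbers for the test bench.
# ASSUMPTION: the layer / KV-head / head-dim values below are taken from the published
# Qwen3-Coder-30B-A3B configuration; verify them against the model's config.json.


def ddr5_bandwidth_gbs(channels: int, mt_per_s: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s: channels x MT/s x 8 bytes (64-bit channel)."""
    return channels * mt_per_s * bytes_per_transfer / 1000


def kv_cache_bytes(n_ctx: int, n_layers: int, n_kv_heads: int, head_dim: int,
                   bytes_per_elem: int = 2) -> int:
    """K and V caches: 2 tensors per layer, one entry per context token (f16 = 2 bytes)."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem * n_ctx


if __name__ == "__main__":
    print(f"12 x DDR5-4800: {ddr5_bandwidth_gbs(12, 4800):.1f} GB/s")  # 460.8 GB/s
    print(f"12 x DDR5-5600: {ddr5_bandwidth_gbs(12, 5600):.1f} GB/s")  # 537.6 GB/s
    layers, kv_heads, head_dim = 48, 4, 128  # assumed model architecture (see above)
    for n_ctx in (4096, 32768, 262144):
        gib = kv_cache_bytes(n_ctx, layers, kv_heads, head_dim) / 2**30
        print(f"n_ctx={n_ctx:>6}: ~{gib:.2f} GiB of f16 KV cache")
```

Under these assumptions, the KV cache needs about 0.4 GiB at a 4K context but about 24 GiB at a 256K-token context. This is why the memory column below only holds for the small default context.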

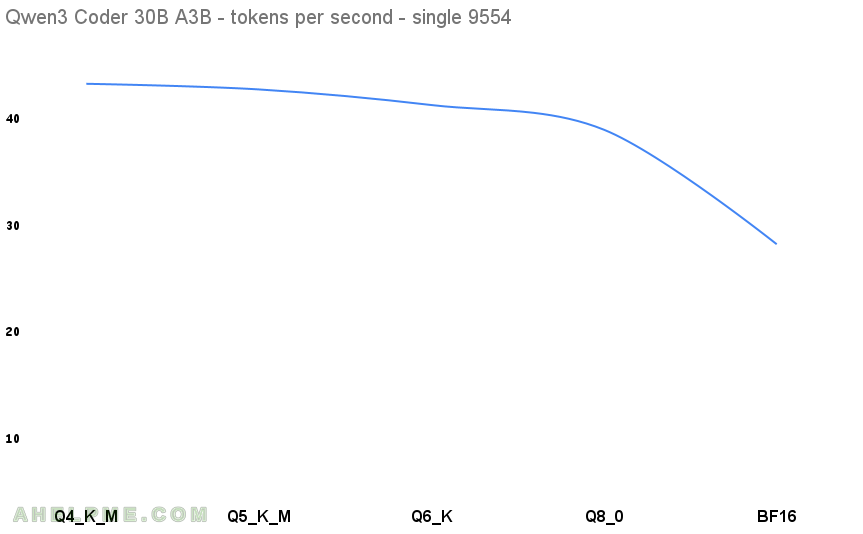

Here are the results. The first benchmark, Q4_K_M, is the baseline for the diff column below. Q4 quantizations are very popular because they offer good quality with a much smaller footprint than the full-size model.

| N | model | parameters | quantization | memory | diff t/s % (vs Q4_K_M) | tokens/s (mean of tg128 to tg2048) |
|---|---|---|---|---|---|---|
| 1 | Qwen3 Coder 30B A3B | 30B | Q4_K_M | 17.28 GiB | 0 | 42.154 |
| 2 | Qwen3 Coder 30B A3B | 30B | Q5_K_M | 20.23 GiB | 1.25 | 41.626 |
| 3 | Qwen3 Coder 30B A3B | 30B | Q6_K | 23.36 GiB | 4.72 | 40.164 |
| 4 | Qwen3 Coder 30B A3B | 30B | Q8_0 | 30.25 GiB | 10.21 | 37.848 |
| 5 | Qwen3 Coder 30B A3B | 30B | BF16 | 56.89 GiB | 35.64 | 27.132 |
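The tokens/s column is the mean of the five generation tests (tg128 to tg2048) in the raw llama-bench outputs further down.

The short Python sketch below recomputes those means from the measured values. It also derives the relative slowdown in two ways: against the Q4_K_M baseline, and against the next-smaller quantization. This makes the percentages easy to check.

```python
# Measured tg128..tg2048 token-generation rates (t/s) copied from the llama-bench runs.
RESULTS = {
    "Q4_K_M": [43.44, 43.25, 42.74, 41.80, 39.54],
    "Q5_K_M": [43.04, 42.77, 42.26, 41.21, 38.85],
    "Q6_K":   [41.17, 41.27, 40.73, 39.80, 37.85],
    "Q8_0":   [38.90, 38.84, 38.59, 37.37, 35.54],
    "BF16":   [27.70, 27.62, 27.44, 26.96, 25.94],
}


def mean(values: list[float]) -> float:
    return sum(values) / len(values)


def slowdown_pct(baseline: float, value: float) -> float:
    """Relative drop in t/s versus the baseline, in percent."""
    return (baseline - value) / baseline * 100


if __name__ == "__main__":
    base = mean(RESULTS["Q4_K_M"])
    prev = base
    for quant, runs in RESULTS.items():  # dicts keep insertion order (Q4 -> BF16)
        avg = mean(runs)
        print(f"{quant:7s} mean={avg:7.3f} t/s  vs Q4_K_M={slowdown_pct(base, avg):6.2f}%  "
              f"vs previous={slowdown_pct(prev, avg):6.2f}%")
        prev = avg
```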

Going from Q4 to BF16 costs about 35.6% of the token generation speed. Even so, BF16 remains usable at roughly 26 to 28 tokens per second. Around 25 tokens per second is good enough for daily use by a single user, which is the typical scenario for CPU inference.

Two things stand out. First, the slowdown from Q4 to Q8 is only about 10% (42.15 vs 37.85 t/s), and each step from Q4_K_M through Q8_0 costs less than 6%. Q8_0 may therefore be worth choosing over Q4, Q5 and Q6 for its higher precision. Second, generation speed clearly drops as the answer gets longer: for every quantization, tg2048 is slower than tg128. The drop is about 9% for Q4_K_M (43.44 vs 39.54 t/s) and about 6% for BF16 (27.70 vs 25.94 t/s).
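Only about 3.3B of the 30.5B parameters are used per generated token. A rough ceiling for token generation is therefore the memory bandwidth divided by the bytes of active weights read per token.

The Python sketch below makes that estimate under these assumptions:

- the theoretical 460.8 GB/s is fully achievable;
- the active weights are quantized like the file on average (file size ÷ total parameters);
- KV-cache reads, expert routing and compute are ignored.

It is a sanity check, not a performance model.

```python
# Rough upper bound on token generation for an MoE model when memory bandwidth is the limit.
# ASSUMPTIONS: peak bandwidth is reachable, active weights have the file's average
# bytes/param, and KV-cache traffic, routing and compute cost are ignored.
GIB = 2**30
BANDWIDTH_BYTES_S = 460.8e9  # theoretical peak from the test-bench description
TOTAL_PARAMS = 30.53e9       # "params" column reported by llama-bench
ACTIVE_PARAMS = 3.3e9        # activated parameters per token (model description)

# File size (GiB) and measured mean t/s from the results table.
MODELS = {
    "Q4_K_M": (17.28, 42.154),
    "Q5_K_M": (20.23, 41.626),
    "Q6_K":   (23.36, 40.164),
    "Q8_0":   (30.25, 37.848),
    "BF16":   (56.89, 27.132),
}


def ceiling_tokens_per_s(file_gib: float) -> float:
    """Tokens/s if every active weight is read once per token at the average bytes/param."""
    bytes_per_param = file_gib * GIB / TOTAL_PARAMS
    bytes_per_token = ACTIVE_PARAMS * bytes_per_param
    return BANDWIDTH_BYTES_S / bytes_per_token


if __name__ == "__main__":
    for quant, (size_gib, measured) in MODELS.items():
        ceiling = ceiling_tokens_per_s(size_gib)
        print(f"{quant:7s} ceiling ~{ceiling:6.1f} t/s  measured {measured:6.2f} t/s "
              f"({measured / ceiling:5.1%} of ceiling)")
```

Under these assumptions the ceiling is roughly 230 t/s for Q4_K_M and roughly 70 t/s for BF16. The measured rates sit well below the bandwidth limit, and further below it for the smaller quantizations. This suggests that factors other than raw bandwidth may also matter, such as dequantization work or synchronization across 64 threads.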

Here are all the tests output:

1. Qwen3 Coder 30B A3B Q4_K_M

Using the unsloth `Qwen3-Coder-30B-A3B-Instruct-Q4_K_M.gguf` file.

`/root/llama.cpp/build/bin/llama-bench --numa distribute -t 64 -p 0 -n 128,256,512,1024,2048 -m /root/models/tests/Qwen3-Coder-30B-A3B-Instruct-Q4_K_M.gguf`

| model | size | params | backend | threads | test | t/s |
| ------------------------------ | ---------: | ---------: | ---------- | ------: | --------------: | -------------------: |
| qwen3moe 30B.A3B Q4_K - Medium | 17.28 GiB | 30.53 B | BLAS,RPC | 64 | tg128 | 43.44 ± 0.02 |
| qwen3moe 30B.A3B Q4_K - Medium | 17.28 GiB | 30.53 B | BLAS,RPC | 64 | tg256 | 43.25 ± 0.00 |
| qwen3moe 30B.A3B Q4_K - Medium | 17.28 GiB | 30.53 B | BLAS,RPC | 64 | tg512 | 42.74 ± 0.01 |
| qwen3moe 30B.A3B Q4_K - Medium | 17.28 GiB | 30.53 B | BLAS,RPC | 64 | tg1024 | 41.80 ± 0.02 |
| qwen3moe 30B.A3B Q4_K - Medium | 17.28 GiB | 30.53 B | BLAS,RPC | 64 | tg2048 | 39.54 ± 0.02 |

build: 66625a59 (6040)

2. Qwen3 Coder 30B A3B Q5_K_M

Using the unsloth `Qwen3-Coder-30B-A3B-Instruct-Q5_K_M.gguf` file.

`/root/llama.cpp/build/bin/llama-bench --numa distribute -t 64 -p 0 -n 128,256,512,1024,2048 -m /root/models/tests/Qwen3-Coder-30B-A3B-Instruct-Q5_K_M.gguf`

| model | size | params | backend | threads | test | t/s |
| ------------------------------ | ---------: | ---------: | ---------- | ------: | --------------: | -------------------: |
| qwen3moe 30B.A3B Q5_K - Medium | 20.23 GiB | 30.53 B | BLAS,RPC | 64 | tg128 | 43.04 ± 0.05 |
| qwen3moe 30B.A3B Q5_K - Medium | 20.23 GiB | 30.53 B | BLAS,RPC | 64 | tg256 | 42.77 ± 0.01 |
| qwen3moe 30B.A3B Q5_K - Medium | 20.23 GiB | 30.53 B | BLAS,RPC | 64 | tg512 | 42.26 ± 0.01 |
| qwen3moe 30B.A3B Q5_K - Medium | 20.23 GiB | 30.53 B | BLAS,RPC | 64 | tg1024 | 41.21 ± 0.03 |
| qwen3moe 30B.A3B Q5_K - Medium | 20.23 GiB | 30.53 B | BLAS,RPC | 64 | tg2048 | 38.85 ± 0.01 |

build: 66625a59 (6040)

3. Qwen3 Coder 30B A3B Q6_K

Using the unsloth `Qwen3-Coder-30B-A3B-Instruct-Q6_K.gguf` file.

`/root/llama.cpp/build/bin/llama-bench --numa distribute -t 64 -p 0 -n 128,256,512,1024,2048 -m /root/models/tests/Qwen3-Coder-30B-A3B-Instruct-Q6_K.gguf`

| model | size | params | backend | threads | test | t/s |
| ------------------------------ | ---------: | ---------: | ---------- | ------: | --------------: | -------------------: |
| qwen3moe 30B.A3B Q6_K | 23.36 GiB | 30.53 B | BLAS,RPC | 64 | tg128 | 41.17 ± 0.07 |
| qwen3moe 30B.A3B Q6_K | 23.36 GiB | 30.53 B | BLAS,RPC | 64 | tg256 | 41.27 ± 0.03 |
| qwen3moe 30B.A3B Q6_K | 23.36 GiB | 30.53 B | BLAS,RPC | 64 | tg512 | 40.73 ± 0.03 |
| qwen3moe 30B.A3B Q6_K | 23.36 GiB | 30.53 B | BLAS,RPC | 64 | tg1024 | 39.80 ± 0.01 |
| qwen3moe 30B.A3B Q6_K | 23.36 GiB | 30.53 B | BLAS,RPC | 64 | tg2048 | 37.85 ± 0.02 |

build: 66625a59 (6040)

4. Qwen3 Coder 30B A3B Q8_0

Using the unsloth `Qwen3-Coder-30B-A3B-Instruct-Q8_0.gguf` file.

`/root/llama.cpp/build/bin/llama-bench --numa distribute -t 64 -p 0 -n 128,256,512,1024,2048 -m /root/models/tests/Qwen3-Coder-30B-A3B-Instruct-Q8_0.gguf`

| model | size | params | backend | threads | test | t/s |
| ------------------------------ | ---------: | ---------: | ---------- | ------: | --------------: | -------------------: |
| qwen3moe 30B.A3B Q8_0 | 30.25 GiB | 30.53 B | BLAS,RPC | 64 | tg128 | 38.90 ± 0.01 |
| qwen3moe 30B.A3B Q8_0 | 30.25 GiB | 30.53 B | BLAS,RPC | 64 | tg256 | 38.84 ± 0.01 |
| qwen3moe 30B.A3B Q8_0 | 30.25 GiB | 30.53 B | BLAS,RPC | 64 | tg512 | 38.59 ± 0.07 |
| qwen3moe 30B.A3B Q8_0 | 30.25 GiB | 30.53 B | BLAS,RPC | 64 | tg1024 | 37.37 ± 0.09 |
| qwen3moe 30B.A3B Q8_0 | 30.25 GiB | 30.53 B | BLAS,RPC | 64 | tg2048 | 35.54 ± 0.02 |

build: 66625a59 (6040)

5. Qwen3 Coder 30B A3B BF16

Using the unsloth `Qwen3-Coder-30B-A3B-Instruct-BF16-00001-of-00002.gguf` and `Qwen3-Coder-30B-A3B-Instruct-BF16-00002-of-00002.gguf` files.

`/root/llama.cpp/build/bin/llama-bench --numa distribute -t 64 -p 0 -n 128,256,512,1024,2048 -m /root/models/tests/Qwen3-Coder-30B-A3B-Instruct-BF16-00001-of-00002.gguf`

| model | size | params | backend | threads | test | t/s |
| ------------------------------ | ---------: | ---------: | ---------- | ------: | --------------: | -------------------: |
| qwen3moe 30B.A3B BF16 | 56.89 GiB | 30.53 B | BLAS,RPC | 64 | tg128 | 27.70 ± 0.01 |
| qwen3moe 30B.A3B BF16 | 56.89 GiB | 30.53 B | BLAS,RPC | 64 | tg256 | 27.62 ± 0.01 |
| qwen3moe 30B.A3B BF16 | 56.89 GiB | 30.53 B | BLAS,RPC | 64 | tg512 | 27.44 ± 0.00 |
| qwen3moe 30B.A3B BF16 | 56.89 GiB | 30.53 B | BLAS,RPC | 64 | tg1024 | 26.96 ± 0.04 |
| qwen3moe 30B.A3B BF16 | 56.89 GiB | 30.53 B | BLAS,RPC | 64 | tg2048 | 25.94 ± 0.00 |

build: 66625a59 (6040)
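The raw outputs above are llama-bench's default markdown tables. When comparing many runs, it can help to extract the numbers programmatically.

The minimal Python sketch below does this with a regular expression. It assumes the row layout shown above: a `tgN` or `ppN` test name followed by `mean ± stddev`. It is not an official llama-bench parser. llama-bench can also emit machine-readable output through its `-o` option (for example `-o json` or `-o csv`), which is more robust than scraping markdown.

```python
import re

# Matches the "test" and "t/s" cells of a llama-bench markdown row, e.g.
# | qwen3moe 30B.A3B Q8_0 | 30.25 GiB | 30.53 B | BLAS,RPC | 64 | tg128 | 38.90 ± 0.01 |
ROW = re.compile(r"\|\s*((?:tg|pp)\d+)\s*\|\s*([\d.]+)\s*±\s*([\d.]+)\s*\|")


def parse_llama_bench(text: str) -> dict[str, tuple[float, float]]:
    """Map each test name (tg128, pp512, ...) to (mean t/s, standard deviation)."""
    return {m.group(1): (float(m.group(2)), float(m.group(3))) for m in ROW.finditer(text)}


if __name__ == "__main__":
    sample = """
| qwen3moe 30B.A3B Q8_0 | 30.25 GiB | 30.53 B | BLAS,RPC | 64 | tg128 | 38.90 ± 0.01 |
| qwen3moe 30B.A3B Q8_0 | 30.25 GiB | 30.53 B | BLAS,RPC | 64 | tg2048 | 35.54 ± 0.02 |
"""
    results = parse_llama_bench(sample)
    print(results)  # {'tg128': (38.9, 0.01), 'tg2048': (35.54, 0.02)}
    tg_means = [t_s for name, (t_s, _) in results.items() if name.startswith("tg")]
    print(f"average generation rate: {sum(tg_means) / len(tg_means):.3f} t/s")
```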

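Before all tests, the following cache-cleaning commands were executed as root:

- `echo 0 > /proc/sys/kernel/numa_balancing` (disables automatic NUMA balancing)

- `echo 3 > /proc/sys/vm/drop_caches` (drops the page cache, dentries and inodes)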
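The whole procedure can be scripted: clean the caches, then run llama-bench for each quantization. The sketch below is a hypothetical Python wrapper, not the script used for this article. It makes these assumptions:

- it reuses the paths and flags from the commands above;
- all GGUF files sit in `/root/models/tests`;
- it adds a `sync` before dropping caches, which the original commands do not include.

It must run as root because it writes to `/proc`.

```python
import os
import subprocess
from pathlib import Path

# HYPOTHETICAL runner: paths and flags mirror the commands used in this article.
LLAMA_BENCH = "/root/llama.cpp/build/bin/llama-bench"
MODELS_DIR = Path("/root/models/tests")


def bench_command(model: Path, threads: int = 64) -> list[str]:
    """Build the llama-bench invocation used for every quantization in this article."""
    return [LLAMA_BENCH, "--numa", "distribute", "-t", str(threads),
            "-p", "0", "-n", "128,256,512,1024,2048", "-m", str(model)]


def reset_caches() -> None:
    """The two echo commands above, plus a sync before dropping caches; requires root."""
    Path("/proc/sys/kernel/numa_balancing").write_text("0\n")  # disable automatic NUMA balancing
    os.sync()  # flush dirty pages so drop_caches can free more of the page cache
    Path("/proc/sys/vm/drop_caches").write_text("3\n")  # drop page cache, dentries and inodes


if __name__ == "__main__":
    # Split GGUF files (e.g. the BF16 model) are passed to llama-bench via their first shard only.
    models = sorted(p for p in MODELS_DIR.glob("*.gguf")
                    if "-of-" not in p.name or "-00001-of-" in p.name)
    for model in models:
        reset_caches()
        print(f"=== {model.name}", flush=True)
        subprocess.run(bench_command(model), check=True)
```

Cleaning the caches before every model, rather than once for the whole suite, keeps each run starting from the same state. This is a design choice of the sketch.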