This article shows GPU-only inference on a relatively old GPU from 2018, the AMD Radeon Instinct MI50 32GB. The model is Meta Llama 3.1 8B (meta-llama/Llama-3.1-8B), tested with different quantizations to show the difference in token generation speed (tokens per second) and memory consumption. Llama 3.1 8B is a small dense LLM, primarily used for text summarization and benchmark tests, because it delivers good results for its memory footprint and token generation speed. It is published by Meta, and in many cases it is worth considering for offloading some LLM work to local hardware for free. The article focuses only on benchmarking token generation speed; other papers cover the output quality of the different quantized versions. Thanks to its small memory footprint, the model is used in many benchmark tests of GPUs with little memory. More llama-bench here.

The testing bench is:

- Single GPU: AMD Radeon Instinct MI50 32GB, 3840 cores

- 32GB of HBM2 memory with a 4096-bit bus width.

- Test server: Gigabyte MS73-HB1 with dual Xeon 8480+.

- PCIe link speed 16 GT/s, width x16

- Testing with llama.cpp (llama-bench)

- Theoretical memory bandwidth of 1.02 TB/s (according to the official documents from AMD)

- The context window is the llama-bench default of 4K. Memory consumption can grow considerably if the context window is increased.

- Price: around $250 on ebay.com (Q4 2025).
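To give a feel for how memory consumption grows with the context window, here is a minimal sketch of a KV-cache size estimate. The architecture values (32 layers, 8 key/value heads, head dimension 128) are assumptions taken from the published Llama 3.1 8B configuration, and the FP16 cache type is also an assumption; none of these were measured in this article.

```python
# Rough KV-cache size estimate. The architecture values below are assumptions
# based on the published Llama 3.1 8B configuration, not measurements.
N_LAYERS = 32        # transformer blocks
N_KV_HEADS = 8       # grouped-query attention: 8 key/value heads
HEAD_DIM = 128       # dimension of each attention head
BYTES_PER_VALUE = 2  # assuming an FP16 KV cache


def kv_cache_bytes(context_tokens: int) -> int:
    """Bytes needed to store keys and values for `context_tokens` tokens."""
    # The leading 2 accounts for one key and one value vector per head.
    per_token = 2 * N_LAYERS * N_KV_HEADS * HEAD_DIM * BYTES_PER_VALUE
    return per_token * context_tokens


for ctx in (4096, 32768, 131072):
    print(f"{ctx:>7} tokens: {kv_cache_bytes(ctx) / 2**30:.2f} GiB")
```

Under these assumptions the cache grows linearly with the context length, so a long context can add several GiB on top of the model weights.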

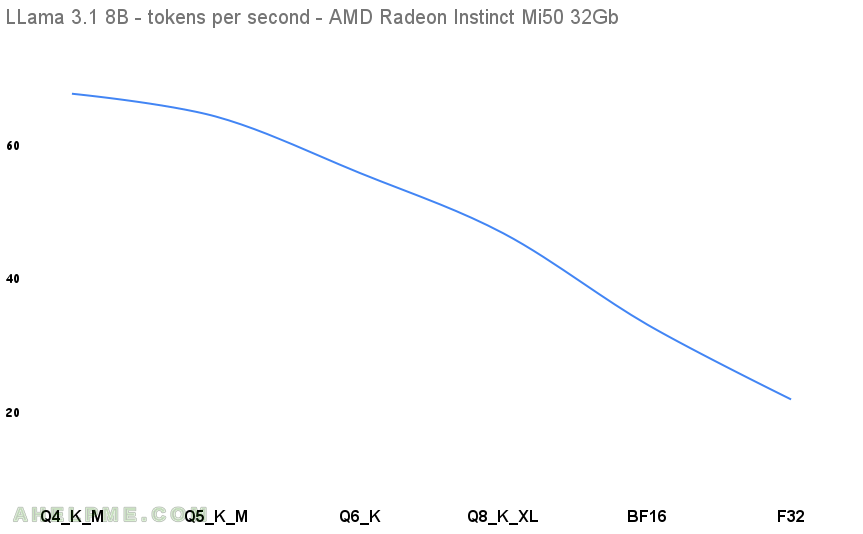

Here are the results. The first benchmark test, Q4_K_M, is the baseline, because Q4 quantizations are very popular: they offer good quality and a very small footprint compared to the full-size model. Each value in the diff column is the percentage drop in tokens per second relative to the previous row.

| N | model | parameters | quantization | memory | diff t/s % (vs. previous row) | tokens/s (average) |
|---|---|---|---|---|---|---|
| 1 | llama 8B | 8.03B | Q4_K_M | 4.58 GiB | 0 | 65.958 |
| 2 | llama 8B | 8.03B | Q5_K_M | 5.33 GiB | 5.176 | 62.544 |
| 3 | llama 8B | 8.03B | Q6_K | 6.14 GiB | 13.5 | 54.1 |
| 4 | llama 8B | 8.03B | Q8_K_XL | 9.84 GiB | 16.74 | 45.04 |
| 5 | llama 8B | 8.03B | BF16 | 14.96 GiB | 30.337 | 31.376 |
| 6 | llama 8B | 8.03B | F32 | 29.92 GiB | 35.734 | 20.164 |

It’s worth noting that as the total number of output tokens increases, the tokens per second can decrease significantly. The numbers in the table above are therefore the expected performance averaged over the output sizes used in the tests below: 128, 256, 512, 1024 and 2048 tokens.
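The averaging described above can be reproduced with a short script. This is a sketch only: the per-test values are copied from the llama-bench outputs in this article, and the helper names (`average`, `drop_percent`, `summarize`) are hypothetical, chosen for illustration.

```python
# Per-test t/s values copied from the llama-bench outputs in this article,
# in the order tg128, tg256, tg512, tg1024, tg2048.
RESULTS = {
    "Q4_K_M": [71.07, 69.76, 67.80, 63.62, 57.54],
    "Q5_K_M": [67.04, 66.07, 64.33, 60.31, 54.97],
    "Q6_K": [58.14, 57.07, 55.59, 51.87, 47.83],
    "Q8_K_XL": [47.22, 46.95, 46.04, 43.90, 41.09],
    "BF16": [32.43, 32.36, 31.81, 30.84, 29.44],
    "F32": [20.48, 20.50, 20.36, 20.04, 19.44],
}


def average(values):
    """Arithmetic mean of the per-test tokens/s values."""
    return sum(values) / len(values)


def drop_percent(previous, current):
    """Percentage drop of `current` relative to `previous`."""
    return (previous - current) / previous * 100


def summarize(results):
    """Return (name, average t/s, drop vs. previous row in %) per model."""
    rows = []
    previous = None
    for name, values in results.items():
        avg = average(values)
        diff = 0.0 if previous is None else drop_percent(previous, avg)
        rows.append((name, avg, diff))
        previous = avg
    return rows


for name, avg, diff in summarize(RESULTS):
    print(f"{name:8s} {avg:7.3f} t/s  drop vs. previous row: {diff:6.3f} %")
```

The drop is computed against the previous row rather than against Q4_K_M, which is how the diff values in the table are obtained.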

The difference between Q4 and F32 is a drop of about 69.4% in tokens per second, yet even F32 is usable at around 20 tokens per second. Around 15 tokens per second is good enough for daily use by a single user, which this GPU delivers easily.
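As a rough cross-check of these numbers against the theoretical memory bandwidth, the sketch below estimates how many bytes per second the GPU would read if every generated token streamed all model weights exactly once. That is a simplifying assumption (it ignores the KV cache, activations and any caching effects), so treat the result as an illustration rather than a measurement. The helper name `implied_bandwidth` is hypothetical.

```python
GIB = 2**30
PEAK_BANDWIDTH = 1.02e12  # bytes/s, theoretical figure quoted in this article

# (model size in GiB, average tokens/s) copied from the results table
TABLE = {
    "Q4_K_M": (4.58, 65.958),
    "Q5_K_M": (5.33, 62.544),
    "Q6_K": (6.14, 54.1),
    "Q8_K_XL": (9.84, 45.04),
    "BF16": (14.96, 31.376),
    "F32": (29.92, 20.164),
}


def implied_bandwidth(size_gib, tokens_per_s):
    """Bytes/s read, assuming each token streams all weights exactly once."""
    return size_gib * GIB * tokens_per_s


for name, (size, tps) in TABLE.items():
    bw = implied_bandwidth(size, tps)
    share = bw / PEAK_BANDWIDTH * 100
    print(f"{name:8s} {bw / 1e9:6.1f} GB/s  ({share:4.1f}% of theoretical peak)")

# Overall slowdown from the fastest to the slowest variant.
q4_tps, f32_tps = TABLE["Q4_K_M"][1], TABLE["F32"][1]
print(f"Q4_K_M -> F32 drop: {(q4_tps - f32_tps) / q4_tps * 100:.2f} %")
```

Under this simplification, F32 comes closest to the theoretical bandwidth and Q4_K_M stays furthest from it, even though Q4_K_M generates the most tokens per second.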

Here is the output of all the tests:

1. Meta Llama 3.1 8B Instruct Q4_K_M

Using unsloth Llama-3.1-8B-Instruct-Q4_K_M.gguf file.

    /root/llama.cpp/build/bin/llama-bench --numa distribute -t 112 -p 0 -n 128,256,512,1024,2048 -m /root/models/unsloth/Llama-3.1-8B-Instruct-Q4_K_M.gguf -ngl 99
    ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no
    ggml_cuda_init: GGML_CUDA_FORCE_CUBLAS: no
    ggml_cuda_init: found 1 ROCm devices:
      Device 0: AMD Radeon Graphics, gfx906:sramecc+:xnack- (0x906), VMM: no, Wave Size: 64
    | model                          |       size |     params | backend    | ngl |            test |                  t/s |
    | ------------------------------ | ---------: | ---------: | ---------- | --: | --------------: | -------------------: |
    | llama 8B Q4_K - Medium         |   4.58 GiB |     8.03 B | ROCm,RPC   |  99 |           tg128 |         71.07 ± 0.06 |
    | llama 8B Q4_K - Medium         |   4.58 GiB |     8.03 B | ROCm,RPC   |  99 |           tg256 |         69.76 ± 0.08 |
    | llama 8B Q4_K - Medium         |   4.58 GiB |     8.03 B | ROCm,RPC   |  99 |           tg512 |         67.80 ± 0.08 |
    | llama 8B Q4_K - Medium         |   4.58 GiB |     8.03 B | ROCm,RPC   |  99 |          tg1024 |         63.62 ± 0.44 |
    | llama 8B Q4_K - Medium         |   4.58 GiB |     8.03 B | ROCm,RPC   |  99 |          tg2048 |         57.54 ± 0.04 |

    build: 618575c5 (6192)

2. Meta Llama 3.1 8B Instruct Q5_K_M

Using unsloth Llama-3.1-8B-Instruct-Q5_K_M.gguf file.

    /root/llama.cpp/build/bin/llama-bench --numa distribute -t 112 -p 0 -n 128,256,512,1024,2048 -m /root/models/unsloth/Llama-3.1-8B-Instruct-Q5_K_M.gguf -ngl 99
    ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no
    ggml_cuda_init: GGML_CUDA_FORCE_CUBLAS: no
    ggml_cuda_init: found 1 ROCm devices:
      Device 0: AMD Radeon Graphics, gfx906:sramecc+:xnack- (0x906), VMM: no, Wave Size: 64
    | model                          |       size |     params | backend    | ngl |            test |                  t/s |
    | ------------------------------ | ---------: | ---------: | ---------- | --: | --------------: | -------------------: |
    | llama 8B Q5_K - Medium         |   5.33 GiB |     8.03 B | ROCm,RPC   |  99 |           tg128 |         67.04 ± 0.22 |
    | llama 8B Q5_K - Medium         |   5.33 GiB |     8.03 B | ROCm,RPC   |  99 |           tg256 |         66.07 ± 0.09 |
    | llama 8B Q5_K - Medium         |   5.33 GiB |     8.03 B | ROCm,RPC   |  99 |           tg512 |         64.33 ± 0.14 |
    | llama 8B Q5_K - Medium         |   5.33 GiB |     8.03 B | ROCm,RPC   |  99 |          tg1024 |         60.31 ± 0.30 |
    | llama 8B Q5_K - Medium         |   5.33 GiB |     8.03 B | ROCm,RPC   |  99 |          tg2048 |         54.97 ± 0.03 |

    build: 618575c5 (6192)

3. Meta Llama 3.1 8B Instruct Q6_K

Using unsloth Llama-3.1-8B-Instruct-Q6_K.gguf file.

    /root/llama.cpp/build/bin/llama-bench --numa distribute -t 112 -p 0 -n 128,256,512,1024,2048 -m /root/models/unsloth/Llama-3.1-8B-Instruct-Q6_K.gguf -ngl 99
    ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no
    ggml_cuda_init: GGML_CUDA_FORCE_CUBLAS: no
    ggml_cuda_init: found 1 ROCm devices:
      Device 0: AMD Radeon Graphics, gfx906:sramecc+:xnack- (0x906), VMM: no, Wave Size: 64
    | model                          |       size |     params | backend    | ngl |            test |                  t/s |
    | ------------------------------ | ---------: | ---------: | ---------- | --: | --------------: | -------------------: |
    | llama 8B Q6_K                  |   6.14 GiB |     8.03 B | ROCm,RPC   |  99 |           tg128 |         58.14 ± 0.06 |
    | llama 8B Q6_K                  |   6.14 GiB |     8.03 B | ROCm,RPC   |  99 |           tg256 |         57.07 ± 0.14 |
    | llama 8B Q6_K                  |   6.14 GiB |     8.03 B | ROCm,RPC   |  99 |           tg512 |         55.59 ± 0.29 |
    | llama 8B Q6_K                  |   6.14 GiB |     8.03 B | ROCm,RPC   |  99 |          tg1024 |         51.87 ± 0.34 |
    | llama 8B Q6_K                  |   6.14 GiB |     8.03 B | ROCm,RPC   |  99 |          tg2048 |         47.83 ± 0.07 |

    build: 618575c5 (6192)

4. Meta Llama 3.1 8B Instruct Q8_K_XL

Using unsloth Llama-3.1-8B-Instruct-UD-Q8_K_XL.gguf file.

    /root/llama.cpp/build/bin/llama-bench --numa distribute -t 112 -p 0 -n 128,256,512,1024,2048 -m /root/models/unsloth/Llama-3.1-8B-Instruct-UD-Q8_K_XL.gguf -ngl 99
    ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no
    ggml_cuda_init: GGML_CUDA_FORCE_CUBLAS: no
    ggml_cuda_init: found 1 ROCm devices:
      Device 0: AMD Radeon Graphics, gfx906:sramecc+:xnack- (0x906), VMM: no, Wave Size: 64
    | model                          |       size |     params | backend    | ngl |            test |                  t/s |
    | ------------------------------ | ---------: | ---------: | ---------- | --: | --------------: | -------------------: |
    | llama 8B Q8_0                  |   9.84 GiB |     8.03 B | ROCm,RPC   |  99 |           tg128 |         47.22 ± 0.04 |
    | llama 8B Q8_0                  |   9.84 GiB |     8.03 B | ROCm,RPC   |  99 |           tg256 |         46.95 ± 0.03 |
    | llama 8B Q8_0                  |   9.84 GiB |     8.03 B | ROCm,RPC   |  99 |           tg512 |         46.04 ± 0.14 |
    | llama 8B Q8_0                  |   9.84 GiB |     8.03 B | ROCm,RPC   |  99 |          tg1024 |         43.90 ± 0.09 |
    | llama 8B Q8_0                  |   9.84 GiB |     8.03 B | ROCm,RPC   |  99 |          tg2048 |         41.09 ± 0.02 |

    build: 618575c5 (6192)

5. Meta Llama 3.1 8B Instruct BF16

Using unsloth Llama-3.1-8B-Instruct-BF16.gguf file.

    /root/llama.cpp/build/bin/llama-bench --numa distribute -t 112 -p 0 -n 128,256,512,1024,2048 -m /root/models/unsloth/Llama-3.1-8B-Instruct-BF16.gguf -ngl 99
    ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no
    ggml_cuda_init: GGML_CUDA_FORCE_CUBLAS: no
    ggml_cuda_init: found 1 ROCm devices:
      Device 0: AMD Radeon Graphics, gfx906:sramecc+:xnack- (0x906), VMM: no, Wave Size: 64
    | model                          |       size |     params | backend    | ngl |            test |                  t/s |
    | ------------------------------ | ---------: | ---------: | ---------- | --: | --------------: | -------------------: |
    | llama 8B BF16                  |  14.96 GiB |     8.03 B | ROCm,RPC   |  99 |           tg128 |         32.43 ± 0.01 |
    | llama 8B BF16                  |  14.96 GiB |     8.03 B | ROCm,RPC   |  99 |           tg256 |         32.36 ± 0.03 |
    | llama 8B BF16                  |  14.96 GiB |     8.03 B | ROCm,RPC   |  99 |           tg512 |         31.81 ± 0.09 |
    | llama 8B BF16                  |  14.96 GiB |     8.03 B | ROCm,RPC   |  99 |          tg1024 |         30.84 ± 0.02 |
    | llama 8B BF16                  |  14.96 GiB |     8.03 B | ROCm,RPC   |  99 |          tg2048 |         29.44 ± 0.03 |

    build: 618575c5 (6192)

6. Meta Llama 3.1 8B Instruct F32

Using bartowski Meta-Llama-3.1-8B-Instruct-f32.gguf file.

    /root/llama.cpp/build/bin/llama-bench --numa distribute -t 112 -ngl 99 -p 0 -n 128,256,512,1024,2048 -m /root/models/bartowski/Meta-Llama-3.1-8B-Instruct-f32.gguf
    ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no
    ggml_cuda_init: GGML_CUDA_FORCE_CUBLAS: no
    ggml_cuda_init: found 1 ROCm devices:
      Device 0: AMD Radeon Graphics, gfx906:sramecc+:xnack- (0x906), VMM: no, Wave Size: 64
    | model                          |       size |     params | backend    | ngl |            test |                  t/s |
    | ------------------------------ | ---------: | ---------: | ---------- | --: | --------------: | -------------------: |
    | llama 8B all F32               |  29.92 GiB |     8.03 B | ROCm,RPC   |  99 |           tg128 |         20.48 ± 0.03 |
    | llama 8B all F32               |  29.92 GiB |     8.03 B | ROCm,RPC   |  99 |           tg256 |         20.50 ± 0.01 |
    | llama 8B all F32               |  29.92 GiB |     8.03 B | ROCm,RPC   |  99 |           tg512 |         20.36 ± 0.00 |
    | llama 8B all F32               |  29.92 GiB |     8.03 B | ROCm,RPC   |  99 |          tg1024 |         20.04 ± 0.00 |
    | llama 8B all F32               |  29.92 GiB |     8.03 B | ROCm,RPC   |  99 |          tg2048 |         19.44 ± 0.00 |

    build: 618575c5 (6192)
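The result tables printed by llama-bench can be turned into numbers with a few lines of code. The following is a minimal sketch that assumes the seven-column layout shown in the outputs above; other llama.cpp builds or options may print different columns. The function name `parse_bench_rows` is hypothetical, and the sample text is copied from the Q4_K_M run.

```python
SAMPLE = """\
ggml_cuda_init: found 1 ROCm devices:
| model                          |       size |     params | backend    | ngl |            test |                  t/s |
| ------------------------------ | ---------: | ---------: | ---------- | --: | --------------: | -------------------: |
| llama 8B Q4_K - Medium         |   4.58 GiB |     8.03 B | ROCm,RPC   |  99 |           tg128 |         71.07 ± 0.06 |
| llama 8B Q4_K - Medium         |   4.58 GiB |     8.03 B | ROCm,RPC   |  99 |           tg256 |         69.76 ± 0.08 |
| llama 8B Q4_K - Medium         |   4.58 GiB |     8.03 B | ROCm,RPC   |  99 |           tg512 |         67.80 ± 0.08 |
| llama 8B Q4_K - Medium         |   4.58 GiB |     8.03 B | ROCm,RPC   |  99 |          tg1024 |         63.62 ± 0.44 |
| llama 8B Q4_K - Medium         |   4.58 GiB |     8.03 B | ROCm,RPC   |  99 |          tg2048 |         57.54 ± 0.04 |
build: 618575c5 (6192)
"""


def parse_bench_rows(text):
    """Extract token-generation rows from llama-bench markdown output."""
    rows = []
    for line in text.splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        # Skip log lines, the header and the separator: only rows whose
        # "test" cell starts with "tg" are token-generation results.
        if len(cells) != 7 or not cells[5].startswith("tg"):
            continue
        mean, _, stddev = cells[6].partition("±")
        rows.append({
            "model": cells[0],
            "test": cells[5],
            "tps": float(mean),
            "stddev": float(stddev),
        })
    return rows


rows = parse_bench_rows(SAMPLE)
mean_tps = sum(r["tps"] for r in rows) / len(rows)
print(f"{len(rows)} rows, average {mean_tps:.3f} t/s")
```

Averaging the parsed `tps` values of one run gives the tokens/s figure reported for that quantization in the summary table.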

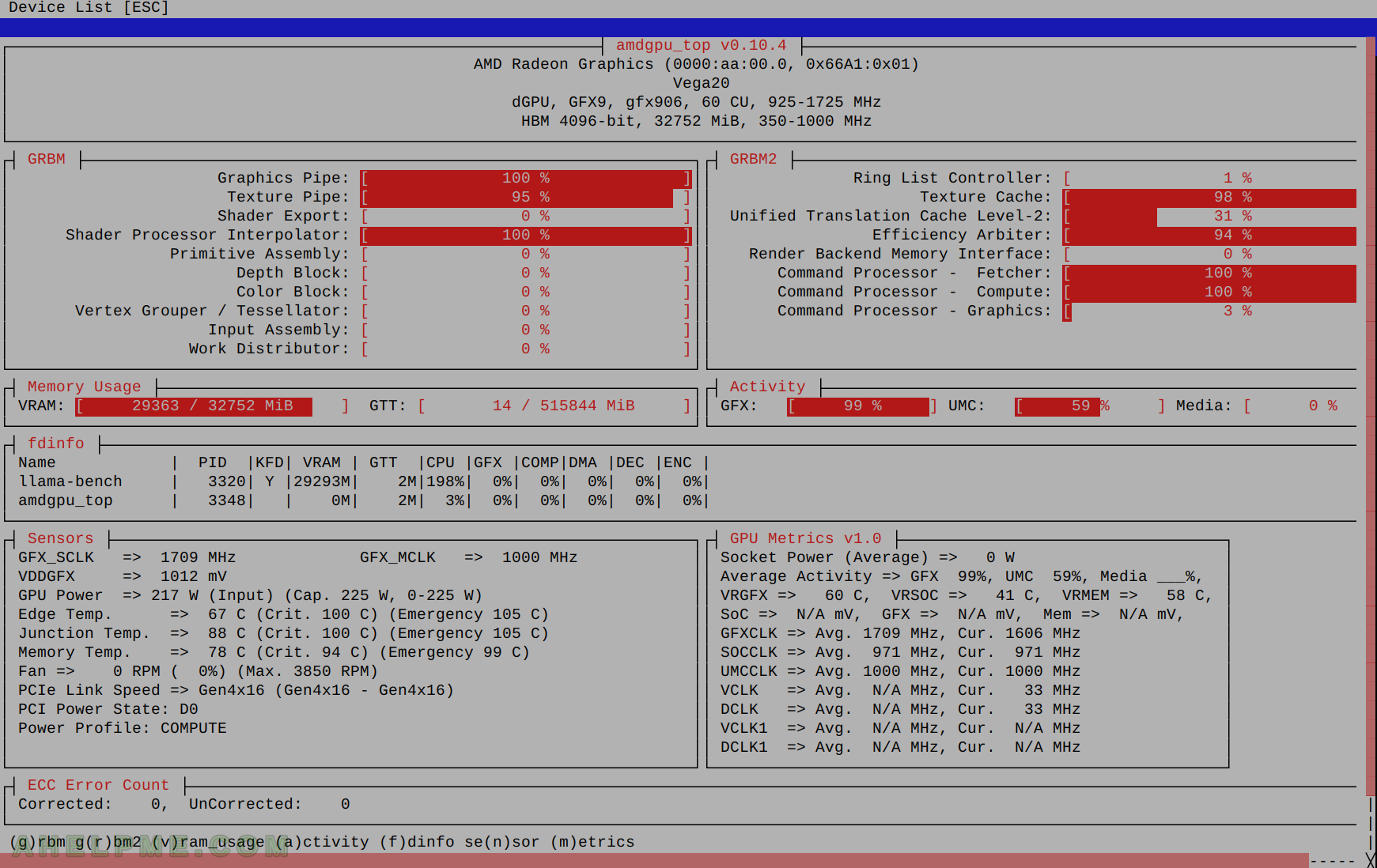

SCREENSHOT 1) The AMDGPU top utility with temperatures, GPU activity and memory usage.

Before all tests, the following cache-cleaning commands were executed:

    echo 0 > /proc/sys/kernel/numa_balancing
    echo 3 > /proc/sys/vm/drop_caches
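All six runs in this article share the same llama-bench flags and differ only in the model file. As a convenience, the sketch below builds those command lines from a list of model paths. The paths and flags are the ones used in this article; the helper name `bench_command` is hypothetical, and the script only prints the commands, so running them (for example with `subprocess.run`) is left to the reader.

```python
import shlex

LLAMA_BENCH = "/root/llama.cpp/build/bin/llama-bench"

# Model files used in this article.
MODELS = [
    "/root/models/unsloth/Llama-3.1-8B-Instruct-Q4_K_M.gguf",
    "/root/models/unsloth/Llama-3.1-8B-Instruct-Q5_K_M.gguf",
    "/root/models/unsloth/Llama-3.1-8B-Instruct-Q6_K.gguf",
    "/root/models/unsloth/Llama-3.1-8B-Instruct-UD-Q8_K_XL.gguf",
    "/root/models/unsloth/Llama-3.1-8B-Instruct-BF16.gguf",
    "/root/models/bartowski/Meta-Llama-3.1-8B-Instruct-f32.gguf",
]


def bench_command(model_path, threads=112, gen_sizes=(128, 256, 512, 1024, 2048)):
    """Build the llama-bench argument list with the flags used in this article."""
    return [
        LLAMA_BENCH,
        "--numa", "distribute",
        "-t", str(threads),
        "-p", "0",                               # no prompt-processing test
        "-n", ",".join(map(str, gen_sizes)),     # token-generation sizes
        "-m", model_path,
        "-ngl", "99",                            # offload all layers to the GPU
    ]


for model in MODELS:
    print(shlex.join(bench_command(model)))
```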