This article covers CPU-only inference on a modern server processor, the AMD EPYC 9554. The model is DeepSeek R1 Distill Llama 70B, tested at several quantization levels to show how token generation speed (tokens per second) and memory consumption change. DeepSeek R1 Distill Llama 70B is a solid LLM that can successfully replace the current paid LLM options. The article focuses only on benchmarking token generation speed; the output quality of the different quantized versions is covered in other papers.

The testing bench is:

- Single socket AMD EPYC 9554 CPU – 64 core CPU / 128 threads

- 192GB RAM (12 × 16GB Samsung DDR5 5600MHz modules), with all 12 CPU memory channels populated.

- ASUS K14PA-U24-T Series motherboard

- Testing with LLAMA.CPP – llama-bench

- Theoretical memory bandwidth: 460.8 GB/s (according to the official documents from AMD)

- The context window is the llama-bench default of 4K. Memory consumption could vary greatly if the context window is increased.

- More information for the setup and benchmarks – LLM inference benchmarks with llamacpp and AMD EPYC 9554 CPU
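The 460.8 GB/s figure can be reproduced with a quick calculation. The sketch below assumes the usual peak-bandwidth formula (channels × transfer rate × bytes per transfer) and a transfer rate of 4800 MT/s, which is the value that yields AMD's number; the constant names are illustrative and not taken from any tool. Note that 460.8 GB/s matches 4800 MT/s rather than the 5600 rating of the installed modules.

```python
# Theoretical peak DRAM bandwidth = channels * transfer rate * bytes per transfer.
CHANNELS = 12              # from the test bench list above
BYTES_PER_TRANSFER = 8     # 64-bit data path per DDR5 channel (ECC bits excluded)
TRANSFER_RATE_MT_S = 4800  # assumption: the rate that reproduces 460.8 GB/s


def peak_bandwidth_gb_s(channels: int, mt_per_s: int, bytes_per_transfer: int) -> float:
    """Return the theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    return channels * mt_per_s * bytes_per_transfer / 1000


bandwidth = peak_bandwidth_gb_s(CHANNELS, TRANSFER_RATE_MT_S, BYTES_PER_TRANSFER)
print(f"{bandwidth:.1f} GB/s")
```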

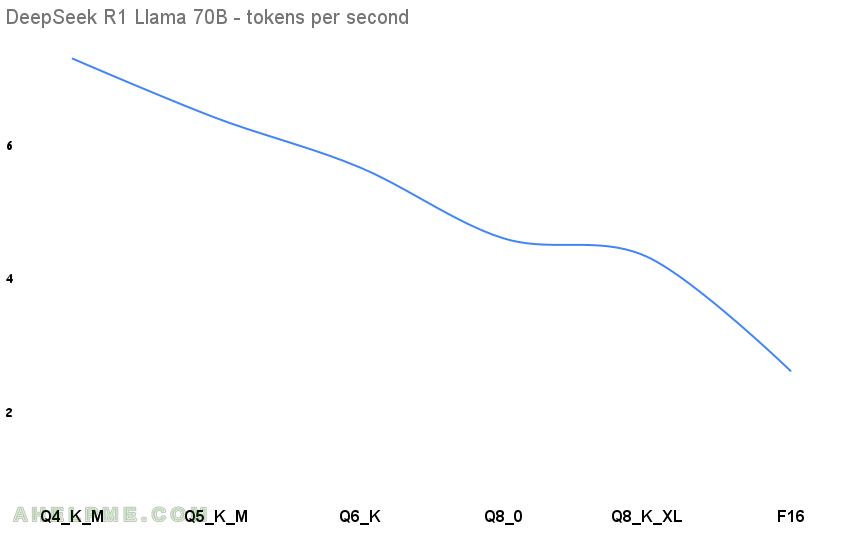

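Here are the results. The first benchmark, Q4_K_M, is the starting point, because Q4 quantizations are really popular: they offer good quality and a very small footprint compared to the full-sized model. Each tokens/s value is the average of the five llama-bench runs listed further down, and the diff column is the percentage drop in tokens per second relative to the previous row.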

| N | model | parameters | quantization | memory | diff t/s vs. previous row (%) | tokens/s |
|---|---|---|---|---|---|---|
| 1 | DeepSeek R1 Distill Llama | 70B | Q4_K_M | 39.59 GiB | 0 | 7.126 |
| 2 | DeepSeek R1 Distill Llama | 70B | Q5_K_M | 46.51 GiB | 12.518 | 6.234 |
| 3 | DeepSeek R1 Distill Llama | 70B | Q6_K | 53.91 GiB | 11.870 | 5.494 |
| 4 | DeepSeek R1 Distill Llama | 70B | Q8_0 | 69.82 GiB | 19.366 | 4.430 |
| 5 | DeepSeek R1 Distill Llama | 70B | Q8_K_XL | 75.65 GiB | 6.275 | 4.152 |
| 6 | DeepSeek R1 Distill Llama | 70B | F16 | 131.42 GiB | 41.329 | 2.436 |
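The table values can be recomputed from the raw llama-bench runs listed further down. The following is a minimal sketch, assuming the tokens/s column is the plain mean of the five runs (tg128 to tg2048) and the diff column is the percentage drop relative to the previous row; both assumptions reproduce the published numbers to within rounding. The names `RUNS` and `summarize` are made up for this example.

```python
from statistics import mean

# t/s for tg128, tg256, tg512, tg1024, tg2048, copied from the llama-bench outputs
RUNS = {
    "Q4_K_M": [7.15, 7.14, 7.16, 7.14, 7.04],
    "Q5_K_M": [6.24, 6.25, 6.27, 6.24, 6.17],
    "Q6_K": [5.50, 5.51, 5.52, 5.50, 5.44],
    "Q8_0": [4.44, 4.44, 4.44, 4.44, 4.39],
    "Q8_K_XL": [4.17, 4.16, 4.16, 4.15, 4.12],
    "F16": [2.44, 2.44, 2.44, 2.44, 2.42],
}


def summarize(runs: dict[str, list[float]]) -> list[tuple[str, float, float]]:
    """Return (quantization, mean t/s, % drop versus the previous row)."""
    rows = []
    previous = None
    for quant, samples in runs.items():
        average = mean(samples)
        drop = 0.0 if previous is None else (previous - average) / previous * 100
        rows.append((quant, average, drop))
        previous = average
    return rows


rows = summarize(RUNS)
for quant, average, drop in rows:
    print(f"{quant:8s} {average:6.3f} t/s  {drop:7.3f} %")

# Overall slowdown from the first row (Q4_K_M) to the last row (F16)
total_drop = (rows[0][1] - rows[-1][1]) / rows[0][1] * 100
print(f"Q4_K_M -> F16: {total_drop:.3f} %")
```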

Going from Q4 to F16 costs 65.815% of the token generation speed. In practice, around 7 tokens per second is usable, while around 2.5 is really slow. It is worth noting that the F16 model needs more than 128 GB of memory (131.42 GiB), so a computer with 128 GB of RAM cannot load the F16 version of the model.
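To check which quantizations fit a given amount of RAM, the model sizes from the table can be compared against the available memory. This is only a rough sketch: it counts the weights alone, treats a "128G" machine as having at most 128 GiB, and leaves the headroom for the operating system and the context (KV cache) as a parameter you have to choose yourself. `fits_in_ram` is a hypothetical helper, not part of llama.cpp.

```python
# Model sizes in GiB as reported by llama-bench in the results table
MODEL_SIZES_GIB = {
    "Q4_K_M": 39.59,
    "Q5_K_M": 46.51,
    "Q6_K": 53.91,
    "Q8_0": 69.82,
    "Q8_K_XL": 75.65,
    "F16": 131.42,
}


def fits_in_ram(model_gib: float, ram_gib: float, headroom_gib: float = 0.0) -> bool:
    """True if the weights plus the chosen headroom fit in RAM (all values in GiB)."""
    return model_gib + headroom_gib <= ram_gib


RAM_GIB = 128.0  # assumption: a "128G" machine exposes at most 128 GiB
for quant, size in MODEL_SIZES_GIB.items():
    verdict = "fits" if fits_in_ram(size, RAM_GIB) else "does not fit"
    print(f"{quant:8s} {size:7.2f} GiB  {verdict} in {RAM_GIB:.0f} GiB")
```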

Here is the full output of all tests:

1. DeepSeek R1 Distill Llama 70B Q4_K_M

Using unsloth DeepSeek-R1-Distill-Llama-70B-Q4_K_M.gguf file.

    root@srv ~ # /root/llama.cpp/build/bin/llama-bench --numa distribute -t 64 -p 0 -n 128,256,512,1024,2048 -m /root/models/tests/DeepSeek-R1-Distill-Llama-70B-Q4_K_M.gguf
    | model                          |       size |     params | backend    | threads |          test |                  t/s |
    | ------------------------------ | ---------: | ---------: | ---------- | ------: | ------------: | -------------------: |
    | llama 70B Q4_K - Medium        |  39.59 GiB |    70.55 B | BLAS,RPC   |      64 |         tg128 |          7.15 ± 0.00 |
    | llama 70B Q4_K - Medium        |  39.59 GiB |    70.55 B | BLAS,RPC   |      64 |         tg256 |          7.14 ± 0.00 |
    | llama 70B Q4_K - Medium        |  39.59 GiB |    70.55 B | BLAS,RPC   |      64 |         tg512 |          7.16 ± 0.00 |
    | llama 70B Q4_K - Medium        |  39.59 GiB |    70.55 B | BLAS,RPC   |      64 |        tg1024 |          7.14 ± 0.00 |
    | llama 70B Q4_K - Medium        |  39.59 GiB |    70.55 B | BLAS,RPC   |      64 |        tg2048 |          7.04 ± 0.00 |

    build: 51f311e0 (4753)

2. DeepSeek R1 Distill Llama 70B Q5_K_M

Using unsloth DeepSeek-R1-Distill-Llama-70B-Q5_K_M.gguf file.

    root@srv ~ # /root/llama.cpp/build/bin/llama-bench --numa distribute -t 64 -p 0 -n 128,256,512,1024,2048 -m /root/models/tests/DeepSeek-R1-Distill-Llama-70B-Q5_K_M.gguf
    | model                          |       size |     params | backend    | threads |          test |                  t/s |
    | ------------------------------ | ---------: | ---------: | ---------- | ------: | ------------: | -------------------: |
    | llama 70B Q5_K - Medium        |  46.51 GiB |    70.55 B | BLAS,RPC   |      64 |         tg128 |          6.24 ± 0.00 |
    | llama 70B Q5_K - Medium        |  46.51 GiB |    70.55 B | BLAS,RPC   |      64 |         tg256 |          6.25 ± 0.00 |
    | llama 70B Q5_K - Medium        |  46.51 GiB |    70.55 B | BLAS,RPC   |      64 |         tg512 |          6.27 ± 0.00 |
    | llama 70B Q5_K - Medium        |  46.51 GiB |    70.55 B | BLAS,RPC   |      64 |        tg1024 |          6.24 ± 0.00 |
    | llama 70B Q5_K - Medium        |  46.51 GiB |    70.55 B | BLAS,RPC   |      64 |        tg2048 |          6.17 ± 0.00 |

    build: 51f311e0 (4753)

3. DeepSeek R1 Distill Llama 70B Q6_K

Using unsloth DeepSeek-R1-Distill-Llama-70B-Q6_K-00001-of-00002.gguf and DeepSeek-R1-Distill-Llama-70B-Q6_K-00002-of-00002.gguf files.

    root@srv ~ # /root/llama.cpp/build/bin/llama-bench --numa distribute -t 64 -p 0 -n 128,256,512,1024,2048 -m /root/models/tests/DeepSeek-R1-Distill-Llama-70B-Q6_K-00001-of-00002.gguf
    | model                          |       size |     params | backend    | threads |          test |                  t/s |
    | ------------------------------ | ---------: | ---------: | ---------- | ------: | ------------: | -------------------: |
    | llama 70B Q6_K                 |  53.91 GiB |    70.55 B | BLAS,RPC   |      64 |         tg128 |          5.50 ± 0.00 |
    | llama 70B Q6_K                 |  53.91 GiB |    70.55 B | BLAS,RPC   |      64 |         tg256 |          5.51 ± 0.00 |
    | llama 70B Q6_K                 |  53.91 GiB |    70.55 B | BLAS,RPC   |      64 |         tg512 |          5.52 ± 0.00 |
    | llama 70B Q6_K                 |  53.91 GiB |    70.55 B | BLAS,RPC   |      64 |        tg1024 |          5.50 ± 0.00 |
    | llama 70B Q6_K                 |  53.91 GiB |    70.55 B | BLAS,RPC   |      64 |        tg2048 |          5.44 ± 0.00 |

    build: 51f311e0 (4753)

4. DeepSeek R1 Distill Llama 70B Q8_0

Using unsloth DeepSeek-R1-Distill-Llama-70B-Q8_0-00001-of-00002.gguf and DeepSeek-R1-Distill-Llama-70B-Q8_0-00002-of-00002.gguf files.

    root@srv ~ # /root/llama.cpp/build/bin/llama-bench --numa distribute -t 64 -p 0 -n 128,256,512,1024,2048 -m /root/models/tests/DeepSeek-R1-Distill-Llama-70B-Q8_0-00001-of-00002.gguf
    | model                          |       size |     params | backend    | threads |          test |                  t/s |
    | ------------------------------ | ---------: | ---------: | ---------- | ------: | ------------: | -------------------: |
    | llama 70B Q8_0                 |  69.82 GiB |    70.55 B | BLAS,RPC   |      64 |         tg128 |          4.44 ± 0.00 |
    | llama 70B Q8_0                 |  69.82 GiB |    70.55 B | BLAS,RPC   |      64 |         tg256 |          4.44 ± 0.00 |
    | llama 70B Q8_0                 |  69.82 GiB |    70.55 B | BLAS,RPC   |      64 |         tg512 |          4.44 ± 0.00 |
    | llama 70B Q8_0                 |  69.82 GiB |    70.55 B | BLAS,RPC   |      64 |        tg1024 |          4.44 ± 0.00 |
    | llama 70B Q8_0                 |  69.82 GiB |    70.55 B | BLAS,RPC   |      64 |        tg2048 |          4.39 ± 0.00 |

    build: 51f311e0 (4753)

5. DeepSeek R1 Distill Llama 70B Q8_K_XL

Using unsloth DeepSeek-R1-Distill-Llama-70B-UD-Q8_K_XL-00001-of-00002.gguf and DeepSeek-R1-Distill-Llama-70B-UD-Q8_K_XL-00002-of-00002.gguf files.

    root@srv ~ # /root/llama.cpp/build/bin/llama-bench --numa distribute -t 64 -p 0 -n 128,256,512,1024,2048 -m /root/models/tests/DeepSeek-R1-Distill-Llama-70B-UD-Q8_K_XL-00001-of-00002.gguf
    | model                          |       size |     params | backend    | threads |          test |                  t/s |
    | ------------------------------ | ---------: | ---------: | ---------- | ------: | ------------: | -------------------: |
    | llama 70B Q8_0                 |  75.65 GiB |    70.55 B | BLAS,RPC   |      64 |         tg128 |          4.17 ± 0.00 |
    | llama 70B Q8_0                 |  75.65 GiB |    70.55 B | BLAS,RPC   |      64 |         tg256 |          4.16 ± 0.00 |
    | llama 70B Q8_0                 |  75.65 GiB |    70.55 B | BLAS,RPC   |      64 |         tg512 |          4.16 ± 0.00 |
    | llama 70B Q8_0                 |  75.65 GiB |    70.55 B | BLAS,RPC   |      64 |        tg1024 |          4.15 ± 0.00 |
    | llama 70B Q8_0                 |  75.65 GiB |    70.55 B | BLAS,RPC   |      64 |        tg2048 |          4.12 ± 0.00 |

    build: 51f311e0 (4753)

6. DeepSeek R1 Distill Llama 70B F16

Using unsloth DeepSeek-R1-Distill-Llama-70B-F16-00001-of-00003.gguf, DeepSeek-R1-Distill-Llama-70B-F16-00002-of-00003.gguf and DeepSeek-R1-Distill-Llama-70B-F16-00003-of-00003.gguf files.

    root@srv ~ # /root/llama.cpp/build/bin/llama-bench --numa distribute -t 64 -p 0 -n 128,256,512,1024,2048 -m /root/models/tests/DeepSeek-R1-Distill-Llama-70B-F16-00001-of-00003.gguf
    | model                          |       size |     params | backend    | threads |          test |                  t/s |
    | ------------------------------ | ---------: | ---------: | ---------- | ------: | ------------: | -------------------: |
    | llama 70B F16                  | 131.42 GiB |    70.55 B | BLAS,RPC   |      64 |         tg128 |          2.44 ± 0.00 |
    | llama 70B F16                  | 131.42 GiB |    70.55 B | BLAS,RPC   |      64 |         tg256 |          2.44 ± 0.00 |
    | llama 70B F16                  | 131.42 GiB |    70.55 B | BLAS,RPC   |      64 |         tg512 |          2.44 ± 0.00 |
    | llama 70B F16                  | 131.42 GiB |    70.55 B | BLAS,RPC   |      64 |        tg1024 |          2.44 ± 0.00 |
    | llama 70B F16                  | 131.42 GiB |    70.55 B | BLAS,RPC   |      64 |        tg2048 |          2.42 ± 0.00 |

    build: 51f311e0 (4753)
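Since llama-bench prints its results as a Markdown table, the t/s values can be pulled out with a few lines of code instead of being copied by hand. The sketch below assumes the default table layout shown in the outputs above; `parse_bench_table` and `tokens_per_second` are names invented for this example, and the sample text is a shortened copy of the F16 run.

```python
def parse_bench_table(text: str) -> list[dict[str, str]]:
    """Parse a Markdown table as printed by llama-bench into a list of row dicts."""
    header = None
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip the command line, the build line and blank lines
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if header is None:
            header = cells  # the first table line holds the column names
        elif all(set(cell) <= set("-:") for cell in cells):
            continue  # separator row such as "| ---: | ---: |"
        else:
            rows.append(dict(zip(header, cells)))
    return rows


def tokens_per_second(row: dict[str, str]) -> float:
    """Return the mean from a 't/s' cell formatted like '2.44 ± 0.00'."""
    return float(row["t/s"].split("±")[0])


SAMPLE = """
| model | size | params | backend | threads | test | t/s |
| ----- | ---: | -----: | ------- | ------: | ---: | --: |
| llama 70B F16 | 131.42 GiB | 70.55 B | BLAS,RPC | 64 | tg128 | 2.44 ± 0.00 |
| llama 70B F16 | 131.42 GiB | 70.55 B | BLAS,RPC | 64 | tg2048 | 2.42 ± 0.00 |
build: 51f311e0 (4753)
"""

parsed = parse_bench_table(SAMPLE)
for row in parsed:
    print(row["test"], tokens_per_second(row))
```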

Before the tests, the following commands were run to disable automatic NUMA balancing and to drop the page cache:

    echo 0 > /proc/sys/kernel/numa_balancing
    echo 3 > /proc/sys/vm/drop_caches
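To repeat the same run for several model files, the preparation steps and the llama-bench command line can be wrapped in a small script. This is a sketch only: the binary path, flags and model path are the ones used in this article, while `build_bench_command` and `prepare_system` are hypothetical helpers. `prepare_system` needs root and is therefore only defined here, not called.

```python
import shlex
from pathlib import Path

LLAMA_BENCH = "/root/llama.cpp/build/bin/llama-bench"  # path used in this article

# The two /proc writes from the commands above: path -> value
PREP_WRITES = {
    "/proc/sys/kernel/numa_balancing": "0",
    "/proc/sys/vm/drop_caches": "3",
}


def prepare_system() -> None:
    """Mirror the two echo commands above; requires root privileges."""
    for path, value in PREP_WRITES.items():
        Path(path).write_text(value + "\n")


def build_bench_command(
    model_path: str,
    threads: int = 64,
    gen_lengths: tuple[int, ...] = (128, 256, 512, 1024, 2048),
) -> list[str]:
    """Build the argument list used for every test in this article."""
    return [
        LLAMA_BENCH,
        "--numa", "distribute",                    # NUMA mode used in the tests
        "-t", str(threads),                        # number of threads
        "-p", "0",                                 # no prompt-processing test
        "-n", ",".join(map(str, gen_lengths)),     # token generation lengths
        "-m", model_path,                          # GGUF model file
    ]


command = build_bench_command(
    "/root/models/tests/DeepSeek-R1-Distill-Llama-70B-Q4_K_M.gguf"
)
print(shlex.join(command))
```

Once `prepare_system()` has been run as root, the returned list can be passed to `subprocess.run(command, check=True)` to execute the benchmark.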